LangChain-25 ReAct: Letting the LLM Think and Decide Its Own Next Step (a Route to AutoGPT, an Important AGI Milestone)

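Background

ReAct (Reasoning and Acting) is an emerging framework that lets a large language model (LLM) reach a specific goal by combining logical reasoning with a sequence of actions. Its core idea is to give the model human-like abilities to reason and act, so that it can make decisions and carry out operations more efficiently and intelligently across a range of tasks and environments.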

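Core Components

The ReAct framework is built on three key concepts: Thought, Act (action), and Obs (observation).

- Thought: generated by the LLM, this is the basis for what the model does and why. It represents the model's logical reasoning about the task at hand and comes before any decision.

- Act: the concrete action the LLM decides to take in the current step. This usually means choosing a suitable tool or API and generating the arguments it needs.

- Obs: how the framework takes in input from the outside world, much like the LLM's "senses". It passes external feedback back to the model to support further analysis or decisions.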
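The Thought / Act / Obs cycle can be sketched as a small loop. This is a minimal illustration under stated assumptions, not LangChain's implementation: `make_scripted_llm`, `react_loop`, and the `lookup` tool are hypothetical names, and the "LLM" is a scripted stand-in that replays canned replies instead of calling a real model.

```python
import re
from typing import Callable, Dict, List


def make_scripted_llm(replies: List[str]) -> Callable[[str], str]:
    """Hypothetical stand-in for an LLM: returns canned replies in order."""
    remaining = iter(replies)
    return lambda prompt: next(remaining)


def react_loop(
    llm: Callable[[str], str],
    tools: Dict[str, Callable[[str], str]],
    question: str,
    max_steps: int = 5,
) -> str:
    """Alternate Thought/Act and Obs until a final answer or the step limit."""
    scratchpad = ""  # accumulated Thought / Action / Observation history
    for _ in range(max_steps):
        # Thought + Act: the model reasons, then names a tool and its input.
        reply = llm(f"Question: {question}\nThought:{scratchpad}")
        if "Final Answer:" in reply:
            return reply.split("Final Answer:", 1)[1].strip()
        match = re.search(r"Action:\s*(.+?)\s*\nAction Input:\s*(.*)", reply)
        if match is None:
            raise ValueError(f"Could not parse an action from: {reply!r}")
        tool_name, tool_input = match.group(1), match.group(2).strip()
        # Obs: run the tool and feed its result back to the model.
        observation = tools[tool_name](tool_input)
        scratchpad += f"{reply}\nObservation: {observation}\nThought:"
    return "Stopped: step limit reached"


llm = make_scripted_llm([
    " I should look this up\nAction: lookup\nAction Input: LangChain",
    " I now know the final answer\nFinal Answer: A framework for building LLM applications",
])
tools = {"lookup": lambda query: f"notes about {query}"}
print(react_loop(llm, tools, "what is LangChain?"))
# prints: A framework for building LLM applications
```

The step limit matters in practice: without it, a model that never emits a final answer would keep calling tools indefinitely.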

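Install Dependencies

The code below imports from the langchain, langchain-community, and langchain-openai packages. The Tavily search tool reads a TAVILY_API_KEY environment variable, and the OpenAI model reads OPENAI_API_KEY.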

Prompt

This is the hwchase17/react prompt that the code pulls from LangChain Hub; you can modify it.

```text
Answer the following questions as best you can. You have access to the following tools:

{tools}

Use the following format:

Question: the input question you must answer
Thought: you should always think about what to do
Action: the action to take, should be one of [{tool_names}]
Action Input: the input to the action
Observation: the result of the action
... (this Thought/Action/Action Input/Observation can repeat N times)
Thought: I now know the final answer
Final Answer: the final answer to the original input question

Begin!

Question: {input}
Thought:{agent_scratchpad}
```
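To make the four placeholders concrete, here is a rough sketch of how they might be filled on each step. It uses plain `str.format` rather than LangChain's prompt classes; `render_tools`, `render_scratchpad`, `build_prompt`, and the `search` tool description are hypothetical names chosen for illustration.

```python
from typing import Dict, List, Tuple

TEMPLATE = """Answer the following questions as best you can. You have access to the following tools:

{tools}

Use the following format:

Question: the input question you must answer
Thought: you should always think about what to do
Action: the action to take, should be one of [{tool_names}]
Action Input: the input to the action
Observation: the result of the action
... (this Thought/Action/Action Input/Observation can repeat N times)
Thought: I now know the final answer
Final Answer: the final answer to the original input question

Begin!

Question: {input}
Thought:{agent_scratchpad}"""


def render_tools(tools: Dict[str, str]) -> str:
    """One 'name: description' line per tool, for the {tools} slot."""
    return "\n".join(f"{name}: {desc}" for name, desc in tools.items())


def render_scratchpad(steps: List[Tuple[str, str]]) -> str:
    """Replay earlier (model_text, observation) pairs for {agent_scratchpad}."""
    return "".join(f"{text}\nObservation: {obs}\nThought: " for text, obs in steps)


def build_prompt(question: str, tools: Dict[str, str], steps: List[Tuple[str, str]]) -> str:
    return TEMPLATE.format(
        tools=render_tools(tools),
        tool_names=", ".join(tools),
        input=question,
        agent_scratchpad=render_scratchpad(steps),
    )


tools = {"search": "Look things up on the web"}
first = build_prompt("what is LangChain?", tools, [])
later = build_prompt(
    "what is LangChain?",
    tools,
    [(" I should search\nAction: search\nAction Input: LangChain", "a framework")],
)
print(later)
```

On the first step the scratchpad is empty, so the prompt ends at `Thought:` and the model continues from there. On later steps the earlier thoughts, actions, and observations are appended, which is how the model sees its own history.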

Write the Code

```python
from langchain import hub
from langchain.agents import AgentExecutor, create_react_agent
from langchain_community.tools.tavily_search import TavilySearchResults
from langchain_openai import OpenAI

# A single web-search tool that returns one result per query
tools = [TavilySearchResults(max_results=1)]

# Get the prompt to use - you can modify this!
# (its full text is listed in the Prompt section above)
prompt = hub.pull("hwchase17/react")
```

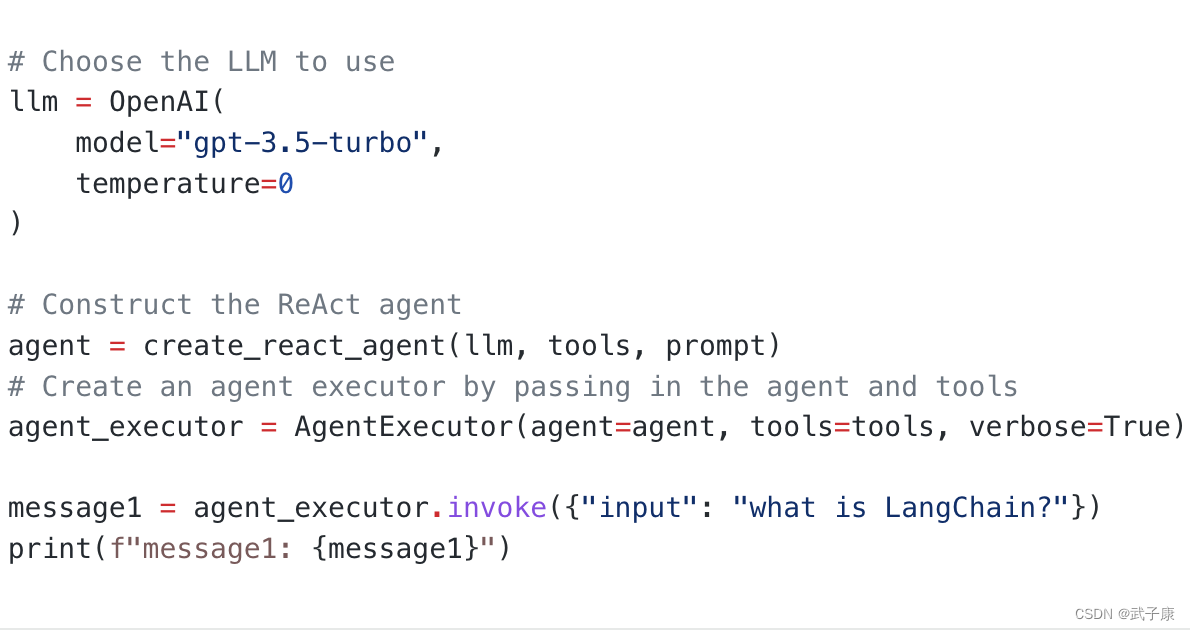

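```python
# Choose the LLM to use
# Note: OpenAI is the completion-style wrapper, while gpt-3.5-turbo is a chat
# model. If the API rejects this combination, ChatOpenAI (also exported by
# langchain_openai) may be the better fit.
llm = OpenAI(
    model="gpt-3.5-turbo",
    temperature=0
)

# Construct the ReAct agent
agent = create_react_agent(llm, tools, prompt)

# Create an agent executor by passing in the agent and tools
agent_executor = AgentExecutor(agent=agent, tools=tools, verbose=True)

message1 = agent_executor.invoke({"input": "what is LangChain?"})
print(f"message1: {message1}")
```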

Execution Result

As the log shows, the model reasons on its own and decides each next step (see the execution log for details).

```text
➜ python3 test26.py
> Entering new AgentExecutor chain...
I should search for LangChain to see what it is
Action: tavily_search_results_json
Action Input: "LangChain"[{'url': 'https://towardsdatascience.com/getting-started-with-langchain-a-beginners-guide-to-building-llm-powered-applications-95fc8898732c', 'content': 'linkedin.com/in/804250ab\nMore from Leonie Monigatti and Towards Data Science\nLeonie Monigatti\nin\nTowards Data Science\nRetrieval-Augmented Generation (RAG): From Theory to LangChain Implementation\nFrom the theory of the original academic paper to its Python implementation with OpenAI, Weaviate, and LangChain\n--\n2\nMarco Peixeiro\nin\nTowards Data Science\nTimeGPT: The First Foundation Model for Time Series Forecasting\nExplore the first generative pre-trained forecasting model and apply it in a project with Python\n--\n22\nRahul Nayak\nin\nTowards Data Science\nHow to Convert Any Text Into a Graph of Concepts\nA method to convert any text corpus into a Knowledge Graph using Mistral 7B.\n--\n32\nLeonie Monigatti\nin\nTowards Data Science\nRecreating Andrej Karpathy’s Weekend Project\u200a—\u200aa Movie Search Engine\nBuilding a movie recommender system with OpenAI embeddings and a vector database\n--\n3\nRecommended from Medium\nKrishna Yogi\nBuilding a question-answering system using LLM on your private data\n--\n6\nRahul Nayak\nin\nTowards Data Science\nHow to Convert Any Text Into a Graph of Concepts\nA method to convert any text corpus into a Knowledge Graph using Mistral 7B.\n--\n32\nLists\nPredictive Modeling w/ Python\nPractical Guides to Machine Learning\nNatural Language Processing\nChatGPT prompts\nOnkar Mishra\nUsing langchain for Question Answering on own data\nStep-by-step guide to using langchain to chat with own data\n--\n10\nAmogh Agastya\nin\nBetter Programming\nHarnessing Retrieval Augmented Generation With Langchain\nImplementing RAG using Langchain\n--\n6\nAnindyadeep\nHow to integrate custom LLM using langchain. This is part 1 of my mini-series: Building end to end LLM powered applications without Open AI’s API\n--\n3\nAkriti Upadhyay\nin\nAccredian\nImplementing RAG with Langchain and Hugging Face\nUsing Open Source for Information Retrieval\n--\n6\nHelp\nStatus\nAbout\nCareers\nBlog\nPrivacy\nTerms\nText to speech\nTeams A Beginner’s Guide to Building LLM-Powered Applications\nA LangChain tutorial to build anything with large language models in Python\nLeonie Monigatti\nFollow\nTowards Data Science\n--\n27\nShare\n GitHub - hwchase17/langchain: ⚡ Building applications with LLMs through composability ⚡\n⚡ Building applications with LLMs through composability ⚡ Production Support: As you move your LangChains into…\ngithub.com\nWhat is LangChain?\nLangChain is a framework built to help you build LLM-powered applications more easily by providing you with the following:\nIt is an open-source project (GitHub repository) created by Harrison Chase.\n --\n--\n27\nWritten by Leonie Monigatti\nTowards Data Science\nDeveloper Advocate @'}] I should read the first search result to learn more about LangChain
Action: tavily_search_results_json
Action Input: "LangChain tutorial"[{'url': 'https://python.langchain.com/docs/get_started/quickstart', 'content': "Once we have a key we'll want to set it as an environment variable by running:\nIf you'd prefer not to set an environment variable you can pass the key in directly via the openai_api_key named parameter when initiating the OpenAI LLM class:\nLangSmith\u200b\nMany of the applications you build with LangChain will contain multiple steps with multiple invocations of LLM calls.\n The fact that LLM and ChatModel accept the same inputs means that you can directly swap them for one another in most chains without breaking anything,\nthough it's of course important to think about how inputs are being coerced and how that may affect model performance.\n The base message interface is defined by BaseMessage, which has two required attributes:\nLangChain provides several objects to easily distinguish between different roles:\nIf none of those roles sound right, there is also a ChatMessage class where you can specify the role manually.\n This chain will take input variables, pass those to a prompt template to create a prompt, pass the prompt to a language model, and then pass the output through an (optional) output parser.\n Next steps\u200b\nWe've touched on how to build an application with LangChain, how to trace it with LangSmith, and how to serve it with LangServe.\n"}] I should read the LangChain tutorial to learn more about LangChain
Action: tavily_search_results_json
Action Input: "LangChain tutorial"[{'url': 'https://python.langchain.com/docs/additional_resources/tutorials', 'content': 'Learn how to use Langchain, a Python library for building AI applications with natural language processing and generation. Explore books, handbooks, cheatsheets, courses, and tutorials by various authors and topics.'}] I should read the LangChain tutorial to learn more about LangChain
Action: tavily_search_results_json
```
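In the log above the agent issues the same "LangChain tutorial" search more than once. As a rough sketch of how such repetition could be spotted, the snippet below pulls (tool, input) pairs out of a verbose log and reports duplicates. `extract_steps`, `repeated_steps`, and `sample_log` are hypothetical names, and the sample is an abbreviated copy of the log with each observation replaced by `[...]`.

```python
import re
from collections import Counter
from typing import List, Tuple

# Matches an "Action:" line immediately followed by a quoted "Action Input:".
STEP_RE = re.compile(
    r'^Action:\s*(?P<tool>\S+)\s*\nAction Input:\s*"(?P<arg>[^"]*)"',
    re.MULTILINE,
)


def extract_steps(log: str) -> List[Tuple[str, str]]:
    """Return the (tool, input) pair for every complete step in the log."""
    return [(m["tool"], m["arg"]) for m in STEP_RE.finditer(log)]


def repeated_steps(steps: List[Tuple[str, str]]) -> List[Tuple[str, str]]:
    """Return the steps that occur more than once, in first-seen order."""
    counts = Counter(steps)
    return [step for step, n in counts.items() if n > 1]


# Abbreviated sample: observations from the real log are replaced by [...]
sample_log = '''I should search for LangChain to see what it is
Action: tavily_search_results_json
Action Input: "LangChain"[...] I should read the first search result to learn more about LangChain
Action: tavily_search_results_json
Action Input: "LangChain tutorial"[...] I should read the LangChain tutorial to learn more about LangChain
Action: tavily_search_results_json
Action Input: "LangChain tutorial"[...] I should read the LangChain tutorial to learn more about LangChain
Action: tavily_search_results_json
'''

steps = extract_steps(sample_log)
print(repeated_steps(steps))
# prints: [('tavily_search_results_json', 'LangChain tutorial')]
```

The final `Action:` line has no input after it, so it is not counted as a step. To bound loops like this one, `AgentExecutor` accepts a `max_iterations` argument.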
