Aviation Big Data: Predicting Aircraft Coordinates (Flight Trajectories) from the ADS-B Message System (Part 3)

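This is the final chapter. It covers the neural network used for prediction and proposes a new network, N-Inception-LSTM. The related paper has since been published; its citation follows.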

Y. Chen, J. Sun, Y. Lin, G. Gui and H. Sari, "Hybrid n-Inception-LSTM-based aircraft coordinate prediction method for secure air traffic," IEEE Transactions on Intelligent Transportation Systems, vol. 23, no. 3, pp. 2773-2783, Mar. 2022, doi: 10.1109/TITS.2021.3095129. (Impact factor: 9.6)

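The aviation big data series is now complete, and readers are welcome to get in touch. The published paper cited above contains more details on this topic.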

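Chapter 1: Project background and dataset analysis

Chapter 2: Building the neural network input/output datasets

Chapter 4: Project resources and open-source release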


Environment and versions

GPU: Nvidia 2080Ti

python: 3.5.6

numpy: 1.14.5

cudatoolkit: 9.0

cudnn: 7.6.5

tensorflow-gpu: 1.10.0

keras: 2.2.4

Network architecture

Introductions to Inception and LSTM networks are easy to find online, so they are not repeated here.

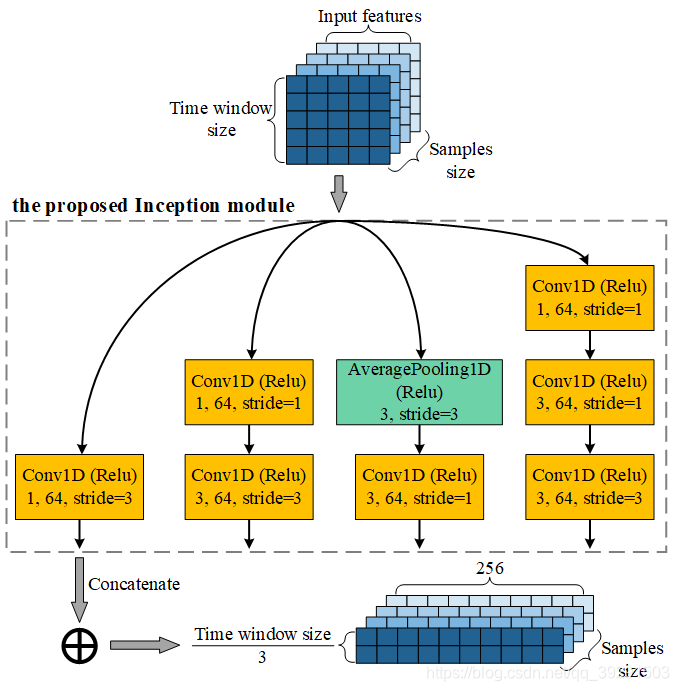

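Combining Inception with LSTM was inspired by CNN-LSTM networks, which extract both spatial and temporal information from the dataset. Replacing the plain CNN with Inception modules improves the network's adaptability to features at different scales. Figure 1 shows the Inception module used in this article.

Figure 1. A single Inception module

Figure 1 shows a new Inception module designed around the dimensions and size of the input and output sample sets; experiments indicate that it performs well. The Inception-V1 through Inception-V4 modules are mostly used for image processing, so their convolutional layers are mostly two-dimensional. The module in Figure 1 uses one-dimensional convolutions instead, which are sufficient for this dataset and also reduce the model's computational cost.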
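To make the cost argument concrete, here is a minimal sketch that counts the trainable parameters of one Inception module. The branch layout (kernel sizes 1 and 3, 64 filters per layer, 15 input features) is taken from the implementation code in this article. The two-dimensional variant is purely hypothetical: it assumes each kernel of size 1 or 3 becomes 1x1 or 3x3, and it uses the parameter count only as a rough proxy for computation. The function names are illustrative.

```python
def conv_params(kernel_shape, c_in, c_out):
    """Weights plus biases of one convolution layer."""
    kernel_volume = 1
    for k in kernel_shape:
        kernel_volume *= k
    return kernel_volume * c_in * c_out + c_out


def inception_module_params(c_in, filters=64, ndim=1):
    """Parameter count of one four-branch module; ndim=2 is a hypothetical 2D variant."""
    one = (1,) * ndim      # kernel of size 1 (1D) or 1x1 (2D)
    three = (3,) * ndim    # kernel of size 3 (1D) or 3x3 (2D)
    branch_a = conv_params(one, c_in, filters)
    branch_b = conv_params(one, c_in, filters) + conv_params(three, filters, filters)
    branch_c = conv_params(three, c_in, filters)  # average pooling has no weights
    branch_d = conv_params(one, c_in, filters) + 2 * conv_params(three, filters, filters)
    return branch_a + branch_b + branch_c + branch_d


params_1d = inception_module_params(15, ndim=1)
params_2d = inception_module_params(15, ndim=2)
print("1D module parameters:", params_1d)
print("2D module parameters (hypothetical):", params_2d)
print("ratio 2D / 1D: %.2f" % (params_2d / params_1d))
```

Under these assumptions the one-dimensional module needs well under half the parameters of its two-dimensional counterpart, because each size-3 kernel holds 3 weights per channel pair instead of 9.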

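Figure 2. N-Inception-LSTM network architecture

Figure 2 shows the N-Inception-LSTM network used in this article. N is the number of Inception-LSTM networks running in parallel; this structure widens the network and enlarges its receptive field. Each Inception model consists of four of the Inception modules shown in Figure 1 and is followed by two LSTM modules.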
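As a quick check on how the sequence changes through the four stacked modules, the sketch below traces the tensor shape along one parallel path. It assumes the settings used in this article's implementation code (window 64, 15 features, four branches of 64 filters, stride 3) and relies on the fact that Keras layers with `padding='same'` and stride `s` produce an output length of `ceil(length / s)`. The helper names are illustrative.

```python
import math


def same_padding_length(length, stride):
    """Output length of a Keras conv/pool layer with padding='same'."""
    return math.ceil(length / stride)


def trace_shapes(window=64, features=15, n_modules=4,
                 filters=64, branches=4, stride=3):
    """Return the (time_steps, channels) shape after each Inception module."""
    shapes = [(window, features)]
    length = window
    for _ in range(n_modules):
        # Every branch downsamples the time axis by the same stride,
        # and the concatenation stacks the branch outputs along channels.
        length = same_padding_length(length, stride)
        shapes.append((length, branches * filters))
    return shapes


shapes = trace_shapes()
for index, shape in enumerate(shapes):
    label = "input" if index == 0 else "after module %d" % index
    print(label, shape)
```

With a window of 64 the time axis shrinks to a single step before the LSTM layers, so a larger window (or a smaller stride) would leave a longer sequence for the LSTM to process. This is an observation from the shape arithmetic only, not a claim about the results reported here.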

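Implementation

When building the dataset, take a sampling-window length of 64 as an example: the network input then has shape (64, 15) and the output has size 64. The network, built with keras, is as follows: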

```python
import keras
from keras.layers import (Input, Conv1D, AveragePooling1D, LSTM,
                          Dropout, Dense)
from keras.models import Model

# Input: one sampling window of 64 time steps with 15 features each
_input = Input(shape=(64, 15), dtype='float32')

# ---- Parallel network 0: four Inception modules, then two LSTM layers ----
# Inception module 1
branch_a10 = Conv1D(64, 1, activation='relu', strides=3, padding='same')(_input)
branch_b10 = Conv1D(64, 1, activation='relu', padding='same')(_input)
branch_b10 = Conv1D(64, 3, activation='relu', strides=3, padding='same')(branch_b10)
branch_c10 = AveragePooling1D(3, strides=3, padding='same')(_input)
branch_c10 = Conv1D(64, 3, activation='relu', padding='same')(branch_c10)
branch_d10 = Conv1D(64, 1, activation='relu', padding='same')(_input)
branch_d10 = Conv1D(64, 3, activation='relu', padding='same')(branch_d10)
branch_d10 = Conv1D(64, 3, activation='relu', strides=3, padding='same')(branch_d10)
y10 = keras.layers.concatenate(
    [branch_a10, branch_b10, branch_c10, branch_d10], axis=-1)

# Inception module 2
branch_a20 = Conv1D(64, 1, activation='relu', strides=3, padding='same')(y10)
branch_b20 = Conv1D(64, 1, activation='relu', padding='same')(y10)
branch_b20 = Conv1D(64, 3, activation='relu', strides=3, padding='same')(branch_b20)
branch_c20 = AveragePooling1D(3, strides=3, padding='same')(y10)
branch_c20 = Conv1D(64, 3, activation='relu', padding='same')(branch_c20)
branch_d20 = Conv1D(64, 1, activation='relu', padding='same')(y10)
branch_d20 = Conv1D(64, 3, activation='relu', padding='same')(branch_d20)
branch_d20 = Conv1D(64, 3, activation='relu', strides=3, padding='same')(branch_d20)
y20 = keras.layers.concatenate(
    [branch_a20, branch_b20, branch_c20, branch_d20], axis=-1)

# Inception module 3
branch_a30 = Conv1D(64, 1, activation='relu', strides=3, padding='same')(y20)
branch_b30 = Conv1D(64, 1, activation='relu', padding='same')(y20)
branch_b30 = Conv1D(64, 3, activation='relu', strides=3, padding='same')(branch_b30)
branch_c30 = AveragePooling1D(3, strides=3, padding='same')(y20)
branch_c30 = Conv1D(64, 3, activation='relu', padding='same')(branch_c30)
branch_d30 = Conv1D(64, 1, activation='relu', padding='same')(y20)
branch_d30 = Conv1D(64, 3, activation='relu', padding='same')(branch_d30)
branch_d30 = Conv1D(64, 3, activation='relu', strides=3, padding='same')(branch_d30)
y30 = keras.layers.concatenate(
    [branch_a30, branch_b30, branch_c30, branch_d30], axis=-1)

# Inception module 4
branch_a40 = Conv1D(64, 1, activation='relu', strides=3, padding='same')(y30)
branch_b40 = Conv1D(64, 1, activation='relu', padding='same')(y30)
branch_b40 = Conv1D(64, 3, activation='relu', strides=3, padding='same')(branch_b40)
branch_c40 = AveragePooling1D(3, strides=3, padding='same')(y30)
branch_c40 = Conv1D(64, 3, activation='relu', padding='same')(branch_c40)
branch_d40 = Conv1D(64, 1, activation='relu', padding='same')(y30)
branch_d40 = Conv1D(64, 3, activation='relu', padding='same')(branch_d40)
branch_d40 = Conv1D(64, 3, activation='relu', strides=3, padding='same')(branch_d40)
y40 = keras.layers.concatenate(
    [branch_a40, branch_b40, branch_c40, branch_d40], axis=-1)

# Two stacked LSTM layers, each followed by dropout
y50 = LSTM(units=128, return_sequences=True, return_state=False)(y40)
y50 = Dropout(0.5)(y50)
y50 = LSTM(units=128, return_state=False)(y50)
y50 = Dropout(0.5)(y50)

# ---- Parallel network 1: same structure, separate weights ----
# Inception module 1
branch_a11 = Conv1D(64, 1, activation='relu', strides=3, padding='same')(_input)
branch_b11 = Conv1D(64, 1, activation='relu', padding='same')(_input)
branch_b11 = Conv1D(64, 3, activation='relu', strides=3, padding='same')(branch_b11)
branch_c11 = AveragePooling1D(3, strides=3, padding='same')(_input)
branch_c11 = Conv1D(64, 3, activation='relu', padding='same')(branch_c11)
branch_d11 = Conv1D(64, 1, activation='relu', padding='same')(_input)
branch_d11 = Conv1D(64, 3, activation='relu', padding='same')(branch_d11)
branch_d11 = Conv1D(64, 3, activation='relu', strides=3, padding='same')(branch_d11)
y11 = keras.layers.concatenate(
    [branch_a11, branch_b11, branch_c11, branch_d11], axis=-1)

# Inception module 2
branch_a21 = Conv1D(64, 1, activation='relu', strides=3, padding='same')(y11)
branch_b21 = Conv1D(64, 1, activation='relu', padding='same')(y11)
branch_b21 = Conv1D(64, 3, activation='relu', strides=3, padding='same')(branch_b21)
branch_c21 = AveragePooling1D(3, strides=3, padding='same')(y11)
branch_c21 = Conv1D(64, 3, activation='relu', padding='same')(branch_c21)
branch_d21 = Conv1D(64, 1, activation='relu', padding='same')(y11)
branch_d21 = Conv1D(64, 3, activation='relu', padding='same')(branch_d21)
branch_d21 = Conv1D(64, 3, activation='relu', strides=3, padding='same')(branch_d21)
y21 = keras.layers.concatenate(
    [branch_a21, branch_b21, branch_c21, branch_d21], axis=-1)

# Inception module 3
branch_a31 = Conv1D(64, 1, activation='relu', strides=3, padding='same')(y21)
branch_b31 = Conv1D(64, 1, activation='relu', padding='same')(y21)
branch_b31 = Conv1D(64, 3, activation='relu', strides=3, padding='same')(branch_b31)
branch_c31 = AveragePooling1D(3, strides=3, padding='same')(y21)
branch_c31 = Conv1D(64, 3, activation='relu', padding='same')(branch_c31)
branch_d31 = Conv1D(64, 1, activation='relu', padding='same')(y21)
branch_d31 = Conv1D(64, 3, activation='relu', padding='same')(branch_d31)
branch_d31 = Conv1D(64, 3, activation='relu', strides=3, padding='same')(branch_d31)
y31 = keras.layers.concatenate(
    [branch_a31, branch_b31, branch_c31, branch_d31], axis=-1)

# Inception module 4
branch_a41 = Conv1D(64, 1, activation='relu', strides=3, padding='same')(y31)
branch_b41 = Conv1D(64, 1, activation='relu', padding='same')(y31)
branch_b41 = Conv1D(64, 3, activation='relu', strides=3, padding='same')(branch_b41)
branch_c41 = AveragePooling1D(3, strides=3, padding='same')(y31)
branch_c41 = Conv1D(64, 3, activation='relu', padding='same')(branch_c41)
branch_d41 = Conv1D(64, 1, activation='relu', padding='same')(y31)
branch_d41 = Conv1D(64, 3, activation='relu', padding='same')(branch_d41)
branch_d41 = Conv1D(64, 3, activation='relu', strides=3, padding='same')(branch_d41)
y41 = keras.layers.concatenate(
    [branch_a41, branch_b41, branch_c41, branch_d41], axis=-1)

# Two stacked LSTM layers, each followed by dropout
y51 = LSTM(units=128, return_sequences=True, return_state=False)(y41)
y51 = Dropout(0.5)(y51)
y51 = LSTM(units=128, return_state=False)(y51)
y51 = Dropout(0.5)(y51)

# ---- Merge the two parallel networks and map to the 64 output values ----
_output = keras.layers.add([y50, y51])
_output = Dense(64)(_output)
model = Model(_input, _output)
```

Experimental results

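Applying the trained model to the test set and restoring the output to its original dimensions gives the predicted coordinates. This article compares how the time sampling-window size and the number of parallel models affect the final result.

Figure 3. Comparison of different time sampling-window sizes

Figure 4. Comparison of different numbers of parallel models

With the other variables held fixed, comparing the predictions of different models against the actual trajectory shows that, for N-Inception-LSTM, a larger N and a larger time sampling window give predicted trajectories that follow the actual trajectory more closely and fluctuate less. Compared with CNN-LSTM and a standalone CNN, N-Inception-LSTM has a large advantage, so that comparison is not discussed here; interested readers can run it themselves.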

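The two-dimensional mean error between the 2-Inception-LSTM predictions and the actual trajectory is about 9 km per predicted point. This result assumes a very extreme environment in which the prediction relies only on signal strength and sensor coordinates. Over the vast ocean, an error of this size is entirely acceptable. It can give search-and-rescue teams a fast and reliable search area, and it is at least more timely or more reliable than waiting for satellite imagery or searching on the basis of data that may have been tampered with.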
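The article does not show how the two-dimensional mean error was computed, so the following is only one plausible sketch. It assumes the restored predictions and the ground truth are (latitude, longitude) pairs in degrees, measures each point's error as the great-circle (haversine) distance on a spherical Earth of radius 6371 km, and averages over all points. The function names and the sample coordinates are illustrative, not data from the experiments.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius, spherical approximation


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in km between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    a = (math.sin(d_phi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def mean_2d_error_km(predicted, actual):
    """Average horizontal distance between matching predicted and actual points."""
    if not predicted or len(predicted) != len(actual):
        raise ValueError("tracks must be non-empty and of equal length")
    total = sum(haversine_km(p[0], p[1], a[0], a[1])
                for p, a in zip(predicted, actual))
    return total / len(predicted)


# Illustrative tracks only: three (lat, lon) points each.
actual_track = [(30.00, 120.00), (30.05, 120.10), (30.10, 120.20)]
predicted_track = [(30.02, 120.05), (30.01, 120.12), (30.15, 120.15)]
print("mean 2D error: %.2f km" % mean_2d_error_km(predicted_track, actual_track))
```

If the coordinates are instead expressed in a projected, metre-based system, a plain Euclidean distance would replace the haversine step.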

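Chapter summary

This chapter proposed the N-Inception-LSTM model. Because of limited computing power, only 2-Inception-LSTM with a time sampling-window length of 64 was tested, but the prediction error is expected to decrease gradually as N and the time sampling window grow. The network could also be made lighter, for example by replacing its convolutional layers with depthwise separable convolutions, which would cut the computational cost and leave more room for performance gains.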
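To give a rough sense of the saving from depthwise separable convolutions, the sketch below compares parameter counts for one layer. It assumes a kernel size of 3, 256 input channels (the width after concatenating four 64-filter branches) and 64 output channels, and it follows the usual factorization into a per-channel depthwise filter plus a 1x1 pointwise projection, which is how Keras's `SeparableConv1D` is organized. The function names are illustrative.

```python
def conv1d_params(kernel_size, c_in, c_out):
    """Weights plus biases of a standard 1D convolution."""
    return kernel_size * c_in * c_out + c_out


def separable_conv1d_params(kernel_size, c_in, c_out, depth_multiplier=1):
    """Weights plus biases of a depthwise separable 1D convolution."""
    depthwise = kernel_size * c_in * depth_multiplier   # one filter per input channel
    pointwise = c_in * depth_multiplier * c_out          # 1x1 projection across channels
    return depthwise + pointwise + c_out                 # single bias vector


standard = conv1d_params(3, 256, 64)
separable = separable_conv1d_params(3, 256, 64)
print("standard Conv1D parameters:", standard)
print("separable Conv1D parameters:", separable)
print("reduction: %.1f%%" % (100 * (1 - separable / standard)))
```

Under these assumptions the separable layer uses roughly a third of the parameters of the standard one; whether prediction accuracy holds up after the swap would have to be checked experimentally.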
