Python Web Scraping in Practice: Fetching Job Listings from Liepin and Storing Them in a Database


This post uses Python to fetch job listings from Liepin and store them in a MySQL database.


Preparation:

Before starting, install Python and the required libraries: requests, pymysql, and PyExecJS (imported as execjs in the code).
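Before running the scraper, it can help to confirm that the third-party modules are importable. The sketch below uses only the standard library. The helper name `missing_packages` is hypothetical, and the module names are the import names used in this article's code.

```python
import importlib.util


def missing_packages(module_names):
    """Return the module names that cannot be found in the current environment."""
    return [name for name in module_names
            if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_packages(["requests", "pymysql", "execjs"])
    if missing:
        print("Missing modules:", ", ".join(missing))
    else:
        print("All required modules are importable.")
```

Anything it reports as missing can then be installed with pip before continuing.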

Fetching the data:

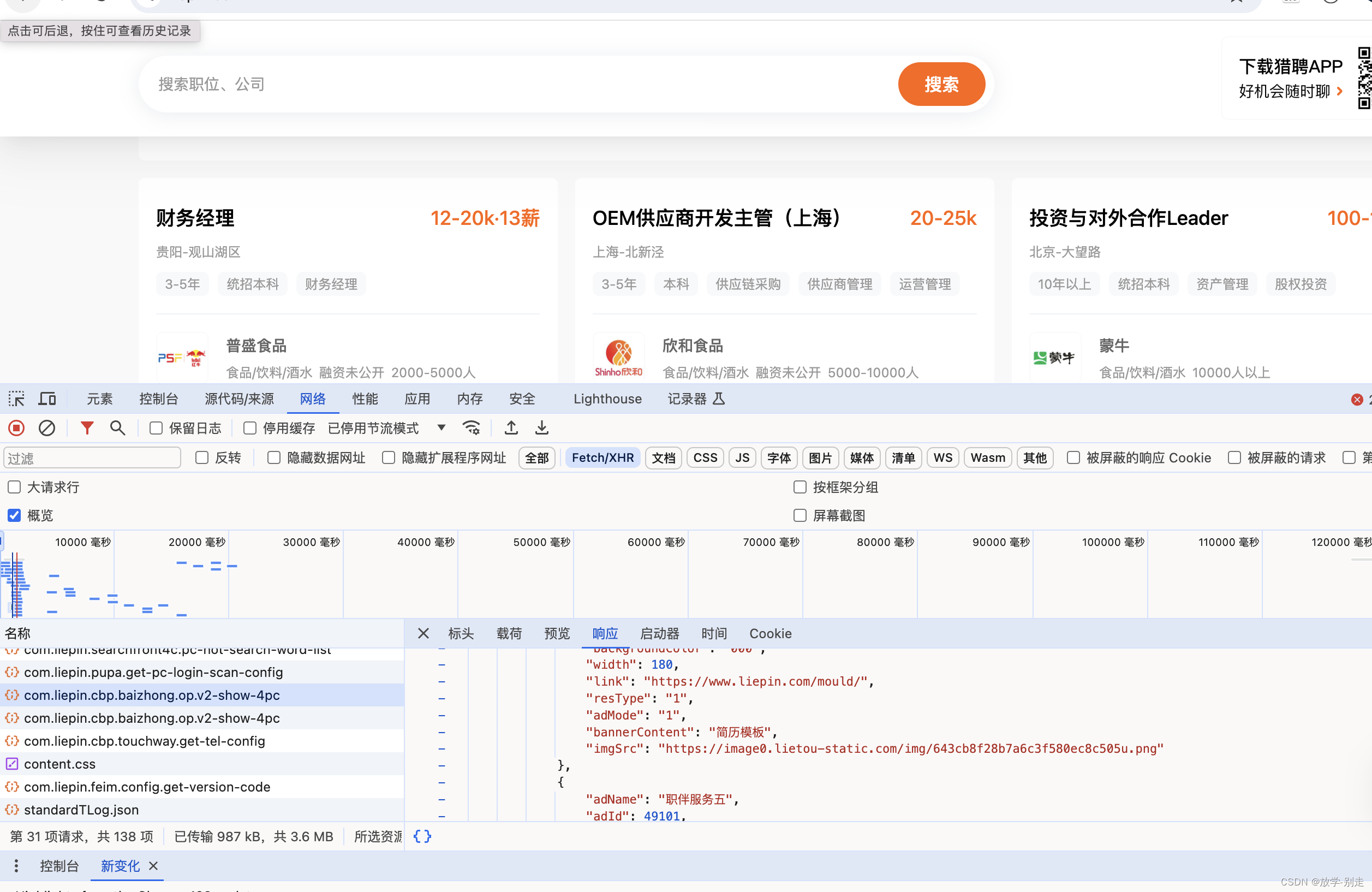

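First, we simulate an HTTP request to Liepin to fetch the job listings, using the requests library. The exact request URL and parameters can be found with the browser's developer tools.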

Parsing the data:

The returned data is usually JSON. We parse it and extract the fields we need, such as job title, company name, work location, and salary.
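As a rough illustration of that extraction step, the sketch below parses a JSON string and pulls out a few fields. The sample document is made up: it only mirrors the key names that this article's code reads (`jobCardList`, `job`, `comp`, and so on), and the helper name `summarize_card` is hypothetical.

```python
import json

# Hypothetical sample shaped like the fields the scraper reads;
# the values are invented for illustration, not real Liepin data.
SAMPLE_RESPONSE = """
{"data": {"data": {"jobCardList": [
  {"job": {"title": "Data Engineer", "dq": "CityA-DistrictB",
           "salary": "15-25k", "labels": ["Hadoop", "Spark"]},
   "comp": {"compName": "Example Co", "compIndustry": "Internet",
            "compScale": "100-499"}}
]}}}
"""


def summarize_card(card):
    """Pick the title, company, city, and salary out of one job card."""
    job, comp = card["job"], card["comp"]
    return {
        "title": job["title"],
        "company": comp["compName"],
        "location": job["dq"].split("-")[0],  # keep only the city part
        "salary": job["salary"],
    }


cards = json.loads(SAMPLE_RESPONSE)["data"]["data"]["jobCardList"]
summaries = [summarize_card(card) for card in cards]
print(summaries)
```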

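Storing the data:

Next, we store the parsed job information in a MySQL database. The pymysql library connects to MySQL and executes the SQL statements that insert rows into the table.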
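To try the parameterized-insert pattern without a running MySQL server, the sketch below uses Python's built-in sqlite3 module as a stand-in. This is a substitution, not the article's method: pymysql uses `%s` placeholders where sqlite3 uses `?`. The column names come from the INSERT statement in this article, but the column types are assumptions, and the sample row is made up.

```python
import sqlite3

# Assumed schema: column names follow the article's INSERT statement,
# the types are a guess.
CREATE_TABLE = """
CREATE TABLE IF NOT EXISTS job_detail (
    job_title TEXT, location TEXT, salary_amount REAL, work_experience TEXT,
    tags TEXT, company_name TEXT, industry TEXT, company_size TEXT
)
"""
INSERT_ROW = "INSERT INTO job_detail VALUES (?, ?, ?, ?, ?, ?, ?, ?)"


def save_rows(connection, rows):
    """Create the table if needed and insert all rows in one transaction."""
    with connection:  # commits on success, rolls back on error
        connection.execute(CREATE_TABLE)
        connection.executemany(INSERT_ROW, rows)


connection = sqlite3.connect(":memory:")
rows = [("Data Engineer", "CityA", 15000.0, "fresh graduate",
         "Hadoop Spark", "Example Co", "Internet", "100-499")]
save_rows(connection, rows)
stored = connection.execute(
    "SELECT job_title, salary_amount FROM job_detail").fetchall()
print(stored)
connection.close()
```

Passing the values separately from the SQL text, rather than formatting them into the string, lets the driver handle quoting.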

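Inspecting Liepin's pages shows that the site renders from JSON fetched through an API, but calling that API first requires reverse engineering a piece of JavaScript.

Below, I walk through the code piece by piece and explain what each part does: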

Import the required libraries:

import time
import requests
import execjs
import pymysql

Here we import the libraries for handling time, sending HTTP requests, executing JavaScript code, and connecting to MySQL.

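Set up the database configuration:

db_config = {
    'host': '127.0.0.1',
    'user': 'root',
    'password': '12345678',
    'database': 'work_data',
    'charset': 'utf8mb4',
    'cursorclass': pymysql.cursors.DictCursor
}

This defines the parameters needed to connect to the database: host address, user name, password, database name, character set, and cursor class.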
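Hard-coding a password is fine for a local demo, but one common alternative is to read the settings from environment variables. The sketch below shows that idea. The function name `load_db_settings` and the `WORK_DB_*` variable names are hypothetical, and the defaults simply repeat the values used in this article.

```python
import os


def load_db_settings(environ=os.environ):
    """Build connection settings, taking overrides from environment variables."""
    return {
        "host": environ.get("WORK_DB_HOST", "127.0.0.1"),
        "user": environ.get("WORK_DB_USER", "root"),
        "password": environ.get("WORK_DB_PASSWORD", ""),
        "database": environ.get("WORK_DB_NAME", "work_data"),
        "charset": "utf8mb4",
    }


# A plain dict stands in for the real environment in this example.
settings = load_db_settings({"WORK_DB_PASSWORD": "example-secret"})
print(settings["host"], settings["database"])
```

The cursor class is left out here so the sketch runs without pymysql; it can be added to the returned dict where pymysql is imported.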

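Read the JavaScript code:

def read_js_code():
    # Load the JavaScript file; the with block closes it after reading
    with open('/Users/shareit/workspace/chart_show/demo.js', encoding='utf-8') as f:
        txt = f.read()
    # Compile the script and call its r() function with the argument 32
    js_code = execjs.compile(txt)
    ckId = js_code.call('r', 32)
    return ckId

This function reads the JavaScript code and executes it to generate a parameter (ckId) that is used in the subsequent HTTP requests.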
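The contents of demo.js are not shown in this article. If the `r` function simply returns a random alphanumeric string of the requested length (a guess based on the call `r(32)` and on the 32-character `skId` and `fkId` values in the request body), a pure-Python stand-in might look like the sketch below. That behavior is an assumption, the name `random_id` is hypothetical, and the server may validate the value in ways this does not reproduce.

```python
import random
import string

# Assumed alphabet: lowercase letters and digits, as in the skId value.
ALPHABET = string.ascii_lowercase + string.digits


def random_id(length=32):
    """Return a random string of lowercase letters and digits."""
    return "".join(random.choices(ALPHABET, k=length))


ck_id = random_id(32)
print(ck_id)
```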

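Send the HTTP request to fetch data:

def post_data():
    url = "https://api-c.liepin.com/api/com.liepin.searchfront4c.pc-search-job"
    # Set the request headers (elided here)
    headers = {...}
    # Industry codes to search (elided here)
    industry_codes = [...]
    # Send one request per industry code; the city is fixed at "410"
    for name in industry_codes:
        for i in range(1):  # only the first page
            # Build the request body
            data = {"data": {
                "mainSearchPcConditionForm": {
                    "city": "410", "dq": "410", "pubTime": "", "currentPage": i, "pageSize": 40,
                    "key": "大数据",  # search keyword: "big data"
                    "suggestTag": "", "workYearCode": "0$1", "compId": "", "compName": "", "compTag": "",
                    "industry": name, "salary": "", "jobKind": "", "compScale": "", "compKind": "",
                    "compStage": "",
                    "eduLevel": ""},
                "passThroughForm": {
                    "scene": "page", "skId": "z33lm3jhwza7k1xjvcyn8lb8e9ghxx1b",
                    "fkId": "z33lm3jhwza7k1xjvcyn8lb8e9ghxx1b",
                    "ckId": read_js_code(),
                    'sfrom': 'search_job_pc'}}}
            # Send the POST request
            response = requests.post(url=url, json=data, headers=headers)
            time.sleep(2)  # throttle the request rate
            parse_data(response)

This function sends the HTTP POST requests that fetch Liepin's job listings. The request URL, headers, and body are all set here.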
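Because the request body is a large nested dictionary in which only the industry, page number, and ckId change between requests, it may be easier to read if it is built by a small helper. The sketch below is one way to do that. The name `build_payload` and its parameters are hypothetical, while the field names and fixed values are copied from the request body in this article.

```python
import json

SK_ID = "z33lm3jhwza7k1xjvcyn8lb8e9ghxx1b"  # fixed value copied from the article


def build_payload(industry, page, ck_id, city="410", keyword="大数据", page_size=40):
    """Assemble the JSON body for one search request."""
    search_form = {
        "city": city, "dq": city, "pubTime": "",
        "currentPage": page, "pageSize": page_size, "key": keyword,
        "suggestTag": "", "workYearCode": "0$1",
        "compId": "", "compName": "", "compTag": "",
        "industry": industry, "salary": "", "jobKind": "",
        "compScale": "", "compKind": "", "compStage": "", "eduLevel": "",
    }
    pass_through = {
        "scene": "page", "skId": SK_ID, "fkId": SK_ID,
        "ckId": ck_id, "sfrom": "search_job_pc",
    }
    return {"data": {"mainSearchPcConditionForm": search_form,
                     "passThroughForm": pass_through}}


payload = build_payload("H01", page=0, ck_id="example-ck-id")
print(json.dumps(payload, ensure_ascii=False)[:80])
```

The result can be passed to `requests.post` through its `json` argument, just like the inline dictionary.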

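Parse and process the data:

def parse_data(response):
    try:
        jobCardList = response.json()['data']['data']['jobCardList']
        sync_data2db(jobCardList)
    except Exception as e:
        # Report the failure instead of silently skipping the page
        print("Failed to process response:", e)

This function parses the HTTP response, extracts the job listings, and calls sync_data2db() to store them in the database.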
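A response that lacks the expected keys (for example, when the request is rejected) makes the chained lookup raise an exception. One alternative is to walk the nesting step by step and fall back to an empty list. The sketch below assumes the same `data` / `data` / `jobCardList` nesting used in this article; the helper name `extract_job_cards` is hypothetical.

```python
def extract_job_cards(payload):
    """Return the job card list, or [] if the expected keys are missing."""
    node = payload
    for key in ("data", "data", "jobCardList"):
        if not isinstance(node, dict) or key not in node:
            return []
        node = node[key]
    return node if isinstance(node, list) else []


print(extract_job_cards({"data": {"data": {"jobCardList": [{"job": {}}]}}}))
print(extract_job_cards({"flag": 0}))
```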

Sync the data to the database:

def sync_data2db(jobCardList):
    connection = pymysql.connect(**db_config)
    try:
        with connection.cursor() as cursor:
            # The INSERT statement is the same for every row
            insert_query = "INSERT INTO job_detail(job_title,location,salary_amount,work_experience,tags,company_name,industry,company_size) VALUES (%s,%s,%s,%s,%s,%s,%s,%s)"
            for job in jobCardList:
                # Build the parameters for this row
                values = (
                    job['job']['title'],
                    job['job']['dq'].split("-")[0],
                    process_salary(job['job']['salary']),
                    # Falls back to '应届' ("fresh graduate") when the key is absent
                    job['job']['campusJobKind'] if 'campusJobKind' in job['job'] else '应届',
                    " ".join(job['job']['labels']),
                    job['comp']['compName'],
                    job['comp']['compIndustry'],
                    job['comp']['compScale'],
                )
                # Execute the SQL statement
                cursor.execute(insert_query, values)
        connection.commit()  # commit the transaction
    except Exception as e:
        print(e)
    finally:
        connection.close()  # close the database connection

This function stores the parsed job listings in MySQL. It opens a database connection, iterates over the job list, builds the parameters for the INSERT statement, and executes the insert for each row. Finally it commits the transaction and closes the connection.
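The values tuple calls process_salary, which this walk-through does not cover; in the full listing it keeps only the lower bound of a range such as "15-25k" and returns 0 for "薪资面议" (salary negotiable). As a variation, the sketch below returns both bounds. The name `parse_salary_range` is hypothetical, and it assumes salary strings contain an amount followed by "k", which is the only format the article's code handles.

```python
import re

# One number, optionally followed by "-number", then "k" (either case).
SALARY_PATTERN = re.compile(r"(\d+(?:\.\d+)?)(?:-(\d+(?:\.\d+)?))?k", re.IGNORECASE)


def parse_salary_range(text):
    """Return (low, high) with 'k' expanded to thousands, or (0.0, 0.0) if no amount is found."""
    match = SALARY_PATTERN.search(text)
    if match is None:
        return (0.0, 0.0)
    low = float(match.group(1)) * 1000
    high = float(match.group(2)) * 1000 if match.group(2) else low
    return (low, high)


print(parse_salary_range("15-25k"))
print(parse_salary_range("薪资面议"))
```

Storing both bounds would need an extra column in job_detail, so this is only worth adopting if the upper bound matters for later analysis.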

Program entry point:

if __name__ == '__main__':
    post_data()

This is the entry point of the program: it calls post_data() to start crawling and storing the data.

The complete code follows:

# -*- coding: utf-8 -*-
import time

import requests
import execjs
import pymysql

db_config = {
    'host': '127.0.0.1',
    'user': 'root',
    'password': '12345678',
    'database': 'work_data',
    'charset': 'utf8mb4',
    'cursorclass': pymysql.cursors.DictCursor
}


def read_js_code():
    # Load the JavaScript file and call its r() function to generate ckId
    with open('/Users/shareit/workspace/chart_show/demo.js', encoding='utf-8') as f:
        txt = f.read()
    js_code = execjs.compile(txt)
    ckId = js_code.call('r', 32)
    return ckId


def post_data():
    url = "https://api-c.liepin.com/api/com.liepin.searchfront4c.pc-search-job"
    # Content-Length is omitted: requests computes it from the request body
    headers = {
        'Accept': 'application/json, text/plain, */*',
        'Accept-Encoding': 'gzip, deflate, br',
        'Accept-Language': 'zh-CN,zh;q=0.9',
        'Connection': 'keep-alive',
        'Sec-Ch-Ua-Platform': 'macOS',
        'Content-Type': 'application/json;charset=UTF-8;',
        'Host': 'api-c.liepin.com',
        'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/119.0.0.0 Safari/537.36',
        'Origin': 'https://www.liepin.com',
        'Referer': 'https://www.liepin.com/',
        'Sec-Ch-Ua': '"Google Chrome";v="119", "Chromium";v="119", "Not?A_Brand";v="24"',
        'Sec-Ch-Ua-Mobile': '?0',
        'Sec-Fetch-Dest': 'empty',
        'Sec-Fetch-Mode': 'cors',
        'Sec-Fetch-Site': 'same-site',
        'X-Client-Type': 'web',
        'X-Fscp-Bi-Stat': '{"location": "https://www.liepin.com/zhaopin"}',
        'X-Fscp-Fe-Version': '',
        'X-Fscp-Std-Info': '{"client_id": "40108"}',
        'X-Fscp-Trace-Id': '52262313-e6ca-4cfd-bb67-41b4a32b8bb5',
        'X-Fscp-Version': '1.1',
        'X-Requested-With': 'XMLHttpRequest',
    }
    # Alternative set of industry codes (not used in this run):
    # industry_codes = ["H01$H0001", "H01$H0002", "H01$H0003", "H01$H0004", "H01$H0005", "H01$H0006",
    #                   "H01$H0007", "H01$H0008", "H01$H0009", "H01$H00010", "H02$H0018", "H02$H0019",
    #                   "H03$H0022", "H03$H0023", "H03$H0024", "H03$H0025", "H04$H0030", "H04$H0031",
    #                   "H04$H0032", "H05$H05", "H06$H06", "H07$H07", "H08$H08"]
    # Named industry_codes rather than list to avoid shadowing the built-in
    industry_codes = ["H01", "H02", "H03", "H04", "H05", "H06", "H07", "H08", "H09", "H10",
                      "H01$H0001", "H01$H0002", "H01$H0003", "H01$H0004", "H01$H0005",
                      "H01$H0006", "H01$H0007", "H01$H0008", "H01$H0009", "H01$H00010"]
    for name in industry_codes:
        print("-------{}---------".format(name))
        for i in range(1):  # only the first page
            print("------------page {}-----------".format(i))
            data = {"data": {
                "mainSearchPcConditionForm": {
                    "city": "410", "dq": "410", "pubTime": "", "currentPage": i, "pageSize": 40,
                    "key": "大数据",  # search keyword: "big data"
                    "suggestTag": "", "workYearCode": "0$1", "compId": "", "compName": "", "compTag": "",
                    "industry": name, "salary": "", "jobKind": "", "compScale": "", "compKind": "",
                    "compStage": "",
                    "eduLevel": ""},
                "passThroughForm": {
                    "scene": "page", "skId": "z33lm3jhwza7k1xjvcyn8lb8e9ghxx1b",
                    "fkId": "z33lm3jhwza7k1xjvcyn8lb8e9ghxx1b",
                    "ckId": read_js_code(),
                    'sfrom': 'search_job_pc'}}}
            response = requests.post(url=url, json=data, headers=headers)
            time.sleep(2)  # throttle the request rate
            parse_data(response)


def process_salary(salary):
    # '薪资面议' means "salary negotiable": store 0
    if '薪资面议' == salary:
        return 0
    salary = salary.split("k")[0]
    if '-' in salary:
        # For a range such as "15-25", keep the lower bound
        low, high = salary.split('-')
        return float(low) * 1000  # expand 'k' to thousands
    else:
        return float(salary) * 1000


def parse_data(response):
    try:
        jobCardList = response.json()['data']['data']['jobCardList']
        sync_data2db(jobCardList)
    except Exception as e:
        # Report the failure instead of silently skipping the page
        print("Failed to process response:", e)


def sync_data2db(jobCardList):
    connection = pymysql.connect(**db_config)
    try:
        with connection.cursor() as cursor:
            insert_query = "INSERT INTO job_detail(job_title,location,salary_amount,work_experience,tags,company_name,industry,company_size) VALUES (%s,%s,%s,%s,%s,%s,%s,%s)"
            for job in jobCardList:
                values = (
                    job['job']['title'],
                    job['job']['dq'].split("-")[0],
                    process_salary(job['job']['salary']),
                    # Falls back to '应届' ("fresh graduate") when the key is absent
                    job['job']['campusJobKind'] if 'campusJobKind' in job['job'] else '应届',
                    " ".join(job['job']['labels']),
                    job['comp']['compName'],
                    job['comp']['compIndustry'],
                    job['comp']['compScale'],
                )
                print(values)
                cursor.execute(insert_query, values)
        connection.commit()  # commit the transaction
    except Exception as e:
        print(e)
    finally:
        connection.close()  # close the database connection


if __name__ == '__main__':
    post_data()

The result of running the program is shown below.

