MMEngine: Introduction, Architecture, Application Examples, and Common Usage (Part 1)


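1. Introduction

MMEngine is a foundational library for training deep learning models based on PyTorch. It runs on Linux, Windows, and macOS. It provides a solid engineering foundation so that developers do not have to write redundant workflow code. It serves as the training engine for all OpenMMLab codebases, which support hundreds of algorithms across research areas. MMEngine can also be used in non-OpenMMLab projects.

It has three main features:

(1) A universal and powerful runner:

Supports training different tasks with a small amount of code, for example training ImageNet with only 80 lines of code (the original PyTorch example takes 400 lines).

Easily compatible with models from popular algorithm libraries such as TIMM, TorchVision, and Detectron2.

(2) An open architecture with unified interfaces:

Handles different tasks through a unified API: you can implement a method once and apply it to all compatible models.

Supports various backend devices through a simple, high-level abstraction. MMEngine currently supports model training on Nvidia CUDA, Mac MPS, AMD, MLU, and other devices.

(3) A customizable training process:

Defines a highly modular training engine with "Lego"-like composability.

Provides a rich set of components and strategies.

Gives full control over the training process through APIs at different levels.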

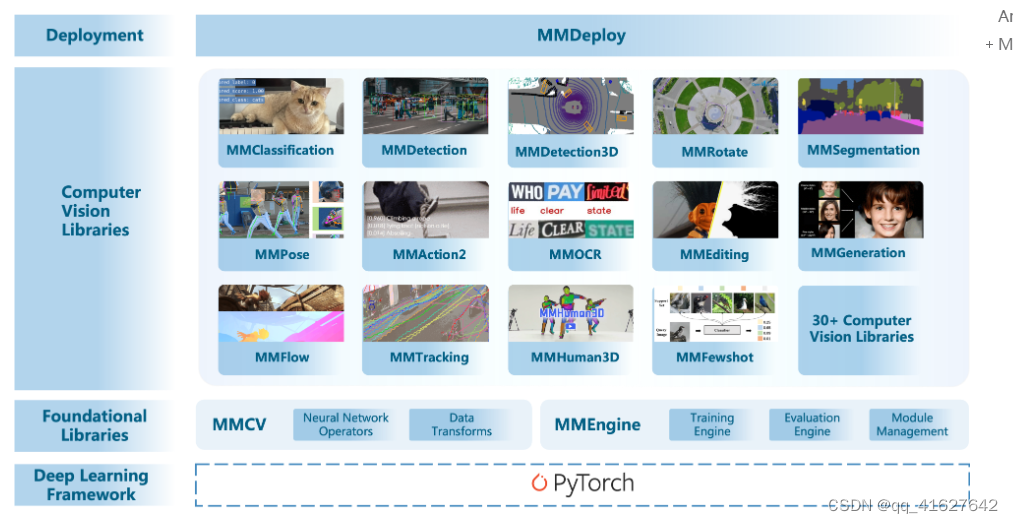

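2. Architecture

The figure above illustrates the hierarchy of MMEngine in OpenMMLab 2.0. MMEngine implements the next-generation training architecture for the OpenMMLab algorithm libraries and provides a unified execution foundation for more than 30 of them. Its core components are the training engine, the evaluation engine, and module management.

1. Core modules and related components

The core module of the training engine is the Runner. The Runner executes training, testing, and inference tasks and manages the components needed along the way. At specific points during these tasks, the Runner sets up Hooks that let users extend, insert, and run custom logic. The Runner mainly calls the following components to complete the training and inference loops:

(1) Dataset: builds the datasets for training, testing, and inference tasks and feeds data to the model. In practice it is wrapped by a PyTorch DataLoader, which launches multiple subprocesses to load data.

(2) Model: accepts data and outputs the loss during training; accepts data and makes predictions during testing and inference. In a distributed environment, the model is wrapped by a model wrapper (for example, MMDistributedDataParallel).

(3) Optimizer Wrapper: performs backpropagation to optimize the model during training, and supports mixed-precision training and gradient accumulation through a unified interface.

(4) Parameter Scheduler: dynamically adjusts optimizer hyperparameters such as learning rate and momentum during training.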
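The hook points described above can be pictured with a small, framework-free sketch. The names used here (MiniRunner, MiniHook, LossRecorderHook and their methods) are illustrative assumptions rather than MMEngine's actual classes; the sketch only shows the idea that a runner calls registered hooks at fixed positions in its loop.

```python
class MiniHook:
    """Base hook: subclasses override only the positions they care about."""

    def before_train_iter(self, runner, batch_idx):
        pass

    def after_train_iter(self, runner, batch_idx, loss):
        pass


class LossRecorderHook(MiniHook):
    """Example of custom logic: remember the loss of every iteration."""

    def __init__(self):
        self.history = []

    def after_train_iter(self, runner, batch_idx, loss):
        self.history.append((batch_idx, loss))


class MiniRunner:
    """Toy training loop that invokes hooks at fixed positions."""

    def __init__(self, batches, step_fn, hooks=()):
        self.batches = batches
        self.step_fn = step_fn  # stands in for model + optimizer wrapper
        self.hooks = list(hooks)

    def train(self):
        for batch_idx, batch in enumerate(self.batches):
            for hook in self.hooks:
                hook.before_train_iter(self, batch_idx)
            loss = self.step_fn(batch)
            for hook in self.hooks:
                hook.after_train_iter(self, batch_idx, loss)


recorder = LossRecorderHook()
runner = MiniRunner(batches=[1.0, 2.0, 3.0],
                    step_fn=lambda batch: batch * 0.5,
                    hooks=[recorder])
runner.train()
print(recorder.history)
```

Because the loop itself never changes, new behaviour (logging, checkpointing, visualization) can be added by registering another hook instead of editing the runner.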

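Between training intervals or in the testing phase, Metrics and the Evaluator are responsible for assessing model performance. The Evaluator evaluates the model's predictions against the dataset. Within the Evaluator there is an abstraction called Metrics, which computes various metrics such as recall and accuracy.

To keep interfaces unified, the communication interfaces among the evaluators, models, and data in the OpenMMLab 2.0 algorithm libraries are all encapsulated with Data Elements.

During training and inference, the components above can use the logging modules and the Visualizer to store and visualize structured and unstructured logs.

Logging Modules: manage the various log information produced while the Runner executes. The Message Hub shares data among components, runners, and log processors, while the Log Processor processes the log information. The processed logs are sent to the Logger and the Visualizer for management and display. The Visualizer is responsible for visualizing the model's feature maps, prediction results, and the structured logs generated during training. It supports multiple visualization backends such as TensorBoard and WandB.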

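2. Common base modules

MMEngine also implements various common base modules needed while running algorithm models, including:

Config: in the OpenMMLab algorithm libraries, users configure the training and testing processes and the related components by writing a configuration file (config).

Registry: manages modules with similar functionality within an algorithm library. Based on abstractions over algorithm library modules, MMEngine defines a set of root registries. Registries within an algorithm library can inherit from these root registries, which enables cross-library module calls and interactions. This allows modules to be integrated and used seamlessly across different algorithms within the OpenMMLab framework.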
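To make the registry idea concrete, here is a minimal sketch written from scratch. MiniRegistry, ROOT_MODELS, LIB_MODELS and the Linear class are hypothetical names for illustration; the real MMEngine Registry is considerably richer. The sketch only shows the two ideas from the paragraph above: building objects from a config dict with a type key, and falling back to a parent (root) registry.

```python
class MiniRegistry:
    """Maps a name to a class and builds instances from config dicts."""

    def __init__(self, name, parent=None):
        self.name = name
        self.parent = parent  # e.g. a root registry
        self._modules = {}

    def register_module(self):
        def decorator(cls):
            self._modules[cls.__name__] = cls
            return cls
        return decorator

    def get(self, key):
        if key in self._modules:
            return self._modules[key]
        if self.parent is not None:
            return self.parent.get(key)  # fall back to the parent registry
        raise KeyError(f'{key} is not registered in {self.name}')

    def build(self, cfg):
        args = dict(cfg)  # copy so the caller's config is left untouched
        cls = self.get(args.pop('type'))
        return cls(**args)


ROOT_MODELS = MiniRegistry('root_models')
LIB_MODELS = MiniRegistry('lib_models', parent=ROOT_MODELS)


@ROOT_MODELS.register_module()
class Linear:
    def __init__(self, in_features, out_features):
        self.shape = (in_features, out_features)


# The child registry does not know 'Linear', so it asks its parent.
layer = LIB_MODELS.build(dict(type='Linear', in_features=4, out_features=2))
print(type(layer).__name__, layer.shape)
```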

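File I/O: provides a unified, extensible interface for file read and write operations in the various modules, consistently supporting multiple file backends and formats.

Distributed communication primitives: handle communication between processes during distributed execution. The interface abstracts away the differences between distributed and non-distributed environments and automatically handles data devices and communication backends.

Other utilities: there are also utility modules such as ManagerMixin, which implements a way to create and access global variables. ManagerMixin is the base class of many globally accessible objects used by the Runner.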
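The "globally accessible object" pattern can be sketched as follows. NamedInstanceMixin and MessageBoard are made-up names, and this simplified version is an assumption about the general pattern, not a copy of ManagerMixin: one instance is kept per name, and any part of the program can fetch it again by that name.

```python
class NamedInstanceMixin:
    """Keeps one instance per name and remembers the most recent one."""

    _instances = None

    def __init_subclass__(cls, **kwargs):
        super().__init_subclass__(**kwargs)
        cls._instances = {}  # each subclass gets its own table

    def __init__(self, name):
        self.name = name

    @classmethod
    def get_instance(cls, name, **kwargs):
        if name not in cls._instances:
            cls._instances[name] = cls(name=name, **kwargs)
        return cls._instances[name]

    @classmethod
    def get_current_instance(cls):
        if not cls._instances:
            raise RuntimeError('no instance has been created yet')
        # dicts preserve insertion order, so the last key is the newest
        return cls._instances[next(reversed(cls._instances))]


class MessageBoard(NamedInstanceMixin):
    def __init__(self, name):
        super().__init__(name)
        self.scalars = {}


board = MessageBoard.get_instance('experiment_1')
board.scalars['loss'] = 0.25

# Elsewhere in the program, the same object is reachable by name.
same_board = MessageBoard.get_instance('experiment_1')
print(same_board is board, MessageBoard.get_current_instance().scalars)
```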

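3. Get started with MMEngine in 15 minutes

In this tutorial, we take training a ResNet-50 model on the CIFAR-10 dataset as an example. We will build a complete, configurable training and validation pipeline with MMEngine in only 80 lines of code. The process consists of the following steps:

(1) Build a model

(2) Build a dataset and DataLoader

(3) Build an evaluation metric

(4) Build a Runner and run the task

1. Build a model

First, we need to build a model. In MMEngine, a model should inherit from BaseModel. Besides the arguments that carry the dataset inputs, its forward method must accept an extra argument called mode. For reference, the BaseModel source reads: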

class BaseModel(BaseModule):
    """Base class for all algorithmic models.

    BaseModel implements the basic functions of the algorithmic model, such as
    weights initialize, batch inputs preprocess(see more information in
    :class:`BaseDataPreprocessor`), parse losses, and update model parameters.

    Subclasses inherit from BaseModel only need to implement the forward
    method, which implements the logic to calculate loss and predictions,
    then can be trained in the runner.

    Examples:
        >>> @MODELS.register_module()
        >>> class ToyModel(BaseModel):
        >>>
        >>>     def __init__(self):
        >>>         super().__init__()
        >>>         self.backbone = nn.Sequential()
        >>>         self.backbone.add_module('conv1', nn.Conv2d(3, 6, 5))
        >>>         self.backbone.add_module('pool', nn.MaxPool2d(2, 2))
        >>>         self.backbone.add_module('conv2', nn.Conv2d(6, 16, 5))
        >>>         self.backbone.add_module('fc1', nn.Linear(16 * 5 * 5, 120))
        >>>         self.backbone.add_module('fc2', nn.Linear(120, 84))
        >>>         self.backbone.add_module('fc3', nn.Linear(84, 10))
        >>>
        >>>         self.criterion = nn.CrossEntropyLoss()
        >>>
        >>>     def forward(self, batch_inputs, data_samples, mode='tensor'):
        >>>         data_samples = torch.stack(data_samples)
        >>>         if mode == 'tensor':
        >>>             return self.backbone(batch_inputs)
        >>>         elif mode == 'predict':
        >>>             feats = self.backbone(batch_inputs)
        >>>             predictions = torch.argmax(feats, 1)
        >>>             return predictions
        >>>         elif mode == 'loss':
        >>>             feats = self.backbone(batch_inputs)
        >>>             loss = self.criterion(feats, data_samples)
        >>>             return dict(loss=loss)

    Args:
        data_preprocessor (dict, optional): The pre-process config of
            :class:`BaseDataPreprocessor`.
        init_cfg (dict, optional): The weight initialized config for
            :class:`BaseModule`.

    Attributes:
        data_preprocessor (:obj:`BaseDataPreprocessor`): Used for
            pre-processing data sampled by dataloader to the format accepted by
            :meth:`forward`.
        init_cfg (dict, optional): Initialization config dict.
    """

    def __init__(self,
                 data_preprocessor: Optional[Union[dict, nn.Module]] = None,
                 init_cfg: Optional[dict] = None):
        super().__init__(init_cfg)
        if data_preprocessor is None:
            data_preprocessor = dict(type='BaseDataPreprocessor')
        if isinstance(data_preprocessor, nn.Module):
            self.data_preprocessor = data_preprocessor
        elif isinstance(data_preprocessor, dict):
            self.data_preprocessor = MODELS.build(data_preprocessor)
        else:
            raise TypeError('data_preprocessor should be a `dict` or '
                            f'`nn.Module` instance, but got '
                            f'{type(data_preprocessor)}')

    def train_step(self, data: Union[dict, tuple, list],
                   optim_wrapper: OptimWrapper) -> Dict[str, torch.Tensor]:
        """Implements the default model training process including
        preprocessing, model forward propagation, loss calculation,
        optimization, and back-propagation.

        During non-distributed training. If subclasses do not override the
        :meth:`train_step`, :class:`EpochBasedTrainLoop` or
        :class:`IterBasedTrainLoop` will call this method to update model
        parameters. The default parameter update process is as follows:

        1. Calls ``self.data_processor(data, training=False)`` to collect
           batch_inputs and corresponding data_samples(labels).
        2. Calls ``self(batch_inputs, data_samples, mode='loss')`` to get raw
           loss
        3. Calls ``self.parse_losses`` to get ``parsed_losses`` tensor used to
           backward and dict of loss tensor used to log messages.
        4. Calls ``optim_wrapper.update_params(loss)`` to update model.

        Args:
            data (dict or tuple or list): Data sampled from dataset.
            optim_wrapper (OptimWrapper): OptimWrapper instance
                used to update model parameters.

        Returns:
            Dict[str, torch.Tensor]: A ``dict`` of tensor for logging.
        """
        # Enable automatic mixed precision training context.
        with optim_wrapper.optim_context(self):
            data = self.data_preprocessor(data, True)
            losses = self._run_forward(data, mode='loss')  # type: ignore
        parsed_losses, log_vars = self.parse_losses(losses)  # type: ignore
        optim_wrapper.update_params(parsed_losses)
        return log_vars

    def val_step(self, data: Union[tuple, dict, list]) -> list:
        """Gets the predictions of given data.

        Calls ``self.data_preprocessor(data, False)`` and
        ``self(inputs, data_sample, mode='predict')`` in order. Return the
        predictions which will be passed to evaluator.

        Args:
            data (dict or tuple or list): Data sampled from dataset.

        Returns:
            list: The predictions of given data.
        """
        data = self.data_preprocessor(data, False)
        return self._run_forward(data, mode='predict')  # type: ignore

    def test_step(self, data: Union[dict, tuple, list]) -> list:
        """``BaseModel`` implements ``test_step`` the same as ``val_step``.

        Args:
            data (dict or tuple or list): Data sampled from dataset.

        Returns:
            list: The predictions of given data.
        """
        data = self.data_preprocessor(data, False)
        return self._run_forward(data, mode='predict')  # type: ignore

    def parse_losses(
        self, losses: Dict[str, torch.Tensor]
    ) -> Tuple[torch.Tensor, Dict[str, torch.Tensor]]:
        """Parses the raw outputs (losses) of the network.

        Args:
            losses (dict): Raw output of the network, which usually contain
                losses and other necessary information.

        Returns:
            tuple[Tensor, dict]: There are two elements. The first is the
            loss tensor passed to optim_wrapper which may be a weighted sum
            of all losses, and the second is log_vars which will be sent to
            the logger.
        """
        log_vars = []
        for loss_name, loss_value in losses.items():
            if isinstance(loss_value, torch.Tensor):
                log_vars.append([loss_name, loss_value.mean()])
            elif is_list_of(loss_value, torch.Tensor):
                log_vars.append(
                    [loss_name,
                     sum(_loss.mean() for _loss in loss_value)])
            else:
                raise TypeError(
                    f'{loss_name} is not a tensor or list of tensors')

        loss = sum(value for key, value in log_vars if 'loss' in key)
        log_vars.insert(0, ['loss', loss])
        log_vars = OrderedDict(log_vars)  # type: ignore

        return loss, log_vars  # type: ignore

    def to(self, *args, **kwargs) -> nn.Module:
        """Overrides this method to call :meth:`BaseDataPreprocessor.to`
        additionally.

        Returns:
            nn.Module: The model itself.
        """
        # Since Torch has not officially merged
        # the npu-related fields, using the _parse_to function
        # directly will cause the NPU to not be found.
        # Here, the input parameters are processed to avoid errors.
        if args and isinstance(args[0], str) and 'npu' in args[0]:
            args = tuple(
                [list(args)[0].replace('npu', torch.npu.native_device)])
        if kwargs and 'npu' in str(kwargs.get('device', '')):
            kwargs['device'] = kwargs['device'].replace(
                'npu', torch.npu.native_device)

        device = torch._C._nn._parse_to(*args, **kwargs)[0]
        if device is not None:
            self._set_device(torch.device(device))
        return super().to(*args, **kwargs)

    def cuda(
        self,
        device: Optional[Union[int, str, torch.device]] = None,
    ) -> nn.Module:
        """Overrides this method to call :meth:`BaseDataPreprocessor.cuda`
        additionally.

        Returns:
            nn.Module: The model itself.
        """
        if device is None or isinstance(device, int):
            device = torch.device('cuda', index=device)
        self._set_device(torch.device(device))
        return super().cuda(device)

    def mlu(
        self,
        device: Union[int, str, torch.device, None] = None,
    ) -> nn.Module:
        """Overrides this method to call :meth:`BaseDataPreprocessor.mlu`
        additionally.

        Returns:
            nn.Module: The model itself.
        """
        device = torch.device('mlu', torch.mlu.current_device())
        self._set_device(device)
        return super().mlu()

    def npu(
        self,
        device: Union[int, str, torch.device, None] = None,
    ) -> nn.Module:
        """Overrides this method to call :meth:`BaseDataPreprocessor.npu`
        additionally.

        Returns:
            nn.Module: The model itself.

        Note:
            This generation of NPU(Ascend910) does not support
            the use of multiple cards in a single process,
            so the index here needs to be consistent with the default device
        """
        device = torch.npu.current_device()
        self._set_device(device)
        return super().npu()

    def cpu(self, *args, **kwargs) -> nn.Module:
        """Overrides this method to call :meth:`BaseDataPreprocessor.cpu`
        additionally.

        Returns:
            nn.Module: The model itself.
        """
        self._set_device(torch.device('cpu'))
        return super().cpu()

    def _set_device(self, device: torch.device) -> None:
        """Recursively set device for `BaseDataPreprocessor` instance.

        Args:
            device (torch.device): the desired device of the parameters and
                buffers in this module.
        """

        def apply_fn(module):
            if not isinstance(module, BaseDataPreprocessor):
                return
            if device is not None:
                module._device = device

        self.apply(apply_fn)

    @abstractmethod
    def forward(self,
                inputs: torch.Tensor,
                data_samples: Optional[list] = None,
                mode: str = 'tensor') -> Union[Dict[str, torch.Tensor], list]:
        """Returns losses or predictions of training, validation, testing, and
        simple inference process.

        ``forward`` method of BaseModel is an abstract method, its subclasses
        must implement this method.

        Accepts ``batch_inputs`` and ``data_sample`` processed by
        :attr:`data_preprocessor`, and returns results according to mode
        arguments.

        During non-distributed training, validation, and testing process,
        ``forward`` will be called by ``BaseModel.train_step``,
        ``BaseModel.val_step`` and ``BaseModel.test_step`` directly.

        During distributed data parallel training process,
        ``MMSeparateDistributedDataParallel.train_step`` will first call
        ``DistributedDataParallel.forward`` to enable automatic
        gradient synchronization, and then call ``forward`` to get training
        loss.

        Args:
            inputs (torch.Tensor): batch input tensor collated by
                :attr:`data_preprocessor`.
            data_samples (list, optional):
                data samples collated by :attr:`data_preprocessor`.
            mode (str): mode should be one of ``loss``, ``predict`` and
                ``tensor``

                - ``loss``: Called by ``train_step`` and return loss ``dict``
                  used for logging
                - ``predict``: Called by ``val_step`` and ``test_step``
                  and return list of results used for computing metric.
                - ``tensor``: Called by custom use to get ``Tensor`` type
                  results.

        Returns:
            dict or list:
                - If ``mode == loss``, return a ``dict`` of loss tensor used
                  for backward and logging.
                - If ``mode == predict``, return a ``list`` of inference
                  results.
                - If ``mode == tensor``, return a tensor or ``tuple`` of tensor
                  or ``dict`` of tensor for custom use.
        """

    def _run_forward(self, data: Union[dict, tuple, list],
                     mode: str) -> Union[Dict[str, torch.Tensor], list]:
        """Unpacks data for :meth:`forward`

        Args:
            data (dict or tuple or list): Data sampled from dataset.
            mode (str): Mode of forward.

        Returns:
            dict or list: Results of training or testing mode.
        """
        if isinstance(data, dict):
            results = self(**data, mode=mode)
        elif isinstance(data, (list, tuple)):
            results = self(*data, mode=mode)
        else:
            raise TypeError('Output of `data_preprocessor` should be '
                            f'list, tuple or dict, but got {type(data)}')
        return results
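The loss-parsing step in the listing above is easier to follow with plain numbers. The sketch below mirrors the logic of parse_losses using lists of Python floats in place of tensors (an assumption made only to keep it dependency-free; parse_losses_plain is a hypothetical name): each entry is reduced to a mean, and only keys containing 'loss' are summed into the total.

```python
from collections import OrderedDict


def mean(values):
    return sum(values) / len(values)


def parse_losses_plain(losses):
    """Mirror of the parse_losses logic using lists of floats."""
    log_vars = []
    for name, value in losses.items():
        if value and isinstance(value[0], list):
            # a list of "tensors": sum of the individual means
            log_vars.append([name, sum(mean(v) for v in value)])
        else:
            log_vars.append([name, mean(value)])
    # only entries whose key contains 'loss' contribute to the total
    total = sum(v for k, v in log_vars if 'loss' in k)
    log_vars.insert(0, ['loss', total])
    return total, OrderedDict(log_vars)


total, log_vars = parse_losses_plain({
    'loss_cls': [1.0, 3.0],            # mean 2.0
    'loss_bbox': [[0.5, 1.5], [2.0]],  # 1.0 + 2.0 = 3.0
    'acc': [0.5, 1.0],                 # logged, but not part of the loss
})
print(total, dict(log_vars))
```

This is why auxiliary values such as an accuracy can be returned from forward for logging without affecting the gradient, as long as their key does not contain 'loss'.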

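For training, mode is "loss", and the forward method should return a dict containing the key "loss".

For validation, mode is "predict", and the forward method should return results containing both the predictions and the labels.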

import torch.nn.functional as F
import torchvision
from mmengine.model import BaseModel


class MMResNet50(BaseModel):

    def __init__(self):
        super().__init__()
        self.resnet = torchvision.models.resnet50()

    def forward(self, imgs, labels, mode):
        x = self.resnet(imgs)
        if mode == 'loss':
            return {'loss': F.cross_entropy(x, labels)}
        elif mode == 'predict':
            return x, labels
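To see how the mode argument ties the training and validation steps together without installing anything, consider this dependency-free toy. ToyModel, its single weight w, and the hand-written gradient are assumptions for illustration; in MMEngine the optimizer wrapper performs the update. The routing is the same idea: the training step asks forward for a loss, the validation step asks it for predictions.

```python
class ToyModel:
    """A one-parameter model y = w * x with a squared-error loss."""

    def __init__(self, w=0.0):
        self.w = w

    def forward(self, inputs, labels, mode):
        preds = [self.w * x for x in inputs]
        if mode == 'loss':
            errors = [(p - y) ** 2 for p, y in zip(preds, labels)]
            return {'loss': sum(errors) / len(errors)}
        elif mode == 'predict':
            return preds, labels
        raise ValueError(f'unsupported mode: {mode}')

    def train_step(self, batch, lr=0.1):
        inputs, labels = batch
        loss = self.forward(inputs, labels, mode='loss')['loss']
        # analytic gradient of the mean squared error w.r.t. w
        grad = sum(2 * (self.w * x - y) * x
                   for x, y in zip(inputs, labels)) / len(inputs)
        self.w -= lr * grad
        return {'loss': loss}

    def val_step(self, batch):
        inputs, labels = batch
        return self.forward(inputs, labels, mode='predict')


model = ToyModel()
batch = ([1.0, 2.0], [2.0, 4.0])  # targets follow y = 2x
first = model.train_step(batch)
for _ in range(50):
    last = model.train_step(batch)
preds, labels = model.val_step(batch)
print(first['loss'], last['loss'], preds)
```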

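2. Build a dataset and DataLoader

Next, we need to create the Dataset and DataLoader for training and validation. For basic training and validation, we can simply use the built-in datasets supported by TorchVision.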

For reference, the signature of torch.utils.data.DataLoader is:

DataLoader(dataset, batch_size=1, shuffle=False, sampler=None,
           batch_sampler=None, num_workers=0, collate_fn=None,
           pin_memory=False, drop_last=False, timeout=0,
           worker_init_fn=None, *, prefetch_factor=2,
           persistent_workers=False)

The training and validation dataloaders are built as follows:

import torchvision
import torchvision.transforms as transforms
from torch.utils.data import DataLoader

norm_cfg = dict(mean=[0.491, 0.482, 0.447], std=[0.202, 0.199, 0.201])

# Training set: random crop and horizontal flip for augmentation.
train_dataloader = DataLoader(batch_size=32,
                              shuffle=True,
                              dataset=torchvision.datasets.CIFAR10(
                                  'data/cifar10',
                                  train=True,
                                  download=True,
                                  transform=transforms.Compose([
                                      transforms.RandomCrop(32, padding=4),
                                      transforms.RandomHorizontalFlip(),
                                      transforms.ToTensor(),
                                      transforms.Normalize(**norm_cfg)
                                  ])))

# Validation set: no augmentation, only tensor conversion and normalization.
val_dataloader = DataLoader(batch_size=32,
                            shuffle=False,
                            dataset=torchvision.datasets.CIFAR10(
                                'data/cifar10',
                                train=False,
                                download=True,
                                transform=transforms.Compose([
                                    transforms.ToTensor(),
                                    transforms.Normalize(**norm_cfg)
                                ])))

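3. Build an evaluation metric

To validate and test the model, we need to define a metric called accuracy. The metric must inherit from BaseMetric and implement the process and compute_metrics methods. The process method accepts the dataset output and the model outputs produced with mode="predict"; in this scenario, each call receives one batch of data. After processing the batch, we save the information to the self.results attribute. compute_metrics accepts a results argument, which holds all the information saved by process (in a distributed environment, results is gathered from all processes). It uses this information to compute and return a dict holding the evaluation results. For reference, the BaseMetric source (together with DumpResults and the _to_cpu helper) reads: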

class BaseMetric(metaclass=ABCMeta):
    """Base class for a metric.

    The metric first processes each batch of data_samples and predictions,
    and appends the processed results to the results list. Then it
    collects all results together from all ranks if distributed training
    is used. Finally, it computes the metrics of the entire dataset.

    A subclass of class:`BaseMetric` should assign a meaningful value to the
    class attribute `default_prefix`. See the argument `prefix` for details.

    Args:
        collect_device (str): Device name used for collecting results from
            different ranks during distributed training. Must be 'cpu' or
            'gpu'. Defaults to 'cpu'.
        prefix (str, optional): The prefix that will be added in the metric
            names to disambiguate homonymous metrics of different evaluators.
            If prefix is not provided in the argument, self.default_prefix
            will be used instead. Default: None
        collect_dir: (str, optional): Synchronize directory for collecting data
            from different ranks. This argument should only be configured when
            ``collect_device`` is 'cpu'. Defaults to None.
            `New in version 0.7.3.`
    """

    default_prefix: Optional[str] = None

    def __init__(self,
                 collect_device: str = 'cpu',
                 prefix: Optional[str] = None,
                 collect_dir: Optional[str] = None) -> None:
        if collect_dir is not None and collect_device != 'cpu':
            raise ValueError('`collec_dir` could only be configured when '
                             "`collect_device='cpu'`")

        self._dataset_meta: Union[None, dict] = None
        self.collect_device = collect_device
        self.results: List[Any] = []
        self.prefix = prefix or self.default_prefix
        self.collect_dir = collect_dir

        if self.prefix is None:
            print_log(
                'The prefix is not set in metric class '
                f'{self.__class__.__name__}.',
                logger='current',
                level=logging.WARNING)

    @property
    def dataset_meta(self) -> Optional[dict]:
        """Optional[dict]: Meta info of the dataset."""
        return self._dataset_meta

    @dataset_meta.setter
    def dataset_meta(self, dataset_meta: dict) -> None:
        """Set the dataset meta info to the metric."""
        self._dataset_meta = dataset_meta

    @abstractmethod
    def process(self, data_batch: Any, data_samples: Sequence[dict]) -> None:
        """Process one batch of data samples and predictions. The processed
        results should be stored in ``self.results``, which will be used to
        compute the metrics when all batches have been processed.

        Args:
            data_batch (Any): A batch of data from the dataloader.
            data_samples (Sequence[dict]): A batch of outputs from
                the model.
        """

    @abstractmethod
    def compute_metrics(self, results: list) -> dict:
        """Compute the metrics from processed results.

        Args:
            results (list): The processed results of each batch.

        Returns:
            dict: The computed metrics. The keys are the names of the metrics,
            and the values are corresponding results.
        """

    def evaluate(self, size: int) -> dict:
        """Evaluate the model performance of the whole dataset after processing
        all batches.

        Args:
            size (int): Length of the entire validation dataset. When batch
                size > 1, the dataloader may pad some data samples to make
                sure all ranks have the same length of dataset slice. The
                ``collect_results`` function will drop the padded data based on
                this size.

        Returns:
            dict: Evaluation metrics dict on the val dataset. The keys are the
            names of the metrics, and the values are corresponding results.
        """
        if len(self.results) == 0:
            print_log(
                f'{self.__class__.__name__} got empty `self.results`. Please '
                'ensure that the processed results are properly added into '
                '`self.results` in `process` method.',
                logger='current',
                level=logging.WARNING)

        if self.collect_device == 'cpu':
            results = collect_results(
                self.results,
                size,
                self.collect_device,
                tmpdir=self.collect_dir)
        else:
            results = collect_results(self.results, size, self.collect_device)

        if is_main_process():
            # cast all tensors in results list to cpu
            results = _to_cpu(results)
            _metrics = self.compute_metrics(results)  # type: ignore
            # Add prefix to metric names
            if self.prefix:
                _metrics = {
                    '/'.join((self.prefix, k)): v
                    for k, v in _metrics.items()
                }
            metrics = [_metrics]
        else:
            metrics = [None]  # type: ignore

        broadcast_object_list(metrics)

        # reset the results list
        self.results.clear()
        return metrics[0]


@METRICS.register_module()
class DumpResults(BaseMetric):
    """Dump model predictions to a pickle file for offline evaluation.

    Args:
        out_file_path (str): Path of the dumped file. Must end with '.pkl'
            or '.pickle'.
        collect_device (str): Device name used for collecting results from
            different ranks during distributed training. Must be 'cpu' or
            'gpu'. Defaults to 'cpu'.
        collect_dir: (str, optional): Synchronize directory for collecting data
            from different ranks. This argument should only be configured when
            ``collect_device`` is 'cpu'. Defaults to None.
            `New in version 0.7.3.`
    """

    def __init__(self,
                 out_file_path: str,
                 collect_device: str = 'cpu',
                 collect_dir: Optional[str] = None) -> None:
        super().__init__(
            collect_device=collect_device, collect_dir=collect_dir)
        if not out_file_path.endswith(('.pkl', '.pickle')):
            raise ValueError('The output file must be a pkl file.')
        self.out_file_path = out_file_path

    def process(self, data_batch: Any, predictions: Sequence[dict]) -> None:
        """transfer tensors in predictions to CPU."""
        self.results.extend(_to_cpu(predictions))

    def compute_metrics(self, results: list) -> dict:
        """dump the prediction results to a pickle file."""
        dump(results, self.out_file_path)
        print_log(
            f'Results has been saved to {self.out_file_path}.',
            logger='current')
        return {}


def _to_cpu(data: Any) -> Any:
    """transfer all tensors and BaseDataElement to cpu."""
    if isinstance(data, (Tensor, BaseDataElement)):
        return data.to('cpu')
    elif isinstance(data, list):
        return [_to_cpu(d) for d in data]
    elif isinstance(data, tuple):
        return tuple(_to_cpu(d) for d in data)
    elif isinstance(data, dict):
        return {k: _to_cpu(v) for k, v in data.items()}
    else:
        return data

from mmengine.evaluator import BaseMetric


class Accuracy(BaseMetric):

    def process(self, data_batch, data_samples):
        score, gt = data_samples
        # save the intermediate result of a batch to `self.results`
        self.results.append({
            'batch_size': len(gt),
            'correct': (score.argmax(dim=1) == gt).sum().cpu(),
        })

    def compute_metrics(self, results):
        total_correct = sum(item['correct'] for item in results)
        total_size = sum(item['batch_size'] for item in results)
        # return the dict containing the eval results;
        # each key is the name of a metric
        return dict(accuracy=100 * total_correct / total_size)
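The process / compute_metrics split can also be followed without tensors or a distributed setup. The sketch below is a simplified stand-in: MiniMetric and PlainAccuracy are hypothetical classes, and the gathering across processes that the real evaluate performs is left out. It keeps the same three stages: accumulate per batch, reduce once, prefix the metric names.

```python
class MiniMetric:
    """Collects per-batch results, then reduces them once at the end."""

    default_prefix = None

    def __init__(self, prefix=None):
        self.results = []
        self.prefix = prefix or self.default_prefix

    def process(self, data_batch, data_samples):
        raise NotImplementedError

    def compute_metrics(self, results):
        raise NotImplementedError

    def evaluate(self):
        metrics = self.compute_metrics(self.results)
        if self.prefix:
            metrics = {f'{self.prefix}/{k}': v for k, v in metrics.items()}
        self.results.clear()  # ready for the next validation round
        return metrics


class PlainAccuracy(MiniMetric):
    default_prefix = 'val'

    def process(self, data_batch, data_samples):
        preds, labels = data_samples
        correct = sum(int(p == y) for p, y in zip(preds, labels))
        self.results.append({'batch_size': len(labels), 'correct': correct})

    def compute_metrics(self, results):
        total_correct = sum(item['correct'] for item in results)
        total_size = sum(item['batch_size'] for item in results)
        return {'accuracy': 100 * total_correct / total_size}


metric = PlainAccuracy()
metric.process(None, ([1, 0, 2], [1, 1, 2]))  # 2 of 3 correct
metric.process(None, ([0], [0]))              # 1 of 1 correct
scores = metric.evaluate()
print(scores)
```

Storing counts per batch rather than a per-batch accuracy keeps the final value correct even when the last batch is smaller than the others.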

4. Build a Runner and run the task

Now we can build a Runner with the Model, DataLoader, and Metrics defined above, plus some other configs, as shown below:

from torch.optim import SGD
from mmengine.runner import Runner

runner = Runner(
    # the model used for training and validation.
    # Needs to meet specific interface requirements
    model=MMResNet50(),
    # working directory which saves training logs and weight files
    work_dir='./work_dir',
    # train dataloader needs to meet the PyTorch data loader protocol
    train_dataloader=train_dataloader,
    # optimizer wrapper for optimization with additional features like
    # AMP, gradient accumulation, etc.
    optim_wrapper=dict(optimizer=dict(type=SGD, lr=0.001, momentum=0.9)),
    # training configs for specifying training epochs, validation intervals, etc.
    train_cfg=dict(by_epoch=True, max_epochs=5, val_interval=1),
    # validation dataloader also needs to meet the PyTorch data loader protocol
    val_dataloader=val_dataloader,
    # validation configs for specifying additional parameters required for validation
    val_cfg=dict(),
    # validation evaluator. The Accuracy metric defined above is used here
    val_evaluator=dict(type=Accuracy),
)

runner.train()

Finally, let us put all the code above together into one complete script that uses the MMEngine Runner for training and validation:

import torch.nn.functional as F
import torchvision
import torchvision.transforms as transforms
from torch.optim import SGD
from torch.utils.data import DataLoader

from mmengine.evaluator import BaseMetric
from mmengine.model import BaseModel
from mmengine.runner import Runner


class MMResNet50(BaseModel):

    def __init__(self):
        super().__init__()
        self.resnet = torchvision.models.resnet50()

    def forward(self, imgs, labels, mode):
        x = self.resnet(imgs)
        if mode == 'loss':
            return {'loss': F.cross_entropy(x, labels)}
        elif mode == 'predict':
            return x, labels


class Accuracy(BaseMetric):

    def process(self, data_batch, data_samples):
        score, gt = data_samples
        self.results.append({
            'batch_size': len(gt),
            'correct': (score.argmax(dim=1) == gt).sum().cpu(),
        })

    def compute_metrics(self, results):
        total_correct = sum(item['correct'] for item in results)
        total_size = sum(item['batch_size'] for item in results)
        return dict(accuracy=100 * total_correct / total_size)


norm_cfg = dict(mean=[0.491, 0.482, 0.447], std=[0.202, 0.199, 0.201])
train_dataloader = DataLoader(batch_size=32,
                              shuffle=True,
                              dataset=torchvision.datasets.CIFAR10(
                                  'data/cifar10',
                                  train=True,
                                  download=True,
                                  transform=transforms.Compose([
                                      transforms.RandomCrop(32, padding=4),
                                      transforms.RandomHorizontalFlip(),
                                      transforms.ToTensor(),
                                      transforms.Normalize(**norm_cfg)
                                  ])))

val_dataloader = DataLoader(batch_size=32,
                            shuffle=False,
                            dataset=torchvision.datasets.CIFAR10(
                                'data/cifar10',
                                train=False,
                                download=True,
                                transform=transforms.Compose([
                                    transforms.ToTensor(),
                                    transforms.Normalize(**norm_cfg)
                                ])))

runner = Runner(
    model=MMResNet50(),
    work_dir='./work_dir',
    train_dataloader=train_dataloader,
    optim_wrapper=dict(optimizer=dict(type=SGD, lr=0.001, momentum=0.9)),
    train_cfg=dict(by_epoch=True, max_epochs=5, val_interval=1),
    val_dataloader=val_dataloader,
    val_cfg=dict(),
    val_evaluator=dict(type=Accuracy),
)

runner.train()

The training log will look similar to this:

2022/08/22 15:51:53 - mmengine - INFO -

------------------------------------------------------------

System environment:

sys.platform: linux

Python: 3.8.12 (default, Oct 12 2021, 13:49:34) [GCC 7.5.0]

CUDA available: True

numpy_random_seed: 1513128759

GPU 0: NVIDIA GeForce GTX 1660 SUPER

CUDA_HOME: /usr/local/cuda

...

2022/08/22 15:51:54 - mmengine - INFO - Checkpoints will be saved to /home/mazerun/work_dir by HardDiskBackend.

2022/08/22 15:51:56 - mmengine - INFO - Epoch(train) [1][10/1563] lr: 1.0000e-03 eta: 0:18:23 time: 0.1414 data_time: 0.0077 memory: 392 loss: 5.3465

2022/08/22 15:51:56 - mmengine - INFO - Epoch(train) [1][20/1563] lr: 1.0000e-03 eta: 0:11:29 time: 0.0354 data_time: 0.0077 memory: 392 loss: 2.7734

2022/08/22 15:51:56 - mmengine - INFO - Epoch(train) [1][30/1563] lr: 1.0000e-03 eta: 0:09:10 time: 0.0352 data_time: 0.0076 memory: 392 loss: 2.7789

2022/08/22 15:51:57 - mmengine - INFO - Epoch(train) [1][40/1563] lr: 1.0000e-03 eta: 0:08:00 time: 0.0353 data_time: 0.0073 memory: 392 loss: 2.5725

2022/08/22 15:51:57 - mmengine - INFO - Epoch(train) [1][50/1563] lr: 1.0000e-03 eta: 0:07:17 time: 0.0347 data_time: 0.0073 memory: 392 loss: 2.7382

2022/08/22 15:51:57 - mmengine - INFO - Epoch(train) [1][60/1563] lr: 1.0000e-03 eta: 0:06:49 time: 0.0347 data_time: 0.0072 memory: 392 loss: 2.5956

2022/08/22 15:51:58 - mmengine - INFO - Epoch(train) [1][70/1563] lr: 1.0000e-03 eta: 0:06:28 time: 0.0348 data_time: 0.0072 memory: 392 loss: 2.7351

...

2022/08/22 15:52:50 - mmengine - INFO - Saving checkpoint at 1 epochs

2022/08/22 15:52:51 - mmengine - INFO - Epoch(val) [1][10/313] eta: 0:00:03 time: 0.0122 data_time: 0.0047 memory: 392

2022/08/22 15:52:51 - mmengine - INFO - Epoch(val) [1][20/313] eta: 0:00:03 time: 0.0122 data_time: 0.0047 memory: 308

2022/08/22 15:52:51 - mmengine - INFO - Epoch(val) [1][30/313] eta: 0:00:03 time: 0.0123 data_time: 0.0047 memory: 308

...

2022/08/22 15:52:54 - mmengine - INFO - Epoch(val) [1][313/313] accuracy: 35.7000

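4. Application examples

1. Train a segmentation model

This segmentation example is divided into the following steps:

Download the CamVid dataset

Implement the CamVid dataset

Implement the segmentation model

Train with the Runner

1. Download the CamVid dataset

First, download the CamVid dataset from OpenDataLab: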

# https://opendatalab.com/CamVid
# Configure install
pip install opendatalab
# Upgraded version
pip install -U opendatalab
# Login
odl login
# Download this dataset
mkdir data
odl get CamVid -d data
# Preprocess data in Linux. You should extract the files to data manually in
# Windows
tar -xzvf data/CamVid/raw/CamVid.tar.gz.00 -C ./data

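2. Implement the CamVid dataset

Here we implement the CamVid class, which inherits from VisionDataset. In this class we override the __getitem__ and __len__ methods so that each index returns an image together with a dict holding its labels and file paths. We also build the color_to_class dictionary to map mask colors to class indices.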

import csv
import os

import numpy as np
from PIL import Image
from torchvision.datasets import VisionDataset


def create_palette(csv_filepath):
    """Read class_dict.csv and map each (r, g, b) color to a class index."""
    color_to_class = {}
    with open(csv_filepath, newline='') as csvfile:
        reader = csv.DictReader(csvfile)
        for idx, row in enumerate(reader):
            r, g, b = int(row['r']), int(row['g']), int(row['b'])
            color_to_class[(r, g, b)] = idx
    return color_to_class


class CamVid(VisionDataset):

    def __init__(self,
                 root,
                 img_folder,
                 mask_folder,
                 transform=None,
                 target_transform=None):
        super().__init__(
            root, transform=transform, target_transform=target_transform)
        self.img_folder = img_folder
        self.mask_folder = mask_folder
        self.images = list(
            sorted(os.listdir(os.path.join(self.root, img_folder))))
        self.masks = list(
            sorted(os.listdir(os.path.join(self.root, mask_folder))))
        self.color_to_class = create_palette(
            os.path.join(self.root, 'class_dict.csv'))

    def __getitem__(self, index):
        img_path = os.path.join(self.root, self.img_folder, self.images[index])
        mask_path = os.path.join(self.root, self.mask_folder,
                                 self.masks[index])

        img = Image.open(img_path).convert('RGB')
        mask = Image.open(mask_path).convert('RGB')  # Convert to RGB

        if self.transform is not None:
            img = self.transform(img)

        # Convert the RGB values to class indices.
        # Widen from uint8 to int64 first so that packing the three channels
        # into one integer cannot overflow.
        mask = np.array(mask, dtype=np.int64)
        mask = mask[:, :, 0] * 65536 + mask[:, :, 1] * 256 + mask[:, :, 2]
        labels = np.zeros_like(mask, dtype=np.int64)
        for color, class_index in self.color_to_class.items():
            rgb = color[0] * 65536 + color[1] * 256 + color[2]
            labels[mask == rgb] = class_index

        if self.target_transform is not None:
            labels = self.target_transform(labels)

        data_samples = dict(
            labels=labels, img_path=img_path, mask_path=mask_path)
        return img, data_samples

    def __len__(self):
        return len(self.images)
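The color-to-class conversion in __getitem__ packs each (r, g, b) triple into a single integer so that a whole mask can be compared against one value per class. The same idea on a tiny hand-made mask, using plain Python lists, looks like this. The two-color palette and the helper names pack_rgb and mask_to_labels are hypothetical; the real palette comes from class_dict.csv.

```python
def pack_rgb(r, g, b):
    """Pack three 0-255 channel values into one integer key."""
    return r * 65536 + g * 256 + b


def mask_to_labels(mask, color_to_class, default=0):
    """mask is a 2-D list of (r, g, b) tuples; returns a 2-D list of ints."""
    packed_to_class = {
        pack_rgb(*color): idx for color, idx in color_to_class.items()
    }
    return [[packed_to_class.get(pack_rgb(*pixel), default) for pixel in row]
            for row in mask]


# Hypothetical two-color palette, not the real CamVid class_dict.csv.
palette = {(0, 0, 0): 0, (128, 0, 0): 1}
mask = [[(0, 0, 0), (128, 0, 0)],
        [(128, 0, 0), (7, 7, 7)]]  # last color is not in the palette
labels = mask_to_labels(mask, palette)
print(labels)
```

As in the dataset class, a pixel whose color is missing from the palette falls back to class index 0.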

We use the CamVid dataset to create train_dataloader and val_dataloader, which serve as the data loaders for training and validation in the subsequent Runner.

import numpy as np
import torch
import torchvision.transforms as transforms

norm_cfg = dict(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])
transform = transforms.Compose(
    [transforms.ToTensor(),
     transforms.Normalize(**norm_cfg)])

# Convert the label array produced by the dataset into a long tensor.
target_transform = transforms.Lambda(
    lambda x: torch.tensor(np.array(x), dtype=torch.long))

train_set = CamVid(
    'data/CamVid',
    img_folder='train',
    mask_folder='train_labels',
    transform=transform,
    target_transform=target_transform)

valid_set = CamVid(
    'data/CamVid',
    img_folder='val',
    mask_folder='val_labels',
    transform=transform,
    target_transform=target_transform)

# The dataloaders are given as config dicts; the Runner builds them.
train_dataloader = dict(
    batch_size=3,
    dataset=train_set,
    sampler=dict(type='DefaultSampler', shuffle=True),
    collate_fn=dict(type='default_collate'))

val_dataloader = dict(
    batch_size=3,
    dataset=valid_set,
    sampler=dict(type='DefaultSampler', shuffle=False),
    collate_fn=dict(type='default_collate'))

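3. Implement the segmentation model

The code below defines a model class named MMDeeplabV3. The class derives from BaseModel and wraps the DeepLabV3 segmentation model. It overrides the forward method to handle input images and labels, computing the loss in training mode and returning predictions in prediction mode.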

from mmengine.model import BaseModel
from torchvision.models.segmentation import deeplabv3_resnet50
import torch.nn.functional as F


class MMDeeplabV3(BaseModel):

    def __init__(self, num_classes):
        super().__init__()
        self.deeplab = deeplabv3_resnet50(num_classes=num_classes)

    def forward(self, imgs, data_samples=None, mode='tensor'):
        x = self.deeplab(imgs)['out']
        if mode == 'loss':
            return {'loss': F.cross_entropy(x, data_samples['labels'])}
        elif mode == 'predict':
            return x, data_samples

4. Train with the Runner

Before training with the Runner, we need to implement the IoU (Intersection over Union) metric to evaluate the model's performance.

import torch
from mmengine.evaluator import BaseMetric


class IoU(BaseMetric):

    def process(self, data_batch, data_samples):
        preds, labels = data_samples[0], data_samples[1]['labels']
        preds = torch.argmax(preds, dim=1)
        intersect = (labels == preds).sum()
        union = (torch.logical_or(preds, labels)).sum()
        iou = (intersect / union).cpu()
        self.results.append(
            dict(batch_size=len(labels), iou=iou * len(labels)))

    def compute_metrics(self, results):
        # Use the `results` argument (gathered across processes in a
        # distributed run) rather than `self.results`.
        total_iou = sum(result['iou'] for result in results)
        num_samples = sum(result['batch_size'] for result in results)
        return dict(iou=total_iou / num_samples)
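The IoU class above uses a deliberately simple formulation. For comparison, a common per-class definition computes, for every class, the number of pixels where prediction and label both equal that class divided by the number of pixels where either does, and then averages over the classes that occur. The sketch below is an illustration on flat lists with a hypothetical helper name (mean_iou), not a replacement for the tutorial's metric.

```python
def mean_iou(preds, labels, num_classes):
    """Mean IoU over classes that appear in preds or labels.

    preds and labels are flat, equally long sequences of class indices.
    """
    ious = []
    for cls in range(num_classes):
        intersect = sum(1 for p, y in zip(preds, labels)
                        if p == cls and y == cls)
        union = sum(1 for p, y in zip(preds, labels)
                    if p == cls or y == cls)
        if union > 0:  # skip classes absent from both
            ious.append(intersect / union)
    return sum(ious) / len(ious)


preds = [0, 0, 1, 1]
labels = [0, 1, 1, 1]
# class 0: 1 / 2, class 1: 2 / 3, class 2 never occurs and is skipped
score = mean_iou(preds, labels, num_classes=3)
print(score)
```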

It is also important to implement a visualization hook that makes it easier to compare predictions with labels.

import os
import os.path as osp
import shutil

import cv2
import numpy as np
import torch
from mmengine.hooks import Hook


class SegVisHook(Hook):

    def __init__(self, data_root, vis_num=1) -> None:
        super().__init__()
        self.vis_num = vis_num
        self.palette = create_palette(osp.join(data_root, 'class_dict.csv'))

    def after_val_iter(self,
                       runner,
                       batch_idx: int,
                       data_batch=None,
                       outputs=None) -> None:
        # Only visualize the first few validation batches.
        if batch_idx > self.vis_num:
            return
        preds, data_samples = outputs
        img_paths = data_samples['img_path']
        mask_paths = data_samples['mask_path']
        _, C, H, W = preds.shape
        preds = torch.argmax(preds, dim=1)
        for idx, (pred, img_path,
                  mask_path) in enumerate(zip(preds, img_paths, mask_paths)):
            pred_mask = np.zeros((H, W, 3), dtype=np.uint8)
            runner.visualizer.set_image(pred_mask)
            # Paint every class region with its palette color.
            for color, class_id in self.palette.items():
                runner.visualizer.draw_binary_masks(
                    pred == class_id,
                    colors=[color],
                    alphas=1.0,
                )
            # Convert RGB to BGR
            pred_mask = runner.visualizer.get_image()[..., ::-1]
            saved_dir = osp.join(runner.log_dir, 'vis_data', str(idx))
            os.makedirs(saved_dir, exist_ok=True)

            # Save the input image, the ground-truth mask, and the prediction
            # side by side in the same folder.
            shutil.copyfile(img_path,
                            osp.join(saved_dir, osp.basename(img_path)))
            shutil.copyfile(mask_path,
                            osp.join(saved_dir, osp.basename(mask_path)))
            cv2.imwrite(
                osp.join(saved_dir, f'pred_{osp.basename(img_path)}'),
                pred_mask)

Finally, train the model with Runner!

from torch.optim import AdamW

from mmengine.optim import AmpOptimWrapper

from mmengine.runner import Runner

num_classes = 32 # Modify to actual number of categories.

runner = Runner(

model=MMDeeplabV3(num_classes),

work_dir='./work_dir',

train_dataloader=train_dataloader,

optim_wrapper=dict(

type=AmpOptimWrapper, optimizer=dict(type=AdamW, lr=2e-4)),

train_cfg=dict(by_epoch=True, max_epochs=10, val_interval=10),

val_dataloader=val_dataloader,

val_cfg=dict(),

val_evaluator=dict(type=IoU),

custom_hooks=[SegVisHook('data/CamVid')],

default_hooks=dict(checkpoint=dict(type='CheckpointHook', interval=1)),

)

runner.train()

After training finishes, you can check the results in the folder ./work_dir/{timestamp}/vis_data.

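5、Common Usage

1、Resume Training

Resuming training means continuing training from the state saved by a previous run, where the state includes the model weights, the optimizer state, and the parameter scheduler state.

Automatically resume training

Users can set the resume argument of Runner to enable automatic resuming. When resume is set to True, Runner tries to resume from the latest checkpoint automatically. If a latest checkpoint exists (for example, the previous training run was interrupted), training resumes from it; otherwise (for example, the previous run had no time to save a checkpoint, or a new training task is started) training starts from scratch. Here is an example of how to enable automatic resuming.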

runner = Runner(

model=ResNet18(),

work_dir='./work_dir',

train_dataloader=train_dataloader_cfg,

optim_wrapper=dict(optimizer=dict(type='SGD', lr=0.001, momentum=0.9)),

train_cfg=dict(by_epoch=True, max_epochs=3),

resume=True,

)

runner.train()

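Specify the checkpoint path

To resume from a specific checkpoint, set load_from in addition to resume=True. Note that if only load_from is set without resume=True, only the weights in the checkpoint are loaded and training restarts from the beginning instead of continuing from the previous state.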

runner = Runner(

model=ResNet18(),

work_dir='./work_dir',

train_dataloader=train_dataloader_cfg,

optim_wrapper=dict(optimizer=dict(type='SGD', lr=0.001, momentum=0.9)),

train_cfg=dict(by_epoch=True, max_epochs=3),

load_from='./work_dir/epoch_2.pth',

resume=True,

)

runner.train()
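The interaction between resume and load_from described above can be summarised as a small decision function. This is only a sketch of the documented behaviour, not MMEngine code: describe_start and its latest_checkpoint argument are hypothetical names introduced for this example.

```python
def describe_start(resume, load_from=None, latest_checkpoint=None):
    """Summarise how training starts for a given resume/load_from setting.

    latest_checkpoint stands for the newest checkpoint found in work_dir,
    or None when no checkpoint has been saved yet.
    """
    if load_from is not None:
        if resume:
            # Weights, optimizer state and scheduler state are all restored.
            return f'resume full state from {load_from}'
        # Only the weights are loaded; epoch/iteration counters start at 0.
        return f'load weights only from {load_from}, restart training'
    if resume and latest_checkpoint is not None:
        return f'resume full state from {latest_checkpoint}'
    return 'train from scratch'


print(describe_start(resume=True, latest_checkpoint='epoch_2.pth'))
print(describe_start(resume=True))
print(describe_start(resume=False, load_from='epoch_2.pth'))
print(describe_start(resume=True, load_from='epoch_2.pth'))
```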

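2、Distributed Training

MMEngine supports training models on CPU, on a single GPU, on multiple GPUs in one machine, and on multiple machines. When multiple GPUs are available, the following commands enable multi-GPU training on one or several machines to shorten training time.

Launch training

(1) Multiple GPUs on a single machine

Assuming the current machine has 8 GPUs, you can enable multi-GPU training with the following command: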

python -m torch.distributed.launch --nproc_per_node=8 examples/distributed_training.py --launcher pytorch

If you need to specify GPU indices, set the CUDA_VISIBLE_DEVICES environment variable, for example to use GPU 0 and GPU 3.

CUDA_VISIBLE_DEVICES=0,3 python -m torch.distributed.launch --nproc_per_node=2 examples/distributed_training.py --launcher pytorch

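(2) Multiple machines

Assuming 2 machines connected via Ethernet, run the following commands. Replace 127.0.0.1 in --master_addr with an address of the first machine that the second machine can reach.

On the first machine: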

python -m torch.distributed.launch \
    --nnodes 2 \
    --node_rank 0 \
    --master_addr 127.0.0.1 \
    --master_port 29500 \
    --nproc_per_node=8 \
    examples/distributed_training.py --launcher pytorch

On the second machine:

python -m torch.distributed.launch \
    --nnodes 2 \
    --node_rank 1 \
    --master_addr 127.0.0.1 \
    --master_port 29500 \
    --nproc_per_node=8 \
    examples/distributed_training.py --launcher pytorch

If you run MMEngine on a Slurm cluster, the following command enables training on 2 machines with 16 GPUs.

srun -p mm_dev \
    --job-name=test \
    --gres=gpu:8 \
    --ntasks=16 \
    --ntasks-per-node=8 \
    --cpus-per-task=5 \
    --kill-on-bad-exit=1 \
    python examples/distributed_training.py --launcher="slurm"

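Customize distributed training

No code changes are needed when switching from single-GPU to multi-GPU training. By default, Runner wraps the model with MMDistributedDataParallel to support multi-GPU training.

If you want to pass more arguments to MMDistributedDataParallel, or use your own CustomDistributedDataParallel, set model_wrapper_cfg.

(1) Pass more arguments to MMDistributedDataParallel

For example, set find_unused_parameters to True: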

cfg = dict(

model_wrapper_cfg=dict(

type='MMDistributedDataParallel', find_unused_parameters=True)

)

runner = Runner(

model=ResNet18(),

work_dir='./work_dir',

train_dataloader=train_dataloader_cfg,

optim_wrapper=dict(optimizer=dict(type='SGD', lr=0.001, momentum=0.9)),

train_cfg=dict(by_epoch=True, max_epochs=3),

cfg=cfg,

)

runner.train()

(2) Use a custom CustomDistributedDataParallel

from torch.nn.parallel import DistributedDataParallel
from mmengine.registry import MODEL_WRAPPERS


@MODEL_WRAPPERS.register_module()
class CustomDistributedDataParallel(DistributedDataParallel):
    pass

cfg = dict(model_wrapper_cfg=dict(type='CustomDistributedDataParallel'))

runner = Runner(

model=ResNet18(),

work_dir='./work_dir',

train_dataloader=train_dataloader_cfg,

optim_wrapper=dict(optimizer=dict(type='SGD', lr=0.001, momentum=0.9)),

train_cfg=dict(by_epoch=True, max_epochs=3),

cfg=cfg,

)

runner.train()
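Setting type='CustomDistributedDataParallel' works because the class was registered under that name. The idea of looking a class up by its type string can be sketched with a toy registry. ToyRegistry, TOY_WRAPPERS and ToyWrapper are hypothetical names used only in this sketch; MMEngine's real Registry does considerably more (scopes, parent registries, build functions).

```python
class ToyRegistry:
    """A minimal name-to-class mapping, for illustration only."""

    def __init__(self, name):
        self.name = name
        self._modules = {}

    def register_module(self):
        # Used as a decorator: stores the class under its own name.
        def decorator(cls):
            self._modules[cls.__name__] = cls
            return cls
        return decorator

    def build(self, cfg):
        cfg = dict(cfg)  # copy so the caller's config is not mutated
        cls = self._modules[cfg.pop('type')]
        # Remaining keys become constructor arguments.
        return cls(**cfg)


TOY_WRAPPERS = ToyRegistry('toy_wrappers')


@TOY_WRAPPERS.register_module()
class ToyWrapper:

    def __init__(self, module, find_unused_parameters=False):
        self.module = module
        self.find_unused_parameters = find_unused_parameters


wrapper = TOY_WRAPPERS.build(
    dict(type='ToyWrapper', module='model', find_unused_parameters=True))
print(type(wrapper).__name__, wrapper.find_unused_parameters)
```

Extra keys in the config, such as find_unused_parameters, simply flow through to the wrapper's constructor, which is the same pattern model_wrapper_cfg relies on.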

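3、Speed Up Training

Mixed precision training

NVIDIA introduced Tensor Cores in the Volta and Turing architectures to support mixed-precision computation with FP32 and FP16, and added BF16 support in the Ampere architecture. With automatic mixed precision training enabled, some operators run in FP16/BF16 and the rest in FP32. This reduces training time and memory usage without changing the model or reducing training accuracy, and so allows larger batch sizes, larger models, and larger input sizes.

MMEngine provides the AmpOptimWrapper wrapper for automatic mixed precision training. Setting type='AmpOptimWrapper' in optim_wrapper enables it without any other code changes.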

runner = Runner(

model=ResNet18(),

work_dir='./work_dir',

train_dataloader=train_dataloader_cfg,

optim_wrapper=dict(

type='AmpOptimWrapper',

# If you want to use bfloat16, uncomment the following line

# dtype='bfloat16', # valid values: ('float16', 'bfloat16', None)

optimizer=dict(type='SGD', lr=0.001, momentum=0.9)),

train_cfg=dict(by_epoch=True, max_epochs=3),

)

runner.train()
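To see why only some operators run in FP16 while others stay in FP32, it helps to look at what half precision can and cannot represent. The sketch below uses only Python's struct module to round values to IEEE 754 half precision. The helper to_fp16, the scale factor 1024 and the sample values are assumptions chosen for illustration; scaling the loss before the backward pass is the general technique AMP implementations such as PyTorch's use, and this sketch does not reproduce any library's actual code.

```python
import struct


def to_fp16(x):
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack('e', struct.pack('e', x))[0]


small_grad = 1e-8

# A tiny gradient is below the smallest positive FP16 value and becomes 0.
underflowed = to_fp16(small_grad)

# Multiplying by a scale factor first keeps it representable in FP16 ...
scale = 1024
scaled = to_fp16(small_grad * scale)

# ... and dividing in higher precision afterwards recovers it approximately.
recovered = scaled / scale

# Near 1.0, FP16 cannot resolve an increment of 1e-4, so a weight update of
# that size would be lost if the weights themselves were kept in FP16.
lost_update = to_fp16(1.0 + 1e-4)

print(underflowed, scaled, recovered, lost_update)
```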

Use a faster optimizer

If you use Ascend devices, you can use the Ascend optimizers to shorten training time. The optimizers supported on Ascend devices are:

NpuFusedAdadelta

NpuFusedAdam

NpuFusedAdamP

NpuFusedAdamW

NpuFusedBertAdam

NpuFusedLamb

NpuFusedRMSprop

NpuFusedRMSpropTF

NpuFusedSGD

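4、Save GPU Memory

Memory capacity is critical for deep learning training and inference and determines whether a model can run at all. Common memory-saving methods include:

Enable the Efficient Conv BN Eval feature (experimental)

We recently introduced an experimental feature in MMCV: Efficient Conv BN Eval, based on the concepts discussed in this paper. The feature is designed to reduce the memory footprint during network training without affecting performance. If your network architecture contains a series of consecutive Conv+BN blocks, and these normalization layers are kept in eval mode during training (common when training object detectors with MMDetection), this feature can reduce memory consumption by more than 20%. To enable Efficient Conv BN Eval, simply add the following command-line argument:

--cfg-options efficient_conv_bn_eval="[backbone]"

If the message "Enabling the "efficient_conv_bn_eval" feature for these modules ..." appears in the output log, the feature has been enabled successfully. Since the feature is still experimental, we look forward to hearing about your experience with it. Please share your usage reports, observations, and suggestions in the discussion thread. Your feedback is crucial for further development and for deciding whether this feature should be integrated into a stable release.

Gradient accumulation

Gradient accumulation is a mechanism that accumulates gradients for a configured number of steps instead of updating the parameters at every step; after that, the network parameters are updated and the gradients are cleared. By delaying the parameter update this way, the result is similar to training with a large batch size while saving activation memory. Note, however, that if the model contains batch normalization layers, gradient accumulation will affect performance.

The configuration can be written as: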

optim_wrapper_cfg = dict(

type='OptimWrapper',

optimizer=dict(type='SGD', lr=0.001, momentum=0.9),

# update every four times

accumulative_counts=4)

# Full example with Runner
import torch
import torch.nn as nn
from torch.utils.data import DataLoader
from mmengine.runner import Runner
from mmengine.model import BaseModel

train_dataset = [(torch.ones(1, 1), torch.ones(1, 1))] * 50
train_dataloader = DataLoader(train_dataset, batch_size=2)


class ToyModel(BaseModel):

    def __init__(self) -> None:
        super().__init__()
        self.linear = nn.Linear(1, 1)

    def forward(self, img, label, mode):
        feat = self.linear(img)
        loss1 = (feat - label).pow(2)
        loss2 = (feat - label).abs()
        return dict(loss1=loss1, loss2=loss2)


runner = Runner(
    model=ToyModel(),
    work_dir='tmp_dir',
    train_dataloader=train_dataloader,
    train_cfg=dict(by_epoch=True, max_epochs=1),
    optim_wrapper=dict(optimizer=dict(type='SGD', lr=0.01),
                       accumulative_counts=4)
)
runner.train()
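Why accumulating gradients resembles a larger batch can be checked with a few lines of arithmetic. The sketch below uses a one-parameter linear model with a mean-squared-error loss and compares the gradient of one batch of 8 samples with the sum of 4 micro-batch gradients. The function mse_grad and the toy data are assumptions for illustration, and dividing each micro-batch gradient by the number of accumulation steps is a choice made in this sketch so that the two quantities match.

```python
def mse_grad(w, batch):
    """Gradient of mean((w * x - y) ** 2) with respect to w."""
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)


# Eight toy samples drawn from y = 3x + 1 (hypothetical data).
data = [(float(i), 3.0 * i + 1.0) for i in range(1, 9)]
w = 0.5
accumulative_counts = 4
micro_batch_size = 2

# Gradient computed on the whole batch of 8 at once.
full_batch_grad = mse_grad(w, data)

# Gradient accumulated over 4 micro-batches of 2 without updating w.
accumulated = 0.0
for step in range(accumulative_counts):
    start = step * micro_batch_size
    micro_batch = data[start:start + micro_batch_size]
    accumulated += mse_grad(w, micro_batch) / accumulative_counts

print(full_batch_grad, accumulated)
```

Only one micro-batch of activations needs to be alive at a time, which is where the memory saving comes from. Batch normalization breaks the equivalence because its statistics are computed per micro-batch.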

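Gradient checkpointing

Gradient checkpointing trades time for space: it reduces memory usage by storing fewer activations, at the cost of recomputing the unsaved activations when gradients are computed. The functionality is implemented in the torch.utils.checkpoint package. In short, during the forward pass the function passed to checkpoint runs in torch.no_grad mode and only its inputs and outputs are saved; its intermediate activations are then recomputed during the backward pass.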
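The recompute-in-backward idea can be illustrated without any framework. The sketch below backpropagates through a chain of scalar layers x_{i+1} = tanh(w_i * x_i) twice: once keeping every activation, and once keeping only the input of each segment and recomputing the rest during the backward pass. All names (layer, backward_full, backward_checkpointed) and the toy weights are assumptions for this example; it is a conceptual sketch, not how torch.utils.checkpoint is implemented.

```python
import math


def layer(w, x):
    return math.tanh(w * x)


def backward_full(weights, x0):
    """Store every activation, then backpropagate d(output)/d(w_i)."""
    acts = [x0]
    for w in weights:
        acts.append(layer(w, acts[-1]))
    grads = [0.0] * len(weights)
    upstream = 1.0
    for i in reversed(range(len(weights))):
        local = 1.0 - acts[i + 1] ** 2  # derivative of tanh at layer i
        grads[i] = upstream * local * acts[i]
        upstream *= local * weights[i]
    return grads, len(acts)


def backward_checkpointed(weights, x0, segment):
    """Store only each segment's input; recompute the rest when needed."""
    checkpoints = {}
    x = x0
    for i, w in enumerate(weights):
        if i % segment == 0:
            checkpoints[i] = x
        x = layer(w, x)
    stored_after_forward = len(checkpoints)
    grads = [0.0] * len(weights)
    upstream = 1.0
    for start in sorted(checkpoints, reverse=True):
        end = min(start + segment, len(weights))
        # Recompute the activations of this segment from its checkpoint.
        acts = [checkpoints[start]]
        for i in range(start, end):
            acts.append(layer(weights[i], acts[-1]))
        for i in reversed(range(start, end)):
            local = 1.0 - acts[i - start + 1] ** 2
            grads[i] = upstream * local * acts[i - start]
            upstream *= local * weights[i]
    return grads, stored_after_forward


weights = [0.9, -1.1, 0.7, 1.3, -0.8, 1.05, 0.6, -1.2]
x0 = 0.5
full_grads, full_stored = backward_full(weights, x0)
ckpt_grads, ckpt_stored = backward_checkpointed(weights, x0, segment=4)
print(full_stored, ckpt_stored)
```

Both versions produce the same gradients; the checkpointed one keeps fewer values after the forward pass and pays for it with a second forward computation per segment.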

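Large model training techniques

Recent research shows that training larger models helps improve performance, but training models at that scale requires enormous resources, and it is hard to fit the whole model into the memory of a single GPU. Large model training techniques were introduced for this reason, such as DeepSpeed ZeRO and the Fully Sharded Data Parallel (FSDP) technique introduced in FairScale. These techniques shard parameters, gradients, and optimizer states across the data-parallel processes while keeping the simplicity of data parallelism.

FSDP has been officially supported since PyTorch 1.11. The configuration can be written as: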

# located in cfg file

model_wrapper_cfg=dict(type='MMFullyShardedDataParallel', cpu_offload=True)

# Full example with Runner
import torch
import torch.nn as nn
from torch.utils.data import DataLoader
from mmengine.runner import Runner
from mmengine.model import BaseModel

train_dataset = [(torch.ones(1, 1), torch.ones(1, 1))] * 50
train_dataloader = DataLoader(train_dataset, batch_size=2)


class ToyModel(BaseModel):

    def __init__(self) -> None:
        super().__init__()
        self.linear = nn.Linear(1, 1)

    def forward(self, img, label, mode):
        feat = self.linear(img)
        loss1 = (feat - label).pow(2)
        loss2 = (feat - label).abs()
        return dict(loss1=loss1, loss2=loss2)


runner = Runner(
    model=ToyModel(),
    work_dir='tmp_dir',
    train_dataloader=train_dataloader,
    train_cfg=dict(by_epoch=True, max_epochs=1),
    optim_wrapper=dict(optimizer=dict(type='SGD', lr=0.01)),
    cfg=dict(model_wrapper_cfg=dict(
        type='MMFullyShardedDataParallel', cpu_offload=True))
)
runner.train()

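5、Training Large Models

Training large models requires substantial resources, and the memory of a single GPU is often not enough. Techniques for training large models were developed for this reason; a typical one is DeepSpeed ZeRO, which supports sharding of optimizer states, gradients, and parameters.

DeepSpeed is an open-source distributed framework based on PyTorch, developed by Microsoft. It supports training strategies such as ZeRO, 3D-Parallelism, DeepSpeed-MoE, and ZeRO-Infinity.

MMEngine has supported training models with DeepSpeed since v0.8.0.

To use DeepSpeed, install it first with the following command: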

pip install deepspeed

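After installing DeepSpeed, configure the strategy and optim_wrapper parameters of FlexibleRunner as follows:

strategy: set type='DeepSpeedStrategy' and configure the other parameters. See DeepSpeedStrategy for more details.

optim_wrapper: set type='DeepSpeedOptimWrapper' and configure the other parameters. See DeepSpeedOptimWrapper for more details.

Here is an example configuration related to DeepSpeed: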

from mmengine.runner._flexible_runner import FlexibleRunner

# set `type='DeepSpeedStrategy'` and configure other parameters

strategy = dict(

type='DeepSpeedStrategy',

fp16=dict(

enabled=True,

fp16_master_weights_and_grads=False,

loss_scale=0,

loss_scale_window=500,

hysteresis=2,

min_loss_scale=1,

initial_scale_power=15,

),

inputs_to_half=[0],

zero_optimization=dict(

stage=3,

allgather_partitions=True,

reduce_scatter=True,

allgather_bucket_size=50000000,

reduce_bucket_size=50000000,

overlap_comm=True,

contiguous_gradients=True,

cpu_offload=False),

)

# set `type='DeepSpeedOptimWrapper'` and configure other parameters

optim_wrapper = dict(

type='DeepSpeedOptimWrapper',

optimizer=dict(type='AdamW', lr=1e-3))

# construct FlexibleRunner

runner = FlexibleRunner(

model=MMResNet50(),

work_dir='./work_dirs',

strategy=strategy,

train_dataloader=train_dataloader,

optim_wrapper=optim_wrapper,

param_scheduler=dict(type='LinearLR'),

train_cfg=dict(by_epoch=True, max_epochs=10, val_interval=1),

val_dataloader=val_dataloader,

val_cfg=dict(),

val_evaluator=dict(type=Accuracy))

# start training

runner.train()

Launch distributed training with two GPUs:

torchrun --nproc-per-node 2 examples/distributed_training_with_flexible_runner.py --use-deepspeed

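6、Better Performance Optimizers

This section lists some third-party optimizers supported by MMEngine that may bring faster convergence or higher performance.

D-Adaptation

D-Adaptation provides the DAdaptAdaGrad, DAdaptAdam, and DAdaptSGD optimizers.

Note: to use the optimizers provided by D-Adaptation, you need to upgrade mmengine to 0.6.0.

Installation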

pip install dadaptation

Take DAdaptAdaGrad as an example.

runner = Runner(

model=ResNet18(),

work_dir='./work_dir',

train_dataloader=train_dataloader_cfg,

# To view the input parameters for DAdaptAdaGrad, you can refer to

# https://github.com/facebookresearch/dadaptation/blob/main/dadaptation/dadapt_adagrad.py

optim_wrapper=dict(optimizer=dict(type='DAdaptAdaGrad', lr=0.001, momentum=0.9)),

train_cfg=dict(by_epoch=True, max_epochs=3),

)

runner.train()

Lion-Pytorch

lion-pytorch provides the Lion optimizer.

pip install lion-pytorch

runner = Runner(

model=ResNet18(),

work_dir='./work_dir',

train_dataloader=train_dataloader_cfg,

# To view the input parameters for Lion, you can refer to

# https://github.com/lucidrains/lion-pytorch/blob/main/lion_pytorch/lion_pytorch.py

optim_wrapper=dict(optimizer=dict(type='Lion', lr=1e-4, weight_decay=1e-2)),

train_cfg=dict(by_epoch=True, max_epochs=3),

)

runner.train()

Sophia

Sophia provides Sophia, SophiaG, DecoupledSophia and Sophia2 optimizers.

pip install Sophia-Optimizer

runner = Runner(

model=ResNet18(),

work_dir='./work_dir',

train_dataloader=train_dataloader_cfg,

# To view the input parameters for SophiaG, you can refer to

# https://github.com/kyegomez/Sophia/blob/main/Sophia/Sophia.py

optim_wrapper=dict(optimizer=dict(type='SophiaG', lr=2e-4, betas=(0.965, 0.99), rho = 0.01, weight_decay=1e-1)),

train_cfg=dict(by_epoch=True, max_epochs=3),

)

runner.train()

bitsandbytes

bitsandbytes provides the AdamW8bit, Adam8bit, Adagrad8bit, PagedAdam8bit, PagedAdamW8bit, LAMB8bit, LARS8bit, RMSprop8bit, Lion8bit, PagedLion8bit, and SGD8bit optimizers.

pip install bitsandbytes

runner = Runner(

model=ResNet18(),

work_dir='./work_dir',

train_dataloader=train_dataloader_cfg,

# To view the input parameters for AdamW8bit, you can refer to

# https://github.com/TimDettmers/bitsandbytes/blob/main/bitsandbytes/optim/adamw.py

optim_wrapper=dict(optimizer=dict(type='AdamW8bit', lr=1e-4, weight_decay=1e-2)),

train_cfg=dict(by_epoch=True, max_epochs=3),

)

runner.train()

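7、Visualize Training Logs

MMEngine integrates experiment management tools such as TensorBoard, Weights & Biases (WandB), MLflow, ClearML, Neptune, DVCLive, and Aim, making it easy to track and visualize metrics such as loss and accuracy.

TensorBoard

Configure visualizer in the initialization arguments of Runner and set vis_backends to TensorboardVisBackend.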

runner = Runner(

model=MMResNet50(),

work_dir='./work_dir',

train_dataloader=train_dataloader,

optim_wrapper=dict(optimizer=dict(type=SGD, lr=0.001, momentum=0.9)),

train_cfg=dict(by_epoch=True, max_epochs=5, val_interval=1),

val_dataloader=val_dataloader,

val_cfg=dict(),

val_evaluator=dict(type=Accuracy),

visualizer=dict(type='Visualizer', vis_backends=[dict(type='TensorboardVisBackend')]),

)

runner.train()

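8、Setting the Random Seed

As described in PyTorch's REPRODUCIBILITY notes, two factors affect the reproducibility of an experiment: random numbers and nondeterministic algorithms.

MMEngine can set the random seed and select deterministic algorithms. Users simply set the randomness argument of Runner, which is eventually consumed by set_random_seed and has the following three fields:

seed (int): The random seed. If this field is not set, a random number is used.

diff_rank_seed (bool): Whether to set different seeds for different processes by adding the rank (process index) to the seed.

deterministic (bool): Whether to set deterministic options for the CUDNN backend.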
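The effect of the seed and diff_rank_seed fields can be sketched with Python's own random module. The helpers seed_for_rank and first_draws are hypothetical names for this illustration; the sketch mirrors the field descriptions above and is not MMEngine's set_random_seed.

```python
import random


def seed_for_rank(seed, rank, diff_rank_seed=False):
    """Seed used by one process, following the diff_rank_seed description."""
    return seed + rank if diff_rank_seed else seed


def first_draws(seed, n=3):
    """First n random numbers produced by a generator with this seed."""
    rng = random.Random(seed)
    return [rng.random() for _ in range(n)]


# Four processes sharing one seed, then with per-rank seeds.
same = [seed_for_rank(0, rank) for rank in range(4)]
different = [seed_for_rank(0, rank, diff_rank_seed=True) for rank in range(4)]
print(same, different)

# The same seed reproduces the same sequence; a different seed does not.
print(first_draws(0) == first_draws(0), first_draws(0) == first_draws(1))
```

Per-rank seeds are useful when every process should draw different random augmentations, while a shared seed keeps all processes in lockstep.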

We take the 15-minute getting-started tutorial as an example to show how to set randomness in MMEngine.

runner = Runner(

model=MMResNet50(),

work_dir='./work_dir',

train_dataloader=train_dataloader,

optim_wrapper=dict(optimizer=dict(type=SGD, lr=0.001, momentum=0.9)),

train_cfg=dict(by_epoch=True, max_epochs=5, val_interval=1),

val_dataloader=val_dataloader,

val_cfg=dict(),

val_evaluator=dict(type=Accuracy),

# adding randomness setting

randomness=dict(seed=0),

)

runner.train()

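However, even with the random seed set and deterministic algorithms selected, two experiments may still differ slightly. The root cause is that atomic operations in CUDA are unordered and random during parallel training.

The CUDA implementations of some operators inevitably perform atomic operations such as add, subtract, multiply, and divide on the same memory address multiple times from different CUDA kernels. The use of atomicAdd is especially common in the backward pass. These atomic operations are executed in an unordered, random order. So when atomic operations are performed on the same memory address multiple times, for example when several gradients are added to the same address, the execution order is nondeterministic: even if each number is the same, the order in which the numbers are added will differ.

The randomness of the summation order leads to another problem: because the summed values are generally floating-point numbers, which lose precision, the final results differ slightly.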
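The order dependence of floating-point summation is easy to reproduce on the CPU. The three values below are arbitrary and stand in for gradients added to the same address in two different orders.

```python
values = [0.1, 0.2, 0.3]

# Same three numbers, two different association orders.
left_to_right = (values[0] + values[1]) + values[2]
right_to_left = values[0] + (values[1] + values[2])

print(left_to_right == right_to_left)
print(abs(left_to_right - right_to_left))
```

The two sums differ only in the last bit, but after many training iterations such differences can grow into visibly different loss curves.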

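Therefore, by setting the random seed and setting deterministic to True, we can make sure that the initial weights, and even the forward outputs and the loss values, are identical across experiments. After one backward pass, however, subtle differences may appear, and the final performance of the trained models will differ slightly.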

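9、Debugging Tricks

Set the dataset's length

When debugging code, you sometimes need to train for several epochs, for example to debug the validation process or to check whether checkpoints are saved as expected. If the dataset is large, however, a single epoch may take a long time; in that case you can set the length of the dataset. Note that only datasets inherited from BaseDataset support this feature; see the BaseDataset documentation for its usage.

Stop the training and set indices to 5000 in the dataset field of configs/base/datasets/cifar10_bs16.py.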

train_dataloader = dict(

batch_size=16,

num_workers=2,

dataset=dict(

type=dataset_type,

data_prefix='data/cifar10',

test_mode=False,

indices=5000,  # iterate over only 5000 samples in each epoch

pipeline=train_pipeline),

sampler=dict(type='DefaultSampler', shuffle=True),

)

Launch training again

python tools/train.py configs/resnet/resnet18_8xb16_cifar10.py

As we can see, the number of iterations per epoch has changed to 313. Compared with before, an epoch now finishes much faster.

02/20 14:44:58 - mmengine - INFO - Epoch(train) [1][100/313] lr: 1.0000e-01 eta: 0:31:09 time: 0.0154 data_time: 0.0004 memory: 214 loss: 2.1852

02/20 14:44:59 - mmengine - INFO - Epoch(train) [1][200/313] lr: 1.0000e-01 eta: 0:23:18 time: 0.0143 data_time: 0.0002 memory: 214 loss: 2.0424

02/20 14:45:01 - mmengine - INFO - Epoch(train) [1][300/313] lr: 1.0000e-01 eta: 0:20:39 time: 0.0143 data_time: 0.0003 memory: 214 loss: 1.814
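The figure 313 in the log follows directly from the configuration: 5000 samples with a batch size of 16. The helper below is a hypothetical illustration that assumes a single process and that the last incomplete batch is kept unless drop_last is set.

```python
import math


def iters_per_epoch(num_samples, batch_size, drop_last=False):
    """Number of batches a dataloader yields in one epoch."""
    if drop_last:
        return num_samples // batch_size
    return math.ceil(num_samples / batch_size)


limited = iters_per_epoch(5000, 16)   # with indices=5000
full = iters_per_epoch(50000, 16)     # full CIFAR-10 training set
print(limited, full)
```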

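10、EpochBasedTraining to IterBasedTraining

Epoch-based training and iteration-based training are the two commonly used training modes in MMEngine. For example, downstream repositories such as MMDetection train models by epoch, while MMSegmentation trains models by iteration.

Many modules in MMEngine train models by epoch by default, such as ParamScheduler, LoggerHook, and CheckpointHook, so their configuration must be adjusted for iteration-based training. For example, a commonly used epoch-based configuration looks like this: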

param_scheduler = dict(
    type='MultiStepLR',
    milestones=[6, 8],
    by_epoch=True  # by_epoch is True by default
)

default_hooks = dict(

logger=dict(type='LoggerHook', log_metric_by_epoch=True), # log_metric_by_epoch is True by default

checkpoint=dict(type='CheckpointHook', interval=2, by_epoch=True), # by_epoch is True by default

)

train_cfg = dict(

by_epoch=True, # set by_epoch=True or type='EpochBasedTrainLoop'

max_epochs=10,

val_interval=2

)

log_processor = dict(

by_epoch=True

) # This is the default configuration, and just set it here for comparison.

runner = Runner(

model=ResNet18(),

work_dir='./work_dir',

# Assuming train_dataloader is configured with an epoch-based sampler

train_dataloader=train_dataloader_cfg,

optim_wrapper=dict(optimizer=dict(type='SGD', lr=0.001, momentum=0.9)),

param_scheduler=param_scheduler,

default_hooks=default_hooks,

log_processor=log_processor,

train_cfg=train_cfg,

resume=True,

)

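Converting the configuration above to iteration-based training takes four steps:

(1) Set by_epoch to False in train_cfg, set max_iters to the total number of training iterations, and set val_interval to the number of iterations between validations.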

train_cfg = dict(

by_epoch=False,

max_iters=10000,

val_interval=2000

)

(2) Set log_metric_by_epoch to False in the logger hook and by_epoch to False in the checkpoint hook.

default_hooks = dict(

logger=dict(type='LoggerHook', log_metric_by_epoch=False),

checkpoint=dict(type='CheckpointHook', by_epoch=False, interval=2000),

)

(3) Set by_epoch to False in param_scheduler and convert any epoch-related parameters to iterations.

param_scheduler = dict(

type='MultiStepLR',

milestones=[6000, 8000],

by_epoch=False,

)

Alternatively, if you can make sure that IterBasedTraining and EpochBasedTraining run the same total number of iterations, simply set convert_to_iter_based to True.

param_scheduler = dict(
    type='MultiStepLR',
    milestones=[6, 8],
    convert_to_iter_based=True
)

(4) Set by_epoch to False in log_processor.

log_processor = dict(

by_epoch=False

)
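The conversion of epoch-based milestones into iteration-based ones is plain multiplication. The sketch below reproduces the numbers used in this section (10 epochs, 10000 iterations, milestones at epochs 6 and 8); epochs_to_iters is a hypothetical helper, and the assumption is that every epoch has the same number of iterations.

```python
def epochs_to_iters(milestones, iters_per_epoch):
    """Convert epoch-based milestones to iteration-based milestones."""
    return [m * iters_per_epoch for m in milestones]


max_epochs = 10
max_iters = 10000
iters_per_epoch = max_iters // max_epochs

iter_milestones = epochs_to_iters([6, 8], iters_per_epoch)
val_interval = 2 * iters_per_epoch  # epoch-based val_interval=2

print(iters_per_epoch, iter_milestones, val_interval)
```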
