yolov8数据标注、模型训练到模型部署全过程

yolov8进行自己的数据标注、模型训练以及模型部署全过程

文章目录

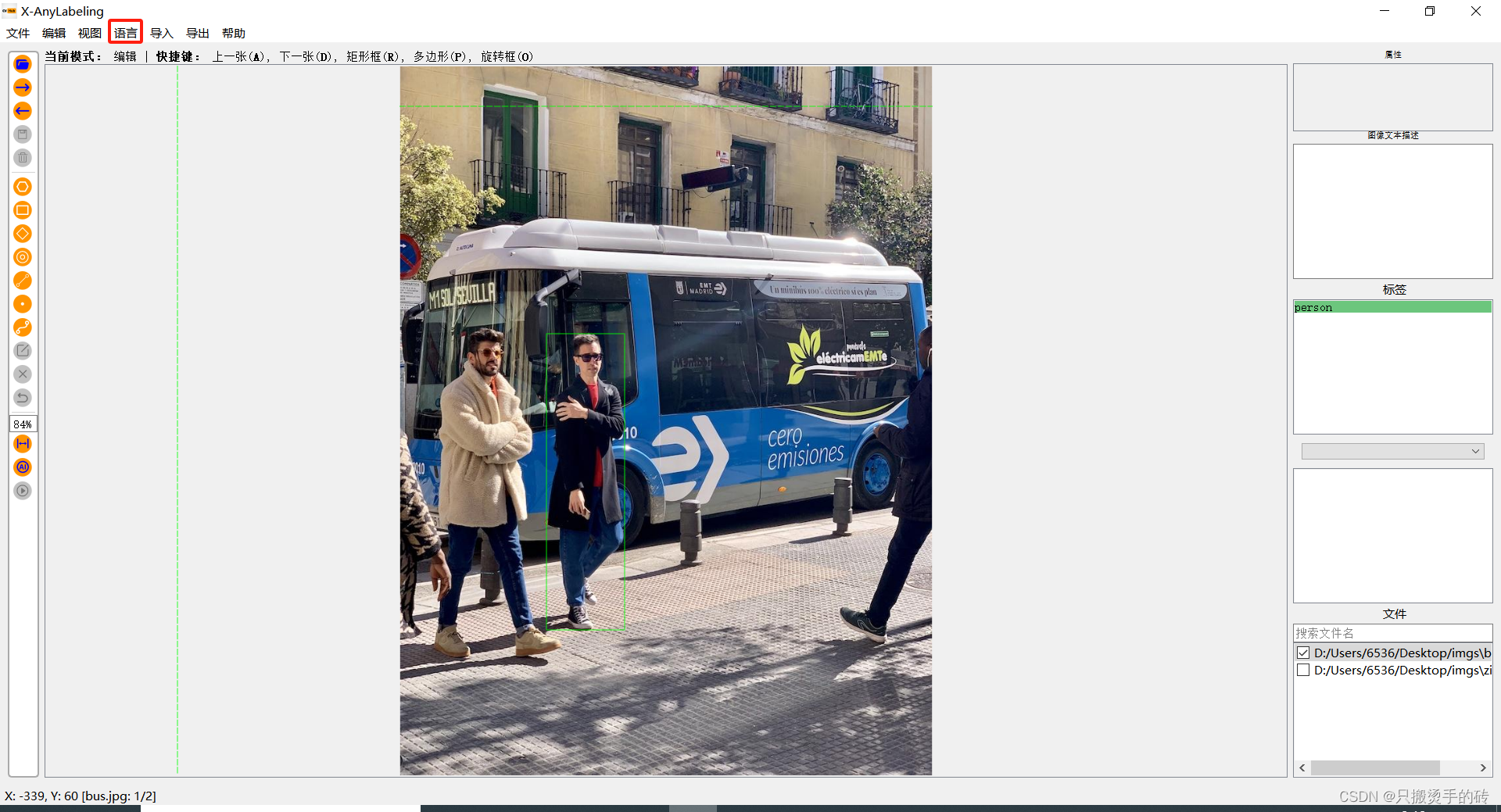

一、数据标注(x-anylabeling)

1. 安装方式

1.1 直接通过Releases安装

https://github.com/CVHub520/X-AnyLabeling/releases

根据自己的系统选择CPU还是GPU推理以及Linux或者win系统

1.2 clone源码后采用终端运行

https://github.com/CVHub520/X-AnyLabeling

在项目中打开终端安装所需的环境依赖:

pip install -r requirements.txt

安装完成后运行app.py

python anylabeling/app.py

注:当直接使用exe运行失败的话,最好就是采用第二种方式,可以通过终端知道报错的原因。

2. 如何使用

注:由于x-anylabeling是可以使用自己训练后的模型,然后自动生成标注数据的,但是第一次的话就需要自己标注数据。

1. 首次打开可以进行语言的选择

2. 打开需要标注数据的文件夹

3. 点击矩形框或者使用快捷键(R)

4. 直接进行标记并自己定义类

5. 打开左上角“文件”选项,点击自动保存

保存的文件类型是json,你可以自己选择导出的类型。

yolov8训练的标签格式是txt,通常我标记的时候都是选择导出voc(xml格式)。

注:你也可以直接导出yolo标签格式

6. 将标记好的数据进行训练的格式进行划分

import os

import random

import shutil

# 输入文件夹路径和划分比例

folder_path = input("请输入文件夹路径:")

train_ratio = float(input("请输入训练集比例:"))

# 检查文件夹是否存在

if not os.path.exists(folder_path):

print("文件夹不存在!")

exit()

# 获取所有jpg和txt文件

jpg_files = [file for file in os.listdir(folder_path) if file.endswith(".jpg")]

txt_files = [file for file in os.listdir(folder_path) if file.endswith(".txt")]

# 检查文件数量是否相等

if len(jpg_files) != len(txt_files):

print("图片和标签数量不匹配!")

exit()

# 打乱文件顺序

random.shuffle(jpg_files)

# 划分训练集和验证集

train_size = int(len(jpg_files) * train_ratio)

train_jpg = jpg_files[:train_size]

train_txt = [file.replace(".jpg", ".txt") for file in train_jpg]

val_jpg = jpg_files[train_size:]

val_txt = [file.replace(".jpg", ".txt") for file in val_jpg]

# 创建文件夹和子文件夹

if not os.path.exists("images/train"):

os.makedirs("images/train")

if not os.path.exists("images/val"):

os.makedirs("images/val")

if not os.path.exists("labels/train"):

os.makedirs("labels/train")

if not os.path.exists("labels/val"):

os.makedirs("labels/val")

# 复制文件到目标文件夹

for file in train_jpg:

shutil.copy(os.path.join(folder_path, file), "images/train")

for file in train_txt:

shutil.copy(os.path.join(folder_path, file), "labels/train")

for file in val_jpg:

shutil.copy(os.path.join(folder_path, file), "images/val")

for file in val_txt:

shutil.copy(os.path.join(folder_path, file), "labels/val")

print("处理完成!")

生成images和labels的文件夹

我这里没有加入测试集,只使用了训练集和验证集

7. 训练好自己的模型后

将生成的.onnx和.yaml放在一个路径下

yaml文件的配置,注意这个类不要用数字,会被认定为int型,然后导致无法生成框,也就是报错。这个类的名称和个数一定要与训练的时候进行配置的一样

就是这里面的class names,这里填的什么,那么上面配置的yaml文件也要一样。

二、模型训练

yolov8的源代码:

https://github.com/ultralytics/ultralytics

-

首先安装yolov8运行所依赖的库

pip install ultralytics -

根据代码进行

首先下载一个预训练模型:https://docs.ultralytics.com/tasks/detect/

from ultralytics import YOLO

# Load a models

model = YOLO("D:\MyProject\yolov8s.pt") # load a pretrained model (recommended for training)

# Use the model

model.train(data="D:\MyProject\data\myData.yaml", epochs=3) # train the model

metrics = model.val() # evaluate model performance on the validation set

results = model("https://ultralytics.com/images/bus.jpg") # predict on an image

path = model.export(format="onnx") # export the model to ONNX format

将前面标记好的数据放在路径下,配置好myData.yaml,如下:

三、模型部署

3.1 onnx转engine

首先把前面训练好的模型pt通过model.export(format="onnx")转换成onnx。

工程文件如下:

环境配置(一):需要配置anaconda、opencv、cuda以及tensorRT。

tensorRT的安装与使用:链接

环境配置(二):

环境配置(三):

opencv_world455.lib

myelin64_1.lib

nvinfer.lib

nvonnxparser.lib

nvparsers.lib

nvinfer_plugin.lib

cuda.lib

cudadevrt.lib

cudart_static.lib

由于x64中Release里面的依赖项太大,所以进行了分卷上传

yolov8使用tensorRT部署的环境依赖项(一)

yolov8使用tensorRT部署的环境依赖项(二)

将上面两个压缩包下载后放到一个文件夹里面,直接解压001,就可以将两个压缩包里面的依赖项全部解压出来。把解压的dll所有文件复制到/x64/Release这个路径下。

代码执行:

#include <iostream>

#include "logging.h"

#include "NvOnnxParser.h"

#include "NvInfer.h"

#include <fstream>

using namespace nvinfer1;

using namespace nvonnxparser;

static Logger gLogger;

int main(int argc, char** argv) {

const char* onnx_filename = "D://TR_YOLOV8_DLL//zy_onnx2engine//models//best.onnx";

const char* engine_filename = "D://TR_YOLOV8_DLL//zy_onnx2engine//models//best.engine";

// 1 onnx解析器

IBuilder* builder = createInferBuilder(gLogger);

const auto explicitBatch = 1U << static_cast<uint32_t>(NetworkDefinitionCreationFlag::kEXPLICIT_BATCH);

INetworkDefinition* network = builder->createNetworkV2(explicitBatch);

nvonnxparser::IParser* parser = nvonnxparser::createParser(*network, gLogger);

parser->parseFromFile(onnx_filename, static_cast<int>(Logger::Severity::kWARNING));

for (int i = 0; i < parser->getNbErrors(); ++i)

{

std::cout << parser->getError(i)->desc() << std::endl;

}

std::cout << "successfully load the onnx model" << std::endl;

// 2build the engine

unsigned int maxBatchSize = 1;

builder->setMaxBatchSize(maxBatchSize);

IBuilderConfig* config = builder->createBuilderConfig();

config->setMaxWorkspaceSize(1 << 20);

//config->setMaxWorkspaceSize(128 * (1 << 20)); // 16MB

config->setFlag(BuilderFlag::kFP16);

ICudaEngine* engine = builder->buildEngineWithConfig(*network, *config);

// 3serialize Model

IHostMemory* gieModelStream = engine->serialize();

std::ofstream p(engine_filename, std::ios::binary);

if (!p)

{

std::cerr << "could not open plan output file" << std::endl;

return -1;

}

p.write(reinterpret_cast<const char*>(gieModelStream->data()), gieModelStream->size());

gieModelStream->destroy();

std::cout << "successfully generate the trt engine model" << std::endl;

return 0;

}

完整项目:https://download.csdn.net/download/qq_44747572/88791740

3.2 c++调用engine模型

这里的两个工程环境部署都跟上面部署的方式一样。进行重复的动作即可

3.2.1 main_tensorRT.cpp

// Xray_test.cpp : 定义控制台应用程序的入口点。

#define _AFXDLL

#include <iomanip>

#include <string>

#include <fstream>

#include "opencv2/core/core.hpp"

#include "opencv2/highgui/highgui.hpp"

#include <io.h>

#include "segmentationModel.h"

// stuff we know about the network and the input/output blobs

#define input_h 640

#define input_w 640

#define channel 3

#define classe 2 // 80个类

#define segWidth 160

#define segHeight 160

#define segChannels 32

#define Num_box 34000 //8400 1280 33600

MODELDLL predictClasse;

#pragma comment(lib, "..//x64//Release//segmentationModel.lib")

using namespace cv;

using namespace std;

int main(int argc, char** argv)

{

//检测测试

string engine_filename = "best.engine";

string img_filename = "imgs//";

predictClasse.LoadYoloV8DetectEngine(engine_filename);

string pattern_jpg = img_filename + "*.jpg"; // test_images

vector<cv::String> image_files;

glob(pattern_jpg, image_files);

vector<ObjectTR> output;

float confTh = 0.25;

for (int i = 0; i < image_files.size(); i++)

{

Mat src = imread(image_files[i], 1);

Mat dst;

clock_t start = clock();

predictClasse.YoloV8DetectPredict(src, dst, channel, classe, input_h, input_w, Num_box, confTh, output);

clock_t end = clock();

std::cout << "总时间:" << end - start << "ms" << std::endl;

cv::namedWindow("output.jpg", 0);

cv::imshow("output.jpg", dst);

cv::waitKey(0);

}

分割测试

//string engine_filename = "yolov8n-seg.engine";

//string img_filename = "imgs//bus.jpg";

//predictClasse.LoadYoloV8SegEngine(engine_filename);

//Mat src = imread(img_filename, 1);

//Mat dst;

//predictClasse.YoloV8SegPredict(src, dst, channel, classe, input_h, input_w, segChannels, segWidth, segHeight, Num_box);

//cv::imshow("output.jpg", dst);

//cv::waitKey(0);

return 0;

}

3.2.2 segmentationModel.cpp

#include "pch.h"

#include "segmentationModel.h"

#define DEVICE 0 // GPU id

static const float CONF_THRESHOLD = 0.25;

static const float NMS_THRESHOLD = 0.5;

static const float MASK_THRESHOLD = 0.5;

const char* INPUT_BLOB_NAME = "images";

const char* OUTPUT_BLOB_NAME = "output0";//detect

const char* OUTPUT_BLOB_NAME1 = "output1";//mask

static Logger gLogger;

IRuntime* runtimeYolov8Seg;

ICudaEngine* engineYolov8Seg;

IExecutionContext* contextYolov8Seg;

MODELDLL::MODELDLL()

{

}

MODELDLL::~MODELDLL()

{

}

//yolov8检测推理

bool MODELDLL::LoadYoloV8DetectEngine(const std::string& engineName)

{

// create a model using the API directly and serialize it to a stream

char* trtModelStream{ nullptr }; //char* trtModelStream==nullptr; 开辟空指针后 要和new配合使用,比如 trtModelStream = new char[size]

size_t size{ 0 };//与int固定四个字节不同有所不同,size_t的取值range是目标平台下最大可能的数组尺寸,一些平台下size_t的范围小于int的正数范围,又或者大于unsigned int. 使用Int既有可能浪费,又有可能范围不够大。

std::ifstream file(engineName, std::ios::binary);

if (file.good()) {

std::cout << "load engine success" << std::endl;

file.seekg(0, file.end);//指向文件的最后地址

size = file.tellg();//把文件长度告诉给size

//std::cout << "\nfile:" << argv[1] << " size is";

//std::cout << size << "";

file.seekg(0, file.beg);//指回文件的开始地址

trtModelStream = new char[size];//开辟一个char 长度是文件的长度

assert(trtModelStream);//

file.read(trtModelStream, size);//将文件内容传给trtModelStream

file.close();//关闭

}

else {

std::cout << "load engine failed" << std::endl;

return 1;

}

runtimeYolov8Seg = createInferRuntime(gLogger);

assert(runtimeYolov8Seg != nullptr);

bool didInitPlugins = initLibNvInferPlugins(nullptr, "");

engineYolov8Seg = runtimeYolov8Seg->deserializeCudaEngine(trtModelStream, size, nullptr);

assert(engineYolov8Seg != nullptr);

contextYolov8Seg = engineYolov8Seg->createExecutionContext();

assert(contextYolov8Seg != nullptr);

delete[] trtModelStream;

return true;

}

bool MODELDLL::YoloV8DetectPredict(const Mat& src, Mat& dst, const int& channel, const int& classe, const int& input_h, const int& input_w, const int& Num_box, float& CONF_THRESHOLD, vector<ObjectTR>& output)

{

cudaSetDevice(DEVICE);

if (src.empty()) { std::cout << "image load faild" << std::endl; return 1; }

int img_width = src.cols;

int img_height = src.rows;

std::cout << "宽高:" << img_width << " " << img_height << std::endl;

// Subtract mean from image

float* data = new float[channel * input_h * input_w];

Mat pr_img0, pr_img;

std::vector<int> padsize;

Mat tempImg = src.clone();

pr_img = preprocess_img(tempImg, input_h, input_w, padsize); // Resize

int newh = padsize[0], neww = padsize[1], padh = padsize[2], padw = padsize[3];

float ratio_h = (float)src.rows / newh;

float ratio_w = (float)src.cols / neww;

int i = 0;// [1,3,INPUT_H,INPUT_W]

//std::cout << "pr_img.step" << pr_img.step << std::endl;

clock_t start_p = clock();

for (int row = 0; row < input_h; ++row) {

uchar* uc_pixel = pr_img.data + row * pr_img.step;//pr_img.step=widthx3 就是每一行有width个3通道的值

for (int col = 0; col < input_w; ++col)

{

data[i] = (float)uc_pixel[2] / 255.0;

data[i + input_h * input_w] = (float)uc_pixel[1] / 255.0;

data[i + 2 * input_h * input_w] = (float)uc_pixel[0] / 255.;

uc_pixel += 3;

++i;

}

}

//优化一:从30多ms降速到20多,仅提速10ms左右,效果不明显

//#pragma omp parallel for

// for (int row = 0; row < input_h; ++row) {

// const uchar* uc_pixel = pr_img.data + row * pr_img.step;

// int i = row * input_w;

// for (int col = 0; col < input_w; ++col) {

// float r = static_cast<float>(uc_pixel[2]) / 255.0f;

// float g = static_cast<float>(uc_pixel[1]) / 255.0f;

// float b = static_cast<float>(uc_pixel[0]) / 255.0f;

// data[i] = r;

// data[i + input_h * input_w] = g;

// data[i + 2 * input_h * input_w] = b;

// uc_pixel += 3;

// ++i;

// }

// }

clock_t end_p = clock();

std::cout << "preprocess_img时间:" << end_p - start_p << "ms" << std::endl;

// Run inference

static const int OUTPUT_SIZE = Num_box * (classe + 4);//output0

float* prob = new float[OUTPUT_SIZE];

//for (int i = 0; i < 10; i++) {//计算10次的推理速度

// auto start = std::chrono::system_clock::now();

// doInference(*context, data, prob, prob1, 1);

// auto end = std::chrono::system_clock::now();

// std::cout << std::chrono::duration_cast<std::chrono::milliseconds>(end - start).count() << "ms" << std::endl;

// }

//auto start = std::chrono::system_clock::now();

clock_t start = clock();

//推理

int batchSize = 1;

const ICudaEngine& engine = (*contextYolov8Seg).getEngine();

// Pointers to input and output device buffers to pass to engine.

// Engine requires exactly IEngine::getNbBindings() number of buffers.

assert(engine.getNbBindings() == 3);

void* buffers[3];

// In order to bind the buffers, we need to know the names of the input and output tensors.

// Note that indices are guaranteed to be less than IEngine::getNbBindings()

const int inputIndex = engine.getBindingIndex(INPUT_BLOB_NAME);

const int outputIndex = engine.getBindingIndex(OUTPUT_BLOB_NAME);

// Create GPU buffers on device

CHECK(cudaMalloc(&buffers[inputIndex], batchSize * 3 * input_h * input_w * sizeof(float)));//

CHECK(cudaMalloc(&buffers[outputIndex], batchSize * OUTPUT_SIZE * sizeof(float)));

// cudaMalloc分配内存 cudaFree释放内存 cudaMemcpy或 cudaMemcpyAsync 在主机和设备之间传输数据

// cudaMemcpy cudaMemcpyAsync 显式地阻塞传输 显式地非阻塞传输

// Create stream

cudaStream_t stream;

CHECK(cudaStreamCreate(&stream));

// DMA input batch data to device, infer on the batch asynchronously, and DMA output back to host

CHECK(cudaMemcpyAsync(buffers[inputIndex], data, batchSize * 3 * input_h * input_w * sizeof(float), cudaMemcpyHostToDevice, stream));

(*contextYolov8Seg).enqueue(batchSize, buffers, stream, nullptr);

CHECK(cudaMemcpyAsync(prob, buffers[outputIndex], batchSize * OUTPUT_SIZE * sizeof(float), cudaMemcpyDeviceToHost, stream));

cudaStreamSynchronize(stream);

// Release stream and buffers

cudaStreamDestroy(stream);

CHECK(cudaFree(buffers[inputIndex]));

CHECK(cudaFree(buffers[outputIndex]));

//

//auto end = std::chrono::system_clock::now();

clock_t end = clock();

//std::cout << "推理时间:" << std::chrono::duration_cast<std::chrono::milliseconds>(end - start).count() << "ms" << std::endl;

std::cout << "推理时间:" << end - start << "ms" << std::endl;

std::vector<int> classIds;//结果id数组

std::vector<float> confidences;//结果每个id对应置信度数组

std::vector<cv::Rect> boxes;//每个id矩形框

// 处理box

int net_length = classe + 4;

cv::Mat out1 = cv::Mat(net_length, Num_box, CV_32F, prob);

//start = std::chrono::system_clock::now();

start = clock();

for (int i = 0; i < Num_box; i++) {

//输出是1*net_length*Num_box;所以每个box的属性是每隔Num_box取一个值,共net_length个值

cv::Mat scores = out1(Rect(i, 4, 1, classe)).clone();

Point classIdPoint;

double max_class_socre;

minMaxLoc(scores, 0, &max_class_socre, 0, &classIdPoint);

max_class_socre = (float)max_class_socre;

if (max_class_socre >= CONF_THRESHOLD) {

float x = (out1.at<float>(0, i) - padw) * ratio_w; //cx

float y = (out1.at<float>(1, i) - padh) * ratio_h; //cy

float w = out1.at<float>(2, i) * ratio_w; //w

float h = out1.at<float>(3, i) * ratio_h; //h

int left = MAX((x - 0.5 * w), 0);

int top = MAX((y - 0.5 * h), 0);

int width = (int)w;

int height = (int)h;

if (width <= 0 || height <= 0) { continue; }

classIds.push_back(classIdPoint.y);

confidences.push_back(max_class_socre);

boxes.push_back(Rect(left, top, width, height));

}

}

//执行非最大抑制以消除具有较低置信度的冗余重叠框(NMS)

std::vector<int> nms_result;

cv::dnn::NMSBoxes(boxes, confidences, CONF_THRESHOLD, NMS_THRESHOLD, nms_result);

ObjectTR result;

Rect holeImgRect(0, 0, src.cols, src.rows);

for (int i = 0; i < nms_result.size(); ++i) {

int idx = nms_result[i];

result.classid = classIds[idx];

result.prob = confidences[idx];

result.rect = boxes[idx] & holeImgRect;

output.push_back(result);

}

//end = std::chrono::system_clock::now();

end = clock();

std::cout << "后处理时间:" << end - start << "ms" << std::endl;

Mat finalImg = src.clone();

DrawPredDetect(finalImg, classe, output);

dst = finalImg.clone();

// Destroy the engine

/* contextYolov8Seg->destroy();

engineYolov8Seg->destroy();

runtimeYolov8Seg->destroy();*/

delete data;

delete prob;

return 0;

}

void MODELDLL::DrawPredDetect(const Mat& img, const int& classe, std::vector<ObjectTR> result) {

//生成随机颜色

std::vector<Scalar> color;

srand(time(0));

for (int i = 0; i < classe; i++) {

int b = rand() % 256;

int g = rand() % 256;

int r = rand() % 256;

color.push_back(Scalar(b, g, r));

}

for (int i = 0; i < result.size(); i++) {

int left, top;

left = result[i].rect.x;

top = result[i].rect.y;

int color_num = i;

rectangle(img, result[i].rect, Scalar(0, 0, 255), 2, 8);

//rectangle(img, result[i].box, color[result[i].id], 2, 8);

char label[100];

sprintf(label, "%d:%.2f", result[i].classid, result[i].prob);

int baseLine;

Size labelSize = getTextSize(label, FONT_HERSHEY_SIMPLEX, 0.5, 1, &baseLine);

top = max(top, labelSize.height);

/*putText(img, label, Point(left, top), FONT_HERSHEY_SIMPLEX, 1, color[result[i].id], 2);*/

putText(img, label, Point(left, top), FONT_HERSHEY_SIMPLEX, 1, Scalar(0, 0, 255), 2);

}

}

// yolov8分割推理

bool MODELDLL::LoadYoloV8SegEngine(const std::string& engineName)

{

// create a model using the API directly and serialize it to a stream

char* trtModelStream{ nullptr }; //char* trtModelStream==nullptr; 开辟空指针后 要和new配合使用,比如 trtModelStream = new char[size]

size_t size{ 0 };//与int固定四个字节不同有所不同,size_t的取值range是目标平台下最大可能的数组尺寸,一些平台下size_t的范围小于int的正数范围,又或者大于unsigned int. 使用Int既有可能浪费,又有可能范围不够大。

std::ifstream file(engineName, std::ios::binary);

if (file.good()) {

std::cout << "load engine success" << std::endl;

file.seekg(0, file.end);//指向文件的最后地址

size = file.tellg();//把文件长度告诉给size

//std::cout << "\nfile:" << argv[1] << " size is";

//std::cout << size << "";

file.seekg(0, file.beg);//指回文件的开始地址

trtModelStream = new char[size];//开辟一个char 长度是文件的长度

assert(trtModelStream);//

file.read(trtModelStream, size);//将文件内容传给trtModelStream

file.close();//关闭

}

else {

std::cout << "load engine failed" << std::endl;

return 1;

}

runtimeYolov8Seg = createInferRuntime(gLogger);

assert(runtimeYolov8Seg != nullptr);

bool didInitPlugins = initLibNvInferPlugins(nullptr, "");

engineYolov8Seg = runtimeYolov8Seg->deserializeCudaEngine(trtModelStream, size, nullptr);

assert(engineYolov8Seg != nullptr);

contextYolov8Seg = engineYolov8Seg->createExecutionContext();

assert(contextYolov8Seg != nullptr);

delete[] trtModelStream;

return true;

}

bool MODELDLL::YoloV8SegPredict(const Mat& src, Mat& dst, const int& channel, const int& classe, const int& input_h, const int& input_w,

const int& segChannels, const int& segWidth, const int& segHeight, const int& Num_box)

{

cudaSetDevice(DEVICE);

if (src.empty()) { std::cout << "image load faild" << std::endl; return 1; }

int img_width = src.cols;

int img_height = src.rows;

std::cout << "宽高:" << img_width << " " << img_height << std::endl;

// Subtract mean from image

float* data = new float[channel * input_h * input_w];

Mat pr_img0, pr_img;

std::vector<int> padsize;

Mat tempImg = src.clone();

pr_img = preprocess_img(tempImg, input_h, input_w, padsize); // Resize

int newh = padsize[0], neww = padsize[1], padh = padsize[2], padw = padsize[3];

float ratio_h = (float)src.rows / newh;

float ratio_w = (float)src.cols / neww;

int i = 0;// [1,3,INPUT_H,INPUT_W]

//std::cout << "pr_img.step" << pr_img.step << std::endl;

for (int row = 0; row < input_h; ++row) {

uchar* uc_pixel = pr_img.data + row * pr_img.step;//pr_img.step=widthx3 就是每一行有width个3通道的值

for (int col = 0; col < input_w; ++col)

{

data[i] = (float)uc_pixel[2] / 255.0;

data[i + input_h * input_w] = (float)uc_pixel[1] / 255.0;

data[i + 2 * input_h * input_w] = (float)uc_pixel[0] / 255.;

uc_pixel += 3;

++i;

}

}

// Run inference

static const int OUTPUT_SIZE = Num_box * (classe + 4 + segChannels);//output0

static const int OUTPUT_SIZE1 = segChannels * segWidth * segHeight;//output1

float* prob = new float[OUTPUT_SIZE];

float* prob1 = new float[OUTPUT_SIZE1];

//for (int i = 0; i < 10; i++) {//计算10次的推理速度

// auto start = std::chrono::system_clock::now();

// doInference(*context, data, prob, prob1, 1);

// auto end = std::chrono::system_clock::now();

// std::cout << std::chrono::duration_cast<std::chrono::milliseconds>(end - start).count() << "ms" << std::endl;

// }

auto start = std::chrono::system_clock::now();

//推理

int batchSize = 1;

const ICudaEngine& engine = (*contextYolov8Seg).getEngine();

// Pointers to input and output device buffers to pass to engine.

// Engine requires exactly IEngine::getNbBindings() number of buffers.

assert(engine.getNbBindings() == 3);

void* buffers[3];

// In order to bind the buffers, we need to know the names of the input and output tensors.

// Note that indices are guaranteed to be less than IEngine::getNbBindings()

const int inputIndex = engine.getBindingIndex(INPUT_BLOB_NAME);

const int outputIndex = engine.getBindingIndex(OUTPUT_BLOB_NAME);

const int outputIndex1 = engine.getBindingIndex(OUTPUT_BLOB_NAME1);

// Create GPU buffers on device

CHECK(cudaMalloc(&buffers[inputIndex], batchSize * 3 * input_h * input_w * sizeof(float)));//

CHECK(cudaMalloc(&buffers[outputIndex], batchSize * OUTPUT_SIZE * sizeof(float)));

CHECK(cudaMalloc(&buffers[outputIndex1], batchSize * OUTPUT_SIZE1 * sizeof(float)));

// cudaMalloc分配内存 cudaFree释放内存 cudaMemcpy或 cudaMemcpyAsync 在主机和设备之间传输数据

// cudaMemcpy cudaMemcpyAsync 显式地阻塞传输 显式地非阻塞传输

// Create stream

cudaStream_t stream;

CHECK(cudaStreamCreate(&stream));

// DMA input batch data to device, infer on the batch asynchronously, and DMA output back to host

CHECK(cudaMemcpyAsync(buffers[inputIndex], data, batchSize * 3 * input_h * input_w * sizeof(float), cudaMemcpyHostToDevice, stream));

(*contextYolov8Seg).enqueue(batchSize, buffers, stream, nullptr);

CHECK(cudaMemcpyAsync(prob, buffers[outputIndex], batchSize * OUTPUT_SIZE * sizeof(float), cudaMemcpyDeviceToHost, stream));

CHECK(cudaMemcpyAsync(prob1, buffers[outputIndex1], batchSize * OUTPUT_SIZE1 * sizeof(float), cudaMemcpyDeviceToHost, stream));

cudaStreamSynchronize(stream);

// Release stream and buffers

cudaStreamDestroy(stream);

CHECK(cudaFree(buffers[inputIndex]));

CHECK(cudaFree(buffers[outputIndex]));

CHECK(cudaFree(buffers[outputIndex1]));

//

auto end = std::chrono::system_clock::now();

std::cout << "推理时间:" << std::chrono::duration_cast<std::chrono::milliseconds>(end - start).count() << "ms" << std::endl;

std::vector<int> classIds;//结果id数组

std::vector<float> confidences;//结果每个id对应置信度数组

std::vector<cv::Rect> boxes;//每个id矩形框

std::vector<cv::Mat> picked_proposals; //后续计算mask

// 处理box

int net_length = classe + 4 + segChannels;

cv::Mat out1 = cv::Mat(net_length, Num_box, CV_32F, prob);

start = std::chrono::system_clock::now();

for (int i = 0; i < Num_box; i++) {

//输出是1*net_length*Num_box;所以每个box的属性是每隔Num_box取一个值,共net_length个值

cv::Mat scores = out1(Rect(i, 4, 1, classe)).clone();

Point classIdPoint;

double max_class_socre;

minMaxLoc(scores, 0, &max_class_socre, 0, &classIdPoint);

max_class_socre = (float)max_class_socre;

if (max_class_socre >= CONF_THRESHOLD) {

cv::Mat temp_proto = out1(Rect(i, 4 + classe, 1, segChannels)).clone();

picked_proposals.push_back(temp_proto.t());

float x = (out1.at<float>(0, i) - padw) * ratio_w; //cx

float y = (out1.at<float>(1, i) - padh) * ratio_h; //cy

float w = out1.at<float>(2, i) * ratio_w; //w

float h = out1.at<float>(3, i) * ratio_h; //h

int left = MAX((x - 0.5 * w), 0);

int top = MAX((y - 0.5 * h), 0);

int width = (int)w;

int height = (int)h;

if (width <= 0 || height <= 0) { continue; }

classIds.push_back(classIdPoint.y);

confidences.push_back(max_class_socre);

boxes.push_back(Rect(left, top, width, height));

}

}

//执行非最大抑制以消除具有较低置信度的冗余重叠框(NMS)

std::vector<int> nms_result;

cv::dnn::NMSBoxes(boxes, confidences, CONF_THRESHOLD, NMS_THRESHOLD, nms_result);

std::vector<cv::Mat> temp_mask_proposals;

std::vector<OutputSeg> output;

Rect holeImgRect(0, 0, src.cols, src.rows);

for (int i = 0; i < nms_result.size(); ++i) {

int idx = nms_result[i];

OutputSeg result;

result.id = classIds[idx];

result.confidence = confidences[idx];

result.box = boxes[idx] & holeImgRect;

output.push_back(result);

temp_mask_proposals.push_back(picked_proposals[idx]);

}

// 处理mask

Mat maskProposals;

for (int i = 0; i < temp_mask_proposals.size(); ++i)

maskProposals.push_back(temp_mask_proposals[i]);

Mat protos = Mat(segChannels, segWidth * segHeight, CV_32F, prob1);

Mat matmulRes = (maskProposals * protos).t();//n*32 32*25600 A*B是以数学运算中矩阵相乘的方式实现的,要求A的列数等于B的行数时

Mat masks = matmulRes.reshape(output.size(), { segWidth, segHeight});//n*160*160

std::vector<Mat> maskChannels;

cv::split(masks, maskChannels);

Rect roi(int((float)padw / input_w * segWidth), int((float)padh / input_h * segHeight), int(segWidth - padw / 2), int(segHeight - padh / 2));

for (int i = 0; i < output.size(); ++i) {

Mat dest, mask;

cv::exp(-maskChannels[i], dest);//sigmoid

dest = 1.0 / (1.0 + dest);//160*160

dest = dest(roi);

resize(dest, mask, cv::Size(src.cols, src.rows), INTER_NEAREST);

//crop----截取box中的mask作为该box对应的mask

Rect temp_rect = output[i].box;

mask = mask(temp_rect) > MASK_THRESHOLD;

output[i].boxMask = mask;

}

end = std::chrono::system_clock::now();

std::cout << "后处理时间:" << std::chrono::duration_cast<std::chrono::milliseconds>(end - start).count() << "ms" << std::endl;

Mat finalImg = src.clone();

DrawPred(finalImg, classe, output);

dst = finalImg.clone();

// Destroy the engine

contextYolov8Seg->destroy();

engineYolov8Seg->destroy();

runtimeYolov8Seg->destroy();

delete data;

delete prob;

delete prob1;

return 0;

}

void MODELDLL::DrawPred(const Mat& img, const int& classe, std::vector<OutputSeg> result) {

//生成随机颜色

std::vector<Scalar> color;

srand(time(0));

for (int i = 0; i < classe; i++) {

int b = rand() % 256;

int g = rand() % 256;

int r = rand() % 256;

color.push_back(Scalar(b, g, r));

}

Mat mask = img.clone();

for (int i = 0; i < result.size(); i++) {

int left, top;

left = result[i].box.x;

top = result[i].box.y;

int color_num = i;

rectangle(img, result[i].box, color[result[i].id], 2, 8);

mask(result[i].box).setTo(color[result[i].id], result[i].boxMask);

char label[100];

sprintf(label, "%d:%.2f", result[i].id, result[i].confidence);

//std::string label = std::to_string(result[i].id) + ":" + std::to_string(result[i].confidence);

int baseLine;

Size labelSize = getTextSize(label, FONT_HERSHEY_SIMPLEX, 0.5, 1, &baseLine);

top = max(top, labelSize.height);

putText(img, label, Point(left, top), FONT_HERSHEY_SIMPLEX, 1, color[result[i].id], 2);

}

addWeighted(img, 0.5, mask, 0.8, 1, img); //将mask加在原图上面

}

完整项目:https://download.csdn.net/download/qq_44747572/88791748

3.3 c++动态调用engine模型

3.3.1 onnx2engine

#include <iostream>

#include "NvInfer.h"

#include "NvOnnxParser.h"

#include "logging.h"

#include "opencv2/opencv.hpp"

#include <fstream>

#include <sstream>

#include "cuda_runtime_api.h"

static Logger gLogger;

using namespace nvinfer1;

bool saveEngine(const ICudaEngine& engine, const std::string& fileName)

{

std::ofstream engineFile(fileName, std::ios::binary);

if (!engineFile)

{

std::cout << "Cannot open engine file: " << fileName << std::endl;

return false;

}

IHostMemory* serializedEngine = engine.serialize();

if (serializedEngine == nullptr)

{

std::cout << "Engine serialization failed" << std::endl;

return false;

}

engineFile.write(static_cast<char*>(serializedEngine->data()), serializedEngine->size());

return !engineFile.fail();

}

void print_dims(const nvinfer1::Dims& dim)

{

for (int nIdxShape = 0; nIdxShape < dim.nbDims; ++nIdxShape)

{

printf("dim %d=%d\n", nIdxShape, dim.d[nIdxShape]);

}

}

int main()

{

const char* pchModelPth = "D://Users//6536//Desktop//TR_YOLOV8_DLL//zy_onnx2engine_batch//models_batch//best.onnx";

const char* strTrtSavedPath = "D://Users//6536//Desktop//TR_YOLOV8_DLL//zy_onnx2engine_batch//models_batch//best.engine";

// 1、创建一个builder

IBuilder* pBuilder = createInferBuilder(gLogger);

// 2、 创建一个 network,要求网络结构里,没有隐藏的批量处理维度

INetworkDefinition* pNetwork = pBuilder->createNetworkV2(1U << static_cast<int>(NetworkDefinitionCreationFlag::kEXPLICIT_BATCH));

// 3、 创建一个配置文件

nvinfer1::IBuilderConfig* config = pBuilder->createBuilderConfig();

// 4、 设置profile,这里动态batch专属

IOptimizationProfile* profile = pBuilder->createOptimizationProfile();

// 这里有个OptProfileSelector,这个用来设置优化的参数,比如(Tensor的形状或者动态尺寸),

profile->setDimensions("images", OptProfileSelector::kMIN, Dims4(1, 3, 640, 640));

profile->setDimensions("images", OptProfileSelector::kOPT, Dims4(2, 3, 640, 640));

profile->setDimensions("images", OptProfileSelector::kMAX, Dims4(4, 3, 640, 640));

config->addOptimizationProfile(profile);

auto parser = nvonnxparser::createParser(*pNetwork, gLogger.getTRTLogger());

if (!parser->parseFromFile(pchModelPth, static_cast<int>(gLogger.getReportableSeverity())))

{

printf("解析onnx模型失败\n");

}

int maxBatchSize = 4;

config->setMaxWorkspaceSize(1 << 32);

pBuilder->setMaxBatchSize(maxBatchSize);

//设置推理模式

config->setFlag(BuilderFlag::kFP16);

ICudaEngine* engine = pBuilder->buildEngineWithConfig(*pNetwork, *config);

// 序列化保存模型

saveEngine(*engine, strTrtSavedPath);

nvinfer1::Dims dim = engine->getBindingDimensions(0);

// 打印维度

print_dims(dim);

}

3.3.1 engineMain.cpp

// Xray_test.cpp : 定义控制台应用程序的入口点。

#define _AFXDLL

#include <iomanip>

#include <string>

#include <fstream>

#include "opencv2/core/core.hpp"

#include "opencv2/highgui/highgui.hpp"

#include <io.h>

#include "segmentationModel.h"

// stuff we know about the network and the input/output blobs

#define input_h 640

#define input_w 640

#define channel 3

#define classe 2 // 80个类

#define segWidth 160

#define segHeight 160

#define segChannels 32

#define Num_box 8400 //640 8400 1280 33600

MODELDLL predictClasse;

#pragma comment(lib, "..//x64//Release//segmentationModel.lib")

using namespace cv;

using namespace std;

int main(int argc, char** argv)

{

//检测测试

string engine_filename = "D://Users//6536//Desktop//TR_YOLOV8_DLL//zy_Xray_inspection_batch//models//best.trt";

string img_filename = "D://Users//6536//Desktop//TR_YOLOV8_DLL//zy_Xray_inspection_batch//imgs//";

predictClasse.LoadYoloV8DetectEngine(engine_filename);

string pattern_jpg = img_filename + "*.jpg"; // test_images

vector<cv::String> image_files;

glob(pattern_jpg, image_files);

vector<Mat> vecImg;

vector<Mat> vecDst;

float confTh = 0.25;

int batch = 1;

for (int i = 0; i < image_files.size(); i = i + batch)

{

for (int k = i; k < i + batch; k++)

{

if (k >= image_files.size())

break;

Mat src = imread(image_files[k], 1);

vecImg.push_back(src);

}

vector<vector<ObjectTR>> output;

clock_t start = clock();

predictClasse.YoloV8DetectPredict(vecImg, vecDst, channel, classe, input_h, input_w, Num_box, confTh, output);

clock_t end = clock();

std::cout << "总时间:" << end - start << "ms" << std::endl;

/*cv::namedWindow("output.jpg", 0);

cv::imshow("output.jpg", vecDst[0]);

cv::waitKey(0);*/

vecImg.clear();

vecDst.clear();

}

分割测试

//string engine_filename = "D://Users//6536//Desktop//TR_YOLOV8_DLL//zy_Xray_inspection//models//yolov8n-seg.engine";

//string img_filename = "D://Users//6536//Desktop//TR_YOLOV8_DLL//zy_Xray_inspectionimgs//bus.jpg";

//predictClasse.LoadYoloV8SegEngine(engine_filename);

//Mat src = imread(img_filename, 1);

//Mat dst;

//predictClasse.YoloV8SegPredict(src, dst, channel, classe, input_h, input_w, segChannels, segWidth, segHeight, Num_box);

//cv::imshow("output.jpg", dst);

//cv::waitKey(0);

return 0;

}

3.3.2 engineInferDll

3.3.2.1 Infer.cpp

#include "pch.h"

#include "segmentationModel.h"

#define DEVICE 1 // GPU id

static const float CONF_THRESHOLD = 0.25;

static const float NMS_THRESHOLD = 0.5;

static const float MASK_THRESHOLD = 0.5;

const char* INPUT_BLOB_NAME = "images";

const char* OUTPUT_BLOB_NAME = "output0";//detect

const char* OUTPUT_BLOB_NAME1 = "output1";//mask

static Logger gLogger;

IRuntime* runtimeYolov8Seg;

ICudaEngine* engineYolov8Seg;

IExecutionContext* contextYolov8Seg;

MODELDLL::MODELDLL()

{

}

MODELDLL::~MODELDLL()

{

}

//yolov8检测推理

bool MODELDLL::LoadYoloV8DetectEngine(const std::string& engineName)

{

// create a model using the API directly and serialize it to a stream

char* trtModelStream{ nullptr }; //char* trtModelStream==nullptr; 开辟空指针后 要和new配合使用,比如 trtModelStream = new char[size]

size_t size{ 0 };//与int固定四个字节不同有所不同,size_t的取值range是目标平台下最大可能的数组尺寸,一些平台下size_t的范围小于int的正数范围,又或者大于unsigned int. 使用Int既有可能浪费,又有可能范围不够大。

std::ifstream file(engineName, std::ios::binary);

if (file.good()) {

std::cout << "load engine success" << std::endl;

file.seekg(0, file.end);//指向文件的最后地址

size = file.tellg();//把文件长度告诉给size

//std::cout << "\nfile:" << argv[1] << " size is";

//std::cout << size << "";

file.seekg(0, file.beg);//指回文件的开始地址

trtModelStream = new char[size];//开辟一个char 长度是文件的长度

assert(trtModelStream);//

file.read(trtModelStream, size);//将文件内容传给trtModelStream

file.close();//关闭

}

else {

std::cout << "load engine failed" << std::endl;

return 1;

}

runtimeYolov8Seg = createInferRuntime(gLogger);

assert(runtimeYolov8Seg != nullptr);

bool didInitPlugins = initLibNvInferPlugins(nullptr, "");

engineYolov8Seg = runtimeYolov8Seg->deserializeCudaEngine(trtModelStream, size, nullptr);

assert(engineYolov8Seg != nullptr);

contextYolov8Seg = engineYolov8Seg->createExecutionContext();

assert(contextYolov8Seg != nullptr);

delete[] trtModelStream;

return true;

}

bool MODELDLL::YoloV8DetectPredict(const vector<Mat>& vecImg, vector<Mat>& vecDst, const int& channel, const int& classe, const int& input_h, const int& input_w, const int& Num_box, float& CONF_THRESHOLD, vector<vector<ObjectTR>>& output)

{

cudaSetDevice(DEVICE);

if (vecImg.size() == 0)

return false;

clock_t start_p = clock();

int maxBatchSize = vecImg.size();

// Subtract mean from image

std::vector<float*> dataVec;

dataVec.resize(maxBatchSize);

for (int i = 0; i < maxBatchSize; ++i)

{

dataVec[i] = new float[1 * channel * input_h * input_w];

}

int nInputSize = channel * input_h * input_w * sizeof(float);

Mat pr_img;

std::vector<int> padsize;

vector<vector<int>> vecPadSize;

for (int j = 0; j < vecImg.size(); j++)

{

Mat src = vecImg[j];

if (src.empty()) { std::cout << "image load faild" << std::endl; return 1; }

int img_width = src.cols;

int img_height = src.rows;

std::cout << "宽高:" << img_width << " " << img_height << std::endl;

Mat tempImg = src.clone();

pr_img = preprocess_img(tempImg, input_h, input_w, padsize); // Resize

vecPadSize.push_back(padsize);

int i = 0;// [1,3,INPUT_H,INPUT_W]

//pr_img.convertTo(pr_img, CV_32FC3, 1.0f / 255.0f);

for (int row = 0; row < input_h; ++row) {

uchar* uc_pixel = pr_img.data + row * pr_img.step;//pr_img.step=widthx3 就是每一行有width个3通道的值

for (int col = 0; col < input_w; ++col)

{

dataVec[j][i] = (float)uc_pixel[2] / 255.0;

dataVec[j][i + input_h * input_w] = (float)uc_pixel[1] / 255.0;

dataVec[j][i + 2 * input_h * input_w] = (float)uc_pixel[0] / 255.;

uc_pixel += 3;

++i;

}

}

}

clock_t end_p = clock();

std::cout << "预处理时间:" << end_p - start_p << "ms" << std::endl;

// Run inference

int nOutputSize = Num_box * (classe + 4) * sizeof(float);

float* prob = new float[maxBatchSize * Num_box * (classe + 4)];

std::vector<float*> probVec;

probVec.resize(maxBatchSize);

for (int i = 0; i < maxBatchSize; ++i)

{

probVec[i] = new float[Num_box * (classe + 4)];

}

clock_t start_infer = clock();

//推理

const ICudaEngine& engine = (*contextYolov8Seg).getEngine();

// Pointers to input and output device buffers to pass to engine.

// Engine requires exactly IEngine::getNbBindings() number of buffers.

int nNumBindings = engineYolov8Seg->getNbBindings();

std::vector<void*> buffers;

buffers.clear();

buffers.resize(nNumBindings);

// In order to bind the buffers, we need to know the names of the input and output tensors.

// Note that indices are guaranteed to be less than IEngine::getNbBindings()

const int inputIndex = engine.getBindingIndex(INPUT_BLOB_NAME);

const int outputIndex = engine.getBindingIndex(OUTPUT_BLOB_NAME);

// Create GPU buffers on device

CHECK(cudaMalloc(&buffers[inputIndex], maxBatchSize * nInputSize));//

CHECK(cudaMalloc(&buffers[outputIndex], maxBatchSize * nOutputSize));

// cudaMalloc分配内存 cudaFree释放内存 cudaMemcpy或 cudaMemcpyAsync 在主机和设备之间传输数据

// cudaMemcpy cudaMemcpyAsync 显式地阻塞传输 显式地非阻塞传输

// DMA input batch data to device, infer on the batch asynchronously, and DMA output back to host

for (int i = 0; i < maxBatchSize; i++)

{

cudaMemcpy((unsigned char*)buffers[inputIndex] + nInputSize * i, dataVec[i], nInputSize, cudaMemcpyHostToDevice);

}

// 动态维度,设置batch = 4

contextYolov8Seg->setBindingDimensions(0, Dims4(maxBatchSize, channel, input_h, input_w));

contextYolov8Seg->executeV2(buffers.data());

CHECK(cudaMemcpy(prob, buffers[outputIndex], maxBatchSize * nOutputSize, cudaMemcpyDeviceToHost));

for (int i = 0; i < 100; ++i)

{

std::cout << prob[i] << " ";

}

std::cout << "\n-------------------------" << std::endl;

vecDst.clear();

std::vector<int> classIds;//结果id数组

std::vector<float> confidences;//结果每个id对应置信度数组

std::vector<cv::Rect> boxes;//每个id矩形框

//处理box

int net_length = classe + 4;

int dataSize = Num_box * (classe + 4);

vector<Mat> outputMat;

for (int i = 0; i < vecImg.size(); ++i)

{

for (int j = 0; j < dataSize; j++) {

(probVec[i][j]) = *(prob + j + (i * dataSize));

}

cv::Mat out1 = cv::Mat(net_length, Num_box, CV_32F, probVec[i]);

outputMat.push_back(out1);

}

clock_t end_infer = clock();

std::cout << "推理时间:" << end_infer - start_infer << "ms" << std::endl;

//start = std::chrono::system_clock::now();

clock_t start_post = clock();

for (int m = 0; m < vecImg.size(); ++m)

{

Mat src = vecImg[m];

int newh = vecPadSize[m][0], neww = vecPadSize[m][1], padh = vecPadSize[m][2], padw = vecPadSize[m][3];

float ratio_h = (float)src.rows / newh;

float ratio_w = (float)src.cols / neww;

Mat out1 = outputMat[m];

for (int i = 0; i < Num_box; i++) {

//输出是1*net_length*Num_box;所以每个box的属性是每隔Num_box取一个值,共net_length个值

cv::Mat scores = out1(Rect(i, 4, 1, classe)).clone();

Point classIdPoint;

double max_class_socre;

minMaxLoc(scores, 0, &max_class_socre, 0, &classIdPoint);

max_class_socre = (float)max_class_socre;

if (max_class_socre >= CONF_THRESHOLD) {

float x = (out1.at<float>(0, i) - padw) * ratio_w; //cx

float y = (out1.at<float>(1, i) - padh) * ratio_h; //cy

float w = out1.at<float>(2, i) * ratio_w; //w

float h = out1.at<float>(3, i) * ratio_h; //h

int left = MAX((x - 0.5 * w), 0);

int top = MAX((y - 0.5 * h), 0);

int width = (int)w;

int height = (int)h;

if (width <= 0 || height <= 0) { continue; }

classIds.push_back(classIdPoint.y);

confidences.push_back(max_class_socre);

boxes.push_back(Rect(left, top, width, height));

}

}

//执行非最大抑制以消除具有较低置信度的冗余重叠框(NMS)

std::vector<int> nms_result;

cv::dnn::NMSBoxes(boxes, confidences, CONF_THRESHOLD, NMS_THRESHOLD, nms_result);

ObjectTR result;

Rect holeImgRect(0, 0, src.cols, src.rows);

vector<ObjectTR> tempOutput;

for (int i = 0; i < nms_result.size(); ++i) {

int idx = nms_result[i];

result.classid = classIds[idx];

result.prob = confidences[idx];

result.rect = boxes[idx] & holeImgRect;

tempOutput.push_back(result);

}

Mat finalImg = src.clone();

DrawPredDetect(finalImg, classe, tempOutput);

Mat tempDst = finalImg.clone();

vecDst.push_back(tempDst);

output.push_back(tempOutput);

}

clock_t end_post = clock();

std::cout << "后处理时间:" << end_post - start_post << "ms" << std::endl;

// Release stream and buffers

CHECK(cudaFree(buffers[inputIndex]));

CHECK(cudaFree(buffers[outputIndex]));

std::vector<void*>().swap(buffers);

// Destroy the engine

/* contextYolov8Seg->destroy();

engineYolov8Seg->destroy();

runtimeYolov8Seg->destroy();*/

dataVec.clear();

probVec.clear();

for (int i = 0; i < maxBatchSize; ++i)

{

delete[] dataVec[i];

delete[] probVec[i];

}

delete[] prob;

return true;

}

void MODELDLL::DrawPredDetect(const Mat& img, const int& classe, std::vector<ObjectTR>& result) {

//生成随机颜色

std::vector<Scalar> color;

srand(time(0));

for (int i = 0; i < classe; i++) {

int b = rand() % 256;

int g = rand() % 256;

int r = rand() % 256;

color.push_back(Scalar(b, g, r));

}

for (int i = 0; i < result.size(); i++) {

int left, top;

left = result[i].rect.x;

top = result[i].rect.y;

int color_num = i;

rectangle(img, result[i].rect, Scalar(0, 0, 255), 2, 8);

//rectangle(img, result[i].box, color[result[i].id], 2, 8);

char label[100];

sprintf(label, "%d:%.2f", result[i].classid, result[i].prob);

int baseLine;

Size labelSize = getTextSize(label, FONT_HERSHEY_SIMPLEX, 0.5, 1, &baseLine);

top = max(top, labelSize.height);

/*putText(img, label, Point(left, top), FONT_HERSHEY_SIMPLEX, 1, color[result[i].id], 2);*/

putText(img, label, Point(left, top), FONT_HERSHEY_SIMPLEX, 1, Scalar(0, 0, 255), 2);

}

}

// yolov8分割推理

bool MODELDLL::LoadYoloV8SegEngine(const std::string& engineName)

{

// create a model using the API directly and serialize it to a stream

char* trtModelStream{ nullptr }; //char* trtModelStream==nullptr; 开辟空指针后 要和new配合使用,比如 trtModelStream = new char[size]

size_t size{ 0 };//与int固定四个字节不同有所不同,size_t的取值range是目标平台下最大可能的数组尺寸,一些平台下size_t的范围小于int的正数范围,又或者大于unsigned int. 使用Int既有可能浪费,又有可能范围不够大。

std::ifstream file(engineName, std::ios::binary);

if (file.good()) {

std::cout << "load engine success" << std::endl;

file.seekg(0, file.end);//指向文件的最后地址

size = file.tellg();//把文件长度告诉给size

//std::cout << "\nfile:" << argv[1] << " size is";

//std::cout << size << "";

file.seekg(0, file.beg);//指回文件的开始地址

trtModelStream = new char[size];//开辟一个char 长度是文件的长度

assert(trtModelStream);//

file.read(trtModelStream, size);//将文件内容传给trtModelStream

file.close();//关闭

}

else {

std::cout << "load engine failed" << std::endl;

return 1;

}

runtimeYolov8Seg = createInferRuntime(gLogger);

assert(runtimeYolov8Seg != nullptr);

bool didInitPlugins = initLibNvInferPlugins(nullptr, "");

engineYolov8Seg = runtimeYolov8Seg->deserializeCudaEngine(trtModelStream, size, nullptr);

assert(engineYolov8Seg != nullptr);

contextYolov8Seg = engineYolov8Seg->createExecutionContext();

assert(contextYolov8Seg != nullptr);

delete[] trtModelStream;

return true;

}

bool MODELDLL::YoloV8SegPredict(const Mat& src, Mat& dst, const int& channel, const int& classe, const int& input_h, const int& input_w,

const int& segChannels, const int& segWidth, const int& segHeight, const int& Num_box)

{

cudaSetDevice(DEVICE);

if (src.empty()) { std::cout << "image load faild" << std::endl; return 1; }

int img_width = src.cols;

int img_height = src.rows;

std::cout << "宽高:" << img_width << " " << img_height << std::endl;

// Subtract mean from image

float* data = new float[channel * input_h * input_w];

Mat pr_img0, pr_img;

std::vector<int> padsize;

Mat tempImg = src.clone();

pr_img = preprocess_img(tempImg, input_h, input_w, padsize); // Resize

int newh = padsize[0], neww = padsize[1], padh = padsize[2], padw = padsize[3];

float ratio_h = (float)src.rows / newh;

float ratio_w = (float)src.cols / neww;

int i = 0;// [1,3,INPUT_H,INPUT_W]

//std::cout << "pr_img.step" << pr_img.step << std::endl;

for (int row = 0; row < input_h; ++row) {

uchar* uc_pixel = pr_img.data + row * pr_img.step;//pr_img.step=widthx3 就是每一行有width个3通道的值

for (int col = 0; col < input_w; ++col)

{

data[i] = (float)uc_pixel[2] / 255.0;

data[i + input_h * input_w] = (float)uc_pixel[1] / 255.0;

data[i + 2 * input_h * input_w] = (float)uc_pixel[0] / 255.;

uc_pixel += 3;

++i;

}

}

// Run inference

static const int OUTPUT_SIZE = Num_box * (classe + 4 + segChannels);//output0

static const int OUTPUT_SIZE1 = segChannels * segWidth * segHeight;//output1

float* prob = new float[OUTPUT_SIZE];

float* prob1 = new float[OUTPUT_SIZE1];

//for (int i = 0; i < 10; i++) {//计算10次的推理速度

// auto start = std::chrono::system_clock::now();

// doInference(*context, data, prob, prob1, 1);

// auto end = std::chrono::system_clock::now();

// std::cout << std::chrono::duration_cast<std::chrono::milliseconds>(end - start).count() << "ms" << std::endl;

// }

auto start = std::chrono::system_clock::now();

//推理

int batchSize = 1;

const ICudaEngine& engine = (*contextYolov8Seg).getEngine();

// Pointers to input and output device buffers to pass to engine.

// Engine requires exactly IEngine::getNbBindings() number of buffers.

assert(engine.getNbBindings() == 3);

void* buffers[3];

// In order to bind the buffers, we need to know the names of the input and output tensors.

// Note that indices are guaranteed to be less than IEngine::getNbBindings()

const int inputIndex = engine.getBindingIndex(INPUT_BLOB_NAME);

const int outputIndex = engine.getBindingIndex(OUTPUT_BLOB_NAME);

const int outputIndex1 = engine.getBindingIndex(OUTPUT_BLOB_NAME1);

// Create GPU buffers on device

CHECK(cudaMalloc(&buffers[inputIndex], batchSize * 3 * input_h * input_w * sizeof(float)));//

CHECK(cudaMalloc(&buffers[outputIndex], batchSize * OUTPUT_SIZE * sizeof(float)));

CHECK(cudaMalloc(&buffers[outputIndex1], batchSize * OUTPUT_SIZE1 * sizeof(float)));

// cudaMalloc分配内存 cudaFree释放内存 cudaMemcpy或 cudaMemcpyAsync 在主机和设备之间传输数据

// cudaMemcpy cudaMemcpyAsync 显式地阻塞传输 显式地非阻塞传输

// Create stream

cudaStream_t stream;

CHECK(cudaStreamCreate(&stream));

// DMA input batch data to device, infer on the batch asynchronously, and DMA output back to host

CHECK(cudaMemcpyAsync(buffers[inputIndex], data, batchSize * 3 * input_h * input_w * sizeof(float), cudaMemcpyHostToDevice, stream));

(*contextYolov8Seg).enqueue(batchSize, buffers, stream, nullptr);

CHECK(cudaMemcpyAsync(prob, buffers[outputIndex], batchSize * OUTPUT_SIZE * sizeof(float), cudaMemcpyDeviceToHost, stream));

CHECK(cudaMemcpyAsync(prob1, buffers[outputIndex1], batchSize * OUTPUT_SIZE1 * sizeof(float), cudaMemcpyDeviceToHost, stream));

cudaStreamSynchronize(stream);

// Release stream and buffers

cudaStreamDestroy(stream);

CHECK(cudaFree(buffers[inputIndex]));

CHECK(cudaFree(buffers[outputIndex]));

CHECK(cudaFree(buffers[outputIndex1]));

//

auto end = std::chrono::system_clock::now();

std::cout << "推理时间:" << std::chrono::duration_cast<std::chrono::milliseconds>(end - start).count() << "ms" << std::endl;

std::vector<int> classIds;//结果id数组

std::vector<float> confidences;//结果每个id对应置信度数组

std::vector<cv::Rect> boxes;//每个id矩形框

std::vector<cv::Mat> picked_proposals; //后续计算mask

// 处理box

int net_length = classe + 4 + segChannels;

cv::Mat out1 = cv::Mat(net_length, Num_box, CV_32F, prob);

start = std::chrono::system_clock::now();

for (int i = 0; i < Num_box; i++) {

//输出是1*net_length*Num_box;所以每个box的属性是每隔Num_box取一个值,共net_length个值

cv::Mat scores = out1(Rect(i, 4, 1, classe)).clone();

Point classIdPoint;

double max_class_socre;

minMaxLoc(scores, 0, &max_class_socre, 0, &classIdPoint);

max_class_socre = (float)max_class_socre;

if (max_class_socre >= CONF_THRESHOLD) {

cv::Mat temp_proto = out1(Rect(i, 4 + classe, 1, segChannels)).clone();

picked_proposals.push_back(temp_proto.t());

float x = (out1.at<float>(0, i) - padw) * ratio_w; //cx

float y = (out1.at<float>(1, i) - padh) * ratio_h; //cy

float w = out1.at<float>(2, i) * ratio_w; //w

float h = out1.at<float>(3, i) * ratio_h; //h

int left = MAX((x - 0.5 * w), 0);

int top = MAX((y - 0.5 * h), 0);

int width = (int)w;

int height = (int)h;

if (width <= 0 || height <= 0) { continue; }

classIds.push_back(classIdPoint.y);

confidences.push_back(max_class_socre);

boxes.push_back(Rect(left, top, width, height));

}

}

//执行非最大抑制以消除具有较低置信度的冗余重叠框(NMS)

std::vector<int> nms_result;

cv::dnn::NMSBoxes(boxes, confidences, CONF_THRESHOLD, NMS_THRESHOLD, nms_result);

std::vector<cv::Mat> temp_mask_proposals;

std::vector<OutputSeg> output;

Rect holeImgRect(0, 0, src.cols, src.rows);

for (int i = 0; i < nms_result.size(); ++i) {

int idx = nms_result[i];

OutputSeg result;

result.id = classIds[idx];

result.confidence = confidences[idx];

result.box = boxes[idx] & holeImgRect;

output.push_back(result);

temp_mask_proposals.push_back(picked_proposals[idx]);

}

// 处理mask

Mat maskProposals;

for (int i = 0; i < temp_mask_proposals.size(); ++i)

maskProposals.push_back(temp_mask_proposals[i]);

Mat protos = Mat(segChannels, segWidth * segHeight, CV_32F, prob1);

Mat matmulRes = (maskProposals * protos).t();//n*32 32*25600 A*B是以数学运算中矩阵相乘的方式实现的,要求A的列数等于B的行数时

Mat masks = matmulRes.reshape(output.size(), { segWidth, segHeight});//n*160*160

std::vector<Mat> maskChannels;

cv::split(masks, maskChannels);

Rect roi(int((float)padw / input_w * segWidth), int((float)padh / input_h * segHeight), int(segWidth - padw / 2), int(segHeight - padh / 2));

for (int i = 0; i < output.size(); ++i) {

Mat dest, mask;

cv::exp(-maskChannels[i], dest);//sigmoid

dest = 1.0 / (1.0 + dest);//160*160

dest = dest(roi);

resize(dest, mask, cv::Size(src.cols, src.rows), INTER_NEAREST);

//crop----截取box中的mask作为该box对应的mask

Rect temp_rect = output[i].box;

mask = mask(temp_rect) > MASK_THRESHOLD;

output[i].boxMask = mask;

}

end = std::chrono::system_clock::now();

std::cout << "后处理时间:" << std::chrono::duration_cast<std::chrono::milliseconds>(end - start).count() << "ms" << std::endl;

Mat finalImg = src.clone();

DrawPred(finalImg, classe, output);

dst = finalImg.clone();

// Destroy the engine

contextYolov8Seg->destroy();

engineYolov8Seg->destroy();

runtimeYolov8Seg->destroy();

delete data;

delete prob;

delete prob1;

return 0;

}

void MODELDLL::DrawPred(const Mat& img, const int& classe, std::vector<OutputSeg> result) {

//生成随机颜色

std::vector<Scalar> color;

srand(time(0));

for (int i = 0; i < classe; i++) {

int b = rand() % 256;

int g = rand() % 256;

int r = rand() % 256;

color.push_back(Scalar(b, g, r));

}

Mat mask = img.clone();

for (int i = 0; i < result.size(); i++) {

int left, top;

left = result[i].box.x;

top = result[i].box.y;

int color_num = i;

rectangle(img, result[i].box, color[result[i].id], 2, 8);

mask(result[i].box).setTo(color[result[i].id], result[i].boxMask);

char label[100];

sprintf(label, "%d:%.2f", result[i].id, result[i].confidence);

//std::string label = std::to_string(result[i].id) + ":" + std::to_string(result[i].confidence);

int baseLine;

Size labelSize = getTextSize(label, FONT_HERSHEY_SIMPLEX, 0.5, 1, &baseLine);

top = max(top, labelSize.height);

putText(img, label, Point(left, top), FONT_HERSHEY_SIMPLEX, 1, color[result[i].id], 2);

}

addWeighted(img, 0.5, mask, 0.8, 1, img); //将mask加在原图上面

}

3.3.2.2 Infer.h

#pragma once

#ifdef ZYSEGMENTATIONMODEL_EXPORT

#define ZYSEGMENTATIONMODEL_API _declspec(dllexport)

#else

#define ZYSEGMENTATIONMODEL_API _declspec(dllimport)

#endif

#include <iostream>

#include "NvInfer.h"

#include "cuda_runtime_api.h"

#include "NvInferPlugin.h"

#include "logging.h"

#include <opencv2/opencv.hpp>

#include "utils.h"

#include <string>

#include <fstream>

using namespace nvinfer1;

using namespace cv;

using namespace std;

struct OutputSeg {

int id; //结果类别id

float confidence; //结果置信度

cv::Rect box; //矩形框

cv::Mat boxMask; //矩形框内mask,节省内存空间和加快速度

};

typedef struct {

float prob;

cv::Rect rect;

int classid;

}ObjectTR;

class ZYSEGMENTATIONMODEL_API MODELDLL

{

public:

MODELDLL();

~MODELDLL();

//检测

bool LoadYoloV8DetectEngine(const std::string& engineName);

bool YoloV8DetectPredict(const vector<Mat>& vecImg, vector<Mat>& vecDst, const int& channel, const int& classe, const int& input_h, const int& input_w,

const int& Num_box, float& CONF_THRESHOLD, vector<vector<ObjectTR>>& output);

//分割

bool LoadYoloV8SegEngine(const std::string& engineName);

bool YoloV8SegPredict(const Mat& src, Mat& dst, const int& channel, const int& classe, const int& input_h, const int& input_w,

const int& segChannels, const int& segWidth, const int& segHeight, const int& Num_box);

private:

//检测

void DrawPredDetect(const Mat& img, const int& classe, std::vector<ObjectTR>& result);

//分割

void DrawPred(const Mat& img, const int& classe, std::vector<OutputSeg> result);

};

开放原子开发者工作坊旨在鼓励更多人参与开源活动,与志同道合的开发者们相互交流开发经验、分享开发心得、获取前沿技术趋势。工作坊有多种形式的开发者活动,如meetup、训练营等,主打技术交流,干货满满,真诚地邀请各位开发者共同参与!

更多推荐

已为社区贡献2条内容

已为社区贡献2条内容

所有评论(0)