华为Altas 200DK A2 部署实战(五) 在Atlas 200 DK A2上部署Yolov8-pose姿态估计模型

本文在Atlas 200 DK A2上部署了Yolov8官方预训练的人体关键点检测模型,以及根据自制数据集训练的手部关键点检测模型,包含单张图片,摄像头视频帧,还有本地视频三种推理预测方式。

在Atlas 200 DK A2上部署Yolov8-pose姿态估计模型

对于我们用Pytorch框架训练的模型,我们将其转化为onnx模型后,要在开发板上部署还需要进一步转化成om模型。参考链接。

对于om模型的转化可以有两种方式实现,在PC端和开发板上都可以实现,前提是必须安装CANN工具包以及其依赖包。当然也可以通过华为自己的IDE MindStdio实现转化,但是其本质上还是基于CANN工具包进行的。开发板上的合设环境以及安装好了最新版本的CANN工具包。推荐在PC端上进行模型的转化,实测在开发板上转换模型速度有点慢。

在PC端上安装CANN工具包,以Ubuntu系统为例,首先安装依赖项。

# 首先安装依赖项,PC端连接网络,执行如下命令检查源是否可用。

apt-get update

# 检查root用户的umask

umask

#如果umask不等于0022,执行以下操作

vi ~/.bashrc

#在文件最后一行后面添加umask 0022内容,按执行esc 以及 :wq!命令保存文件并退出

source ~/.bashrc

#安装CANN工具包完成后,记得执行上述步骤将umask改为0027

#安装以下包

sudo apt-get install -y gcc g++ make cmake zlib1g \

zlib1g-dev openssl libsqlite3-dev \

libssl-dev libffi-dev libbz2-dev libxslt1-dev \

unzip pciutils net-tools libblas-dev gfortran libblas3

#在python环境中安装以下包,按照官网的教程,系统的python环境版本应该要大于等于3.7.5

pip3 install attrs

pip3 install numpy

pip3 install decorator

pip3 install sympy

pip3 install cffi

pip3 install pyyaml

pip3 install pathlib2

pip3 install psutil

pip3 install protobuf

pip3 install scipy

pip3 install requests

pip3 install absl-py

然后我们下载CANN工具包,点击此链接下载,这是6.2.RC2版本的CANN,华为的CANN分为社区版和商业版,我们的板子上是7.0 RC1版本,作为普通用户,我们目前下载不了这么高的版本。我认为只要PC端上的CANN版本小于等于板子上实际的CANN版本应该都是可以兼容的。

下载成功后,我们进入下载目录。

#增加对软件包的可执行权限

chmod +x Ascend-cann-toolkit_6.2.RC2_linux-x86_64.run

#执行以下命令安装软件

./Ascend-cann-toolkit_6.2.RC2_linux-x86_64.run --install

安装完成后,若显示如下信息,则安装成功,xxx对应软件包名。

[INFO] xxx install success

接下来画重点,我们还要配置环境变量,每次我们在使用CANN工具之前,都需要执行下面两句指令,如果嫌麻烦可以自己写进~/.bashrc文件的环境变量中。

#CANN_INSTALL_PATH 代表你安装的CANN工具包所在的目录,需要自己替换

source CANN_INSTALL_PATH/ascend-toolkit/set_env.sh

export LD_LIBRARY_PATH=CANN_INSTALL_PATH/ascend-toolkit/latest/x86_64-linux/devlib/:$LD_LIBRARY_PATH

至此,我们的CANN工具包就安装成功了。

接下来我们进行onnx模型到om模型的转化,注意在执行转化时我们不要进入conda环境,否则可能会报错,直接base环境就可以。参考链接。

# model为我们要转化的onnx模型 framework表示源模型为onnx output表示输出目录 soc_version表示开发板处理器信息

atc --model=handpose.onnx --framework=5 --output=handpose --soc_version=Ascend310B4

执行结果如下所示

为了方便展示,我们还将Yolov8官方预训练的Yolov8-pose.pt也一起转化成了yolov8n-pose.om文件,Yolov8官方提供的是人体关键点检测,我们自己训练的是手部关键点检测。

接下来我们将我们转化后的om模型放到开发板上然后编写测试例程。我们可以通过Vscode的SSH连接远程访问我们的开发板进行开发(这只是我选用的方式,我们也可以在开发板上安装IDE比如华为自己的MindStdio进行开发,功能很强大但是上手需要时间)。如下图所示。

我们第一步先跑通Yolov8官方预训练的yolov8n-pose.pt版本的模型。首先利用官方提供的yolov5的样例框架进行模型应用样例的设计,进入到/samples/notebooks/01-yolov5目录下,将其中main.ipynb的内容复制下来,粘贴到一个新创建的test.py文件中,如下所示修改。

# test.py

# 导入代码依赖

import cv2

import numpy as np

from skvideo.io import vreader, FFmpegWriter

from ais_bench.infer.interface import InferSession

import time

from det_utils import NMS ,postprocess, preprocess_warpAffine, random_color, draw_bbox

cfg = {

'conf_thres': 0.25, # 模型置信度阈值,阈值越低,得到的预测框越多

'iou_thres': 0.45, # IOU阈值,高于这个阈值的重叠预测框会被过滤掉

'input_shape': [640, 640], # 模型输入尺寸

}

# 模型路径

model_path = 'yolov8n-pose.om'

# 初始化推理模型

model = InferSession(0, model_path)

# 选择模式

infer_mode = 'camera'

# 模型推理

def model_infer(image, model, cfg):

start_time = time.time()

# 数据预处理

img_pre, IM = preprocess_warpAffine(image)

pre_time = time.time()

print("pre:",pre_time - start_time)

# 模型推理

output = model.infer([img_pre])[0].transpose(0,2,1) #(1, 56, 8400) to (1,8400,56)

infer_time = time.time()

print("infer:",infer_time - pre_time)

# 后处理

boxes = postprocess(output, IM, cfg['conf_thres'], cfg['iou_thres'])

post_time = time.time()

print("post:",post_time - infer_time)

# 绘图

img = draw_bbox(boxes, image)

draw_time = time.time()

print("draw:",draw_time - post_time)

return img

# 推理图片

def infer_image(img_path, model, cfg):

# 通过cv2.imread()载入图像

img = model_infer(cv2.imread(img_path), model, cfg)

# 保存

cv2.imwrite("infer-pose.jpg", img)

print("save done")

# 推理视频

def infer_video(video_path, model, labels_dict, cfg):

"""视频推理"""

image_widget = widgets.Image(format='jpeg', width=800, height=600)

display(image_widget)

# 读入视频

cap = cv2.VideoCapture(video_path)

while True:

ret, img_frame = cap.read()

if not ret:

break

# 对视频帧进行推理

image_pred = infer_frame_with_vis(img_frame, model, labels_dict, cfg, bgr2rgb=True)

image_widget.value = img2bytes(image_pred)

# 推理摄像头的流

def infer_camera(model, cfg):

"""外设摄像头实时推理"""

def find_camera_index():

max_index_to_check = 2 # Maximum index to check for camera

for index in range(max_index_to_check):

cap = cv2.VideoCapture(index)

if cap.read()[0]:

cap.release()

return index

# If no camera is found

raise ValueError("No camera found.")

# 获取摄像头

camera_index = find_camera_index()

cap = cv2.VideoCapture(camera_index)

# 返回当前时间

start_time = time.time()

counter = 0

while True:

# 从摄像头中读取一帧图像

_, frame = cap.read()

image = model_infer(frame, model, cfg)

counter += 1 # 计算帧数

if frame is not None:

try:

# 实时显示帧数

if (time.time() - start_time) != 0:

cv2.putText(image, "FPS:{0}".format(float('%.1f' % (counter / (time.time() - start_time)))), (5, 30),

cv2.FONT_HERSHEY_SIMPLEX, 0.75, (0, 0, 255), 1)

# 显示图像

cv2.imshow('keypoint', image)

except:

print(frame)

else:

exit(0)

if cv2.waitKey(1) & 0xFF == ord('q'):

break

# 释放资源

cap.release()

cv2.destroyAllWindows()

# 推理模式选择

if infer_mode == 'image':

img_path = 'bus.jpg'

infer_image(img_path, model, cfg)

elif infer_mode == 'camera':

infer_camera(model, cfg)

elif infer_mode == 'video':

video_path = 'racing.mp4'

infer_video(video_path, model, cfg)

然后再将/samples/notebooks/01-yolov5目录下的文件det_utils.py拷贝下来,这是用来做模型的前处理,后处理,以及画图部分的模块,修改其代码如下。

# det_utils.py

"""

Copyright 2022 Huawei Technologies Co., Ltd

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License.

"""

import cv2

import numpy as np

import torch

import torchvision

# 17个关键点连接顺序

skeleton = [[16, 14], [14, 12], [17, 15], [15, 13], [12, 13], [6, 12], [7, 13], [6, 7], [6, 8],

[7, 9], [8, 10], [9, 11], [2, 3], [1, 2], [1, 3], [2, 4], [3, 5], [4, 6], [5, 7]]

# 调色板

pose_palette = np.array([[255, 128, 0], [255, 153, 51], [255, 178, 102], [230, 230, 0], [255, 153, 255],

[153, 204, 255], [255, 102, 255], [255, 51, 255], [102, 178, 255], [51, 153, 255],

[255, 153, 153], [255, 102, 102], [255, 51, 51], [153, 255, 153], [102, 255, 102],

[51, 255, 51], [0, 255, 0], [0, 0, 255], [255, 0, 0], [255, 255, 255]],dtype=np.uint8)

# 骨架颜色

kpt_color = pose_palette[[16, 16, 16, 16, 16, 0, 0, 0, 0, 0, 0, 9, 9, 9, 9, 9, 9]]

#关键点颜色

limb_color = pose_palette[[9, 9, 9, 9, 7, 7, 7, 0, 0, 0, 0, 0, 16, 16, 16, 16, 16, 16, 16]]

def preprocess_warpAffine(image, dst_width=640, dst_height=640):

scale = min((dst_width / image.shape[1], dst_height / image.shape[0]))

ox = (dst_width - scale * image.shape[1]) / 2

oy = (dst_height - scale * image.shape[0]) / 2

M = np.array([

[scale, 0, ox],

[0, scale, oy]

], dtype=np.float32)

img_pre = cv2.warpAffine(image, M, (dst_width, dst_height), flags=cv2.INTER_LINEAR,

borderMode=cv2.BORDER_CONSTANT, borderValue=(114, 114, 114))

IM = cv2.invertAffineTransform(M)

img_pre = (img_pre[...,::-1] / 255.0).astype(np.float32) # BGR to RGB, 0 - 255 to 0.0 - 1.0

img_pre = img_pre.transpose(2, 0, 1)[None] # BHWC to BCHW (n, 3, h, w)

img_pre = torch.from_numpy(img_pre)

return img_pre, IM

def iou(box1, box2):

def area_box(box):

return (box[2] - box[0]) * (box[3] - box[1])

left, top = max(box1[:2], box2[:2])

right, bottom = min(box1[2:4], box2[2:4])

union = max((right-left), 0) * max((bottom-top), 0)

cross = area_box(box1) + area_box(box2) - union

if cross == 0 or union == 0:

return 0

return union / cross

def NMS(boxes, iou_thres):

remove_flags = [False] * len(boxes)

keep_boxes = []

for i, ibox in enumerate(boxes):

if remove_flags[i]:

continue

keep_boxes.append(ibox)

for j in range(i + 1, len(boxes)):

if remove_flags[j]:

continue

jbox = boxes[j]

if iou(ibox, jbox) > iou_thres:

remove_flags[j] = True

return keep_boxes

def postprocess(pred, IM=[], conf_thres=0.25, iou_thres=0.45):

# 输入是模型推理的结果,即8400个预测框

# 1,8400,56 [cx,cy,w,h,conf,17*3]

boxes = []

for img_id, box_id in zip(*np.where(pred[...,4] > conf_thres)):

item = pred[img_id, box_id]

cx, cy, w, h, conf = item[:5]

left = cx - w * 0.5

top = cy - h * 0.5

right = cx + w * 0.5

bottom = cy + h * 0.5

keypoints = item[5:].reshape(-1, 3)

keypoints[:, 0] = keypoints[:, 0] * IM[0][0] + IM[0][2]

keypoints[:, 1] = keypoints[:, 1] * IM[1][1] + IM[1][2]

boxes.append([left, top, right, bottom, conf, *keypoints.reshape(-1).tolist()])

boxes = np.array(boxes)

lr = boxes[:,[0, 2]]

tb = boxes[:,[1, 3]]

boxes[:,[0,2]] = IM[0][0] * lr + IM[0][2]

boxes[:,[1,3]] = IM[1][1] * tb + IM[1][2]

boxes = sorted(boxes.tolist(), key=lambda x:x[4], reverse=True)

return NMS(boxes, iou_thres)

def hsv2bgr(h, s, v):

h_i = int(h * 6)

f = h * 6 - h_i

p = v * (1 - s)

q = v * (1 - f * s)

t = v * (1 - (1 - f) * s)

r, g, b = 0, 0, 0

if h_i == 0:

r, g, b = v, t, p

elif h_i == 1:

r, g, b = q, v, p

elif h_i == 2:

r, g, b = p, v, t

elif h_i == 3:

r, g, b = p, q, v

elif h_i == 4:

r, g, b = t, p, v

elif h_i == 5:

r, g, b = v, p, q

return int(b * 255), int(g * 255), int(r * 255)

def random_color(id):

h_plane = (((id << 2) ^ 0x937151) % 100) / 100.0

s_plane = (((id << 3) ^ 0x315793) % 100) / 100.0

return hsv2bgr(h_plane, s_plane, 1)

def draw_bbox(boxes, img):

for box in boxes:

left, top, right, bottom = int(box[0]), int(box[1]), int(box[2]), int(box[3])

confidence = box[4]

label = 0

color = random_color(label)

cv2.rectangle(img, (left, top), (right, bottom), color, 2, cv2.LINE_AA)

caption = f"Person {confidence:.2f}"

w, h = cv2.getTextSize(caption, 0, 1, 2)[0]

cv2.rectangle(img, (left - 3, top - 33), (left + w + 10, top), color, -1)

cv2.putText(img, caption, (left, top - 5), 0, 1, (0, 0, 0), 2, 16)

keypoints = box[5:]

keypoints = np.array(keypoints).reshape(-1, 3)

for i, keypoint in enumerate(keypoints):

x, y, conf = keypoint

color_k = [int(x) for x in kpt_color[i]]

if conf < 0.5:

continue

if x != 0 and y != 0:

cv2.circle(img, (int(x), int(y)), 5, color_k, -1, lineType=cv2.LINE_AA)

for i, sk in enumerate(skeleton):

pos1 = (int(keypoints[(sk[0] - 1), 0]), int(keypoints[(sk[0] - 1), 1]))

pos2 = (int(keypoints[(sk[1] - 1), 0]), int(keypoints[(sk[1] - 1), 1]))

conf1 = keypoints[(sk[0] - 1), 2]

conf2 = keypoints[(sk[1] - 1), 2]

if conf1 < 0.5 or conf2 < 0.5:

continue

if pos1[0] == 0 or pos1[1] == 0 or pos2[0] == 0 or pos2[1] == 0:

continue

cv2.line(img, pos1, pos2, [int(x) for x in limb_color[i]], thickness=2, lineType=cv2.LINE_AA)

return img

做完这些后,我们就可以通过运行test.py在开发板上进行测试了,下面贴上效果。

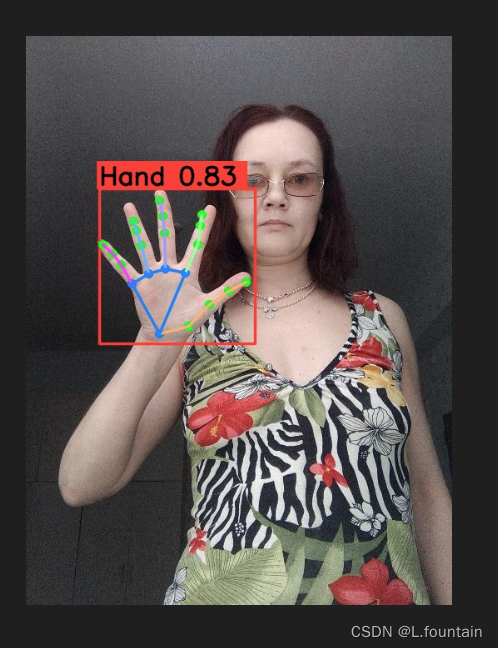

可以看见我们对Yolov8官方预训练的模型已经部署成功了。下面部署我们自己针对自制数据集训练的Yolov8n-pose手部关键点检测模型。需新建一个det_utils2.py,在det_utils.py的基础上进行修改如下所示。

"""

Copyright 2022 Huawei Technologies Co., Ltd

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License.

"""

import cv2

import numpy as np

import torch

import torchvision

# 21个关键点连接顺序

skeleton = [[1, 2], [2, 3], [3, 4], [4, 5], [1, 6], [6, 7], [7, 8], [8, 9], [6, 10],

[10, 11], [11, 12], [12, 13], [10, 14], [14, 15], [15, 16], [16, 17], [14, 18], [18, 19], [19, 20],[20,21],[1,18]]

# 调色板

pose_palette = np.array([[255, 128, 0], [255, 153, 51], [255, 178, 102], [230, 230, 0], [255, 153, 255],

[153, 204, 255], [255, 102, 255], [255, 51, 255], [102, 178, 255], [51, 153, 255],

[255, 153, 153], [255, 102, 102], [255, 51, 51], [153, 255, 153], [102, 255, 102],

[51, 255, 51], [0, 255, 0], [0, 0, 255], [255, 0, 0], [255, 255, 255]],dtype=np.uint8)

#关键点颜色

kpt_color = pose_palette[[0, 16, 16, 16, 16, 0, 16, 16, 16, 0, 16, 16, 16, 0, 16, 16, 16, 0, 16, 16, 16, 16, 16]]

# 骨架颜色

limb_color = pose_palette[[8, 8, 8, 8, 0, 13, 13, 13, 0, 10, 10, 10, 0, 2, 2, 2, 0, 7, 7, 7, 0]]

def preprocess_warpAffine(image, dst_width=640, dst_height=640):

scale = min((dst_width / image.shape[1], dst_height / image.shape[0]))

ox = (dst_width - scale * image.shape[1]) / 2

oy = (dst_height - scale * image.shape[0]) / 2

M = np.array([

[scale, 0, ox],

[0, scale, oy]

], dtype=np.float32)

img_pre = cv2.warpAffine(image, M, (dst_width, dst_height), flags=cv2.INTER_LINEAR,

borderMode=cv2.BORDER_CONSTANT, borderValue=(114, 114, 114))

IM = cv2.invertAffineTransform(M)

img_pre = (img_pre[...,::-1] / 255.0).astype(np.float32) # BGR to RGB, 0 - 255 to 0.0 - 1.0

img_pre = img_pre.transpose(2, 0, 1)[None] # BHWC to BCHW (n, 3, h, w)

img_pre = torch.from_numpy(img_pre)

return img_pre, IM

def iou(box1, box2):

def area_box(box):

return (box[2] - box[0]) * (box[3] - box[1])

left, top = max(box1[:2], box2[:2])

right, bottom = min(box1[2:4], box2[2:4])

union = max((right-left), 0) * max((bottom-top), 0)

cross = area_box(box1) + area_box(box2) - union

if cross == 0 or union == 0:

return 0

return union / cross

def NMS(boxes, iou_thres):

remove_flags = [False] * len(boxes)

keep_boxes = []

for i, ibox in enumerate(boxes):

if remove_flags[i]:

continue

keep_boxes.append(ibox)

for j in range(i + 1, len(boxes)):

if remove_flags[j]:

continue

jbox = boxes[j]

if iou(ibox, jbox) > iou_thres:

remove_flags[j] = True

return keep_boxes

def postprocess(pred, IM=[], conf_thres=0.25, iou_thres=0.45):

# 输入是模型推理的结果,即8400个预测框

# 1,8400,42 [cx,cy,w,h,conf,21*2]

boxes = []

for img_id, box_id in zip(*np.where(pred[...,4] > conf_thres)):

item = pred[img_id, box_id]

cx, cy, w, h, conf = item[:5]

left = cx - w * 0.5

top = cy - h * 0.5

right = cx + w * 0.5

bottom = cy + h * 0.5

keypoints = item[5:].reshape(-1, 2)

keypoints[:, 0] = keypoints[:, 0] * IM[0][0] + IM[0][2]

keypoints[:, 1] = keypoints[:, 1] * IM[1][1] + IM[1][2]

boxes.append([left, top, right, bottom, conf, *keypoints.reshape(-1).tolist()])

#防止原始图像没有待测目标

if boxes !=[]:

boxes = np.array(boxes)

lr = boxes[:,[0, 2]]

tb = boxes[:,[1, 3]]

boxes[:,[0,2]] = IM[0][0] * lr + IM[0][2]

boxes[:,[1,3]] = IM[1][1] * tb + IM[1][2]

boxes = sorted(boxes.tolist(), key=lambda x:x[4], reverse=True)

return NMS(boxes, iou_thres)

return []

def hsv2bgr(h, s, v):

h_i = int(h * 6)

f = h * 6 - h_i

p = v * (1 - s)

q = v * (1 - f * s)

t = v * (1 - (1 - f) * s)

r, g, b = 0, 0, 0

if h_i == 0:

r, g, b = v, t, p

elif h_i == 1:

r, g, b = q, v, p

elif h_i == 2:

r, g, b = p, v, t

elif h_i == 3:

r, g, b = p, q, v

elif h_i == 4:

r, g, b = t, p, v

elif h_i == 5:

r, g, b = v, p, q

return int(b * 255), int(g * 255), int(r * 255)

def random_color(id):

h_plane = (((id << 2) ^ 0x937151) % 100) / 100.0

s_plane = (((id << 3) ^ 0x315793) % 100) / 100.0

return hsv2bgr(h_plane, s_plane, 1)

def draw_bbox(boxes, img):

for box in boxes:

left, top, right, bottom = int(box[0]), int(box[1]), int(box[2]), int(box[3])

confidence = box[4]

label = 0

color = random_color(label)

cv2.rectangle(img, (left, top), (right, bottom), color, 2, cv2.LINE_AA)

caption = f"Person {confidence:.2f}"

w, h = cv2.getTextSize(caption, 0, 1, 2)[0]

cv2.rectangle(img, (left - 3, top - 33), (left + w + 10, top), color, -1)

cv2.putText(img, caption, (left, top - 5), 0, 1, (0, 0, 0), 2, 16)

keypoints = box[5:]

keypoints = np.array(keypoints).reshape(-1, 2)

for i, keypoint in enumerate(keypoints):

x, y = keypoint

color_k = [int(x) for x in kpt_color[i]]

if x != 0 and y != 0:

cv2.circle(img, (int(x), int(y)), 5, color_k, -1, lineType=cv2.LINE_AA)

for i, sk in enumerate(skeleton):

pos1 = (int(keypoints[(sk[0] - 1), 0]), int(keypoints[(sk[0] - 1), 1]))

pos2 = (int(keypoints[(sk[1] - 1), 0]), int(keypoints[(sk[1] - 1), 1]))

if pos1[0] == 0 or pos1[1] == 0 or pos2[0] == 0 or pos2[1] == 0:

continue

cv2.line(img, pos1, pos2, [int(x) for x in limb_color[i]], thickness=2, lineType=cv2.LINE_AA)

return img

然后我们将test.py开头导入的包由from det_utils import xxx改为from det_utils2 import xxx,就可以进行手部关键点的推理预测了。效果如下。

可以看出我们现在推理摄像头视频能达到16,17帧的水平,这个速度是比那些top-down分别用目标检测和关键点检测来推理的模型速度更快的。

至此,在Atlas 200 DK A2上部署Yolov8-pose模型就结束了,后续将会更新一些算子和程序的优化来改进此样例。

开放原子开发者工作坊旨在鼓励更多人参与开源活动,与志同道合的开发者们相互交流开发经验、分享开发心得、获取前沿技术趋势。工作坊有多种形式的开发者活动,如meetup、训练营等,主打技术交流,干货满满,真诚地邀请各位开发者共同参与!

更多推荐

已为社区贡献3条内容

已为社区贡献3条内容

所有评论(0)