部署K8S 1.15.2时遇到的问题及解决办法

配置文件路径:/etc/kubernetes/manifests/scheduler.conf 、/etc/kubernetes/manifests/controller-manager.conf。如controller-manager组件的配置如下:可以去掉--port=0这个设置,然后重启sudo systemctl restart kubelet。报错原因:https://github.co

1. 全部node节点出现 Unable to read config path "/etc/kubernetes/manifests": path does not exist, ignoring

报错原因:未知

解决办法:创建一个空manifests

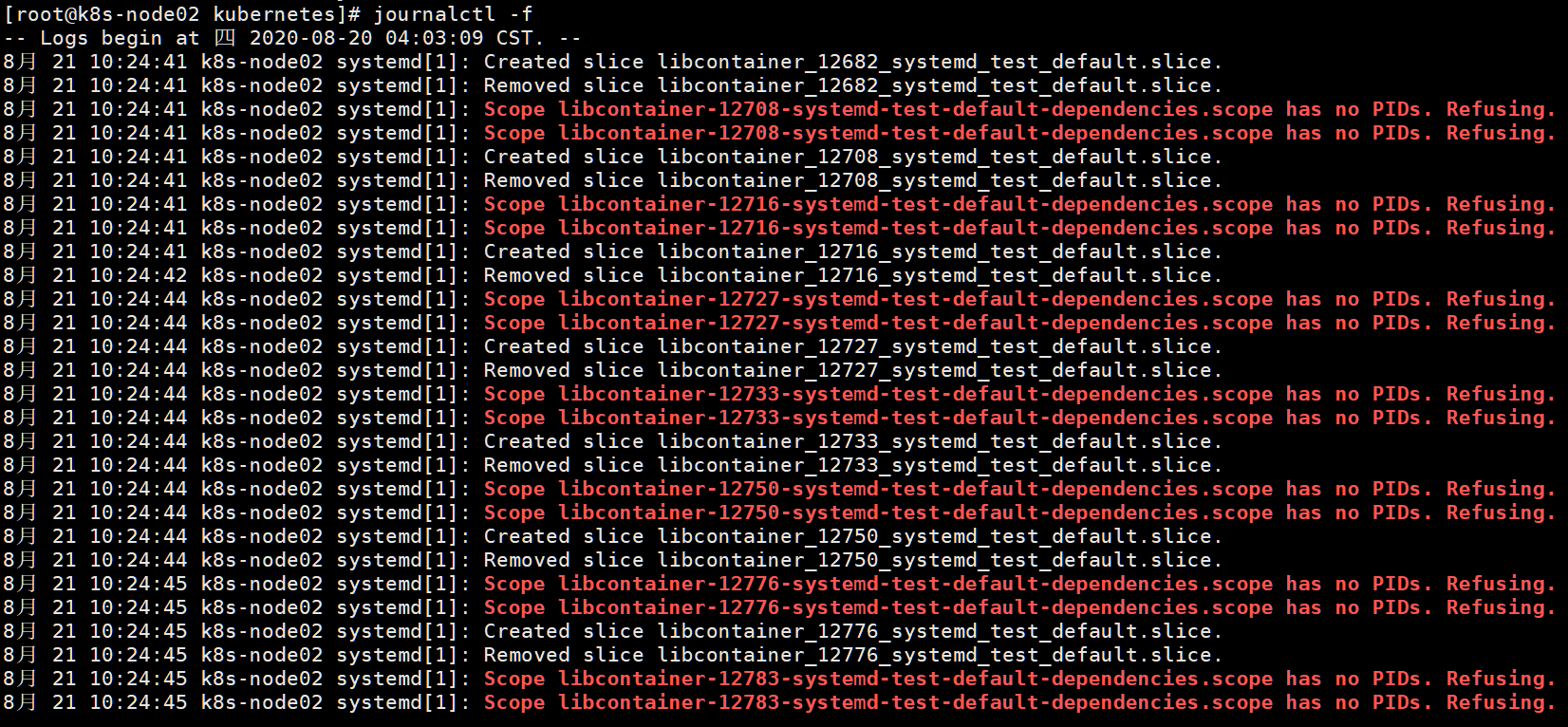

$ mkdir /etc/kubernetes/manifests2. 所有的node节点不停的报“Scope libcontainer-12708-systemd-test-default-dependencies.scope has no PIDs. Refusing.”

报错原因:https://github.com/kubernetes/kubernetes/issues/76531

解决办法:根据github上大家的回答,初步判定是bug,将docker版本升级到18.09,k8s版本升级到1.16.3,该问题已被修复

3. kubectl get cs显示scheduler Unhealthy,controller-manager Unhealthy

通过kubeadm安装的k8s集群获取kube-scheduler和kube-controller-manager组件状态异常

$ kubectl get cs

NAME STATUS MESSAGE ERROR

controller-manager Unhealthy Get http://127.0.0.1:10252/healthz: dial tcp 127.0.0.1:10252: connect: connection refused

scheduler Unhealthy Get http://127.0.0.1:10251/healthz: dial tcp 127.0.0.1:10251: connect: connection refused

etcd-0 Healthy {"health":"true"}kubernetes版本:v1.18.6

镜像信息

$ sudo docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

nginx latest 8cf1bfb43ff5 4 hours ago 132MB

k8s.gcr.io/kube-proxy v1.18.6 c3d62d6fe412 6 days ago 117MB

k8s.gcr.io/kube-apiserver v1.18.6 56acd67ea15a 6 days ago 173MB

k8s.gcr.io/kube-controller-manager v1.18.6 ffce5e64d915 6 days ago 162MB

k8s.gcr.io/kube-scheduler v1.18.6 0e0972b2b5d1 6 days ago 95.3MB

quay.io/coreos/flannel v0.12.0-amd64 4e9f801d2217 4 months ago 52.8MB

k8s.gcr.io/pause 3.2 80d28bedfe5d 5 months ago 683kB

k8s.gcr.io/coredns 1.6.7 67da37a9a360 5 months ago 43.8MB

k8s.gcr.io/etcd 3.4.3-0 303ce5db0e90 9 months ago 288MBk8s组件pod状态

$ kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-66bff467f8-22hgp 1/1 Running 0 45m

kube-system coredns-66bff467f8-ck6qq 1/1 Running 0 45m

kube-system etcd-node-14 1/1 Running 0 46m

kube-system kube-apiserver-node-14 1/1 Running 0 46m

kube-system kube-controller-manager-node-14 1/1 Running 0 17m

kube-system kube-flannel-ds-amd64-lm7lt 1/1 Running 0 44m

kube-system kube-proxy-5hghv 1/1 Running 0 45m

kube-system kube-scheduler-node-14 1/1 Running 0 17m排查思路:

1、先查看本地的端口,可以确认没有启动10251、10252端口

2、确认kube-scheduler和kube-controller-manager组件配置是否禁用了非安全端口

配置文件路径:/etc/kubernetes/manifests/scheduler.conf 、/etc/kubernetes/manifests/controller-manager.conf

如controller-manager组件的配置如下:可以去掉--port=0这个设置,然后重启sudo systemctl restart kubelet

重启服务之后确认组件状态,显示就正常了

$ kubectl get cs

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-0 Healthy {"health":"true"}更多推荐

已为社区贡献1条内容

已为社区贡献1条内容

所有评论(0)