http://thesecretlivesofdata.com/raft/

https://github.com/coreos/etcd

1 Introduction

Consensus algorithms allow a collection of machines to work as a coherent group that can survive the failures of some of its members. Because of this, they play a key role in building reliable large-scale software systems.

Paxos [15, 16] has dominated the discussion of consensus algorithms over the last decade: most implementations of consensus are based on Paxos or influenced by it, and Paxos has become the primary vehicle used to teach students about consensus.

Unfortunately, Paxos is quite difficult to understand, in spite of numerous attempts to make it more approachable.

Furthermore, its architecture requires complex changes to support practical systems. As a result, both system builders and students struggle with Paxos.

After struggling with Paxos ourselves, we set out to find a new consensus algorithm that could provide a better foundation for system building and education.

Our approach was unusual in that our primary goal was understandability: could we define a consensus algorithm for practical systems and describe it in a way that is significantly easier to learn than Paxos? Furthermore, we wanted the algorithm to facilitate the development of intuitions that are essential for system builders. It was important not just for the algorithm to work, but for it to be obvious why it works.

The result of this work is a consensus algorithm called Raft.

In designing Raft we applied specific techniques to improve understandability, including decomposition (Raft separates leader election, log replication, and safety) and state space reduction (relative to Paxos, Raft reduces the degree of nondeterminism and the ways servers can be inconsistent with each other).

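Unlike most systems, Raft's primary design goal is understandability. This line of research is worthwhile because the Paxos protocol is extremely hard to understand and hard to implement. Raft reaches its goal through techniques such as decomposition and state space reduction.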

Raft is similar in many ways to existing consensus algorithms (most notably, Oki and Liskov’s Viewstamped Replication [29, 22]), but it has several novel features:

• Strong leader: Raft uses a stronger form of leadership than other consensus algorithms.

For example, log entries only flow from the leader to other servers.

This simplifies the management of the replicated log and makes Raft easier to understand.

• Leader election: Raft uses randomized timers to elect leaders.

This adds only a small amount of mechanism to the heartbeats already required for any consensus algorithm, while resolving conflicts simply and rapidly.

• Membership changes: Raft’s mechanism for changing the set of servers in the cluster uses a new joint consensus approach where the majorities of two different configurations overlap during transitions.

This allows the cluster to continue operating normally during configuration changes.

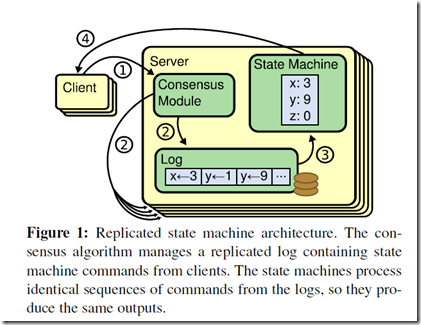

2 Replicated state machines

Consensus algorithms typically arise in the context of replicated state machines [37].

In this approach, state machines on a collection of servers compute identical copies of the same state and can continue operating even if some of the servers are down.

Replicated state machines are used to solve a variety of fault tolerance problems in distributed systems. For example, large-scale systems that have a single cluster leader, such as GFS [8], HDFS [38], and RAMCloud [33], typically use a separate replicated state machine to manage leader election and store configuration information that must survive leader crashes. Examples of replicated state machines include Chubby [2] and ZooKeeper [11].

Replicated state machines are typically implemented using a replicated log, as shown in Figure 1. Each server stores a log containing a series of commands, which its state machine executes in order. Each log contains the same commands in the same order, so each state machine processes the same sequence of commands. Since the state machines are deterministic, each computes the same state and the same sequence of outputs.

Keeping the replicated log consistent is the job of the consensus algorithm. The consensus module on a server receives commands from clients and adds them to its log.

It communicates with the consensus modules on other servers to ensure that every log eventually contains the same requests in the same order, even if some servers fail.

Once commands are properly replicated, each server’s state machine processes them in log order, and the outputs are returned to clients. As a result, the servers appear to form a single, highly reliable state machine.
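As a minimal sketch of why a shared log yields identical replicas, the snippet below feeds one log to three deterministic state machines. The `KVStateMachine` class and the `(key, value)` command format are assumptions made for illustration; they do not come from the paper.

```python
from dataclasses import dataclass, field


@dataclass
class KVStateMachine:
    """A deterministic key-value state machine (hypothetical example)."""
    state: dict = field(default_factory=dict)

    def apply(self, command):
        # A command is a (key, value) assignment such as ("x", 3).
        key, value = command
        self.state[key] = value
        return value  # the output returned to the client


# The same commands in the same order, as stored on every server.
log = [("x", 3), ("y", 1), ("x", 2)]

replicas = [KVStateMachine() for _ in range(3)]
outputs = [[sm.apply(cmd) for cmd in log] for sm in replicas]
```

Because `apply` depends only on the current state and the command, every replica ends in the same state and produces the same outputs. The consensus algorithm's job is only to make sure each replica sees the same `log`.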

The consensus problem, then, is how a distributed state machine keeps one and the same execution sequence across its different replicas.

Consensus algorithms for practical systems typically have the following properties:

• They ensure safety (never returning an incorrect result) under all non-Byzantine conditions, including network delays, partitions, and packet loss, duplication, and reordering.

• They are fully functional (available) as long as any majority of the servers are operational and can communicate with each other and with clients.

Thus, a typical cluster of five servers can tolerate the failure of any two servers. Servers are assumed to fail by stopping; they may later recover from state on stable storage and rejoin the cluster.

• They do not depend on timing to ensure the consistency of the logs: faulty clocks and extreme message delays can, at worst, cause availability problems.

• In the common case, a command can complete as soon as a majority of the cluster has responded to a single round of remote procedure calls; a minority of slow servers need not impact overall system performance.
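The majority arithmetic behind the five-server example can be sketched as follows. The helper names `majority` and `tolerated_failures` are hypothetical.

```python
def majority(cluster_size: int) -> int:
    """Smallest number of servers that forms a majority."""
    return cluster_size // 2 + 1


def tolerated_failures(cluster_size: int) -> int:
    """How many servers may fail while a majority is still available."""
    return cluster_size - majority(cluster_size)
```

With five servers a majority is three, so two failures are tolerated. A sixth server raises the majority to four without raising the number of tolerated failures, which is one reason odd cluster sizes are the usual choice.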

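Consensus algorithms need to guarantee the following properties:

1. Safety: always return a correct result (under non-Byzantine conditions), even with network delays, partitions, packet loss, duplication, and reordering.

2. High availability: the cluster keeps working as long as a majority of the servers are operational and reachable.

3. Consistency does not depend on timing: faulty clocks or message delays can, at worst, hurt availability, never consistency.

4. A command is considered complete once a majority has responded (one round of RPCs); a minority of slow servers does not affect system performance.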

3 What’s wrong with Paxos?

Over the last ten years, Leslie Lamport’s Paxos protocol [15] has become almost synonymous with consensus: it is the protocol most commonly taught in courses, and most implementations of consensus use it as a starting point. Paxos first defines a protocol capable of reaching agreement on a single decision, such as a single replicated log entry. We refer to this subset as single-decree Paxos.

Paxos then combines multiple instances of this protocol to facilitate a series of decisions such as a log (multi-Paxos).

Paxos ensures both safety and liveness, and it supports changes in cluster membership. Its correctness has been proven, and it is efficient in the normal case.

Unfortunately, Paxos has two significant drawbacks.

The first drawback is that Paxos is exceptionally difficult to understand. The full explanation [15] is notoriously opaque; few people succeed in understanding it, and only with great effort. As a result, there have been several attempts to explain Paxos in simpler terms [16, 20, 21].

These explanations focus on the single-decree subset, yet they are still challenging. In an informal survey of attendees at NSDI 2012, we found few people who were comfortable with Paxos, even among seasoned researchers.

We struggled with Paxos ourselves; we were not able to understand the complete protocol until after reading several simplified explanations and designing our own alternative protocol, a process that took almost a year.

We hypothesize that Paxos’ opaqueness derives from its choice of the single-decree subset as its foundation.

Single-decree Paxos is dense and subtle: it is divided into two stages that do not have simple intuitive explanations and cannot be understood independently. Because of this, it is difficult to develop intuitions about why the single-decree protocol works. The composition rules for multi-Paxos add significant additional complexity and subtlety.

We believe that the overall problem of reaching consensus on multiple decisions (i.e., a log instead of a single entry) can be decomposed in other ways that are more direct and obvious.

The second problem with Paxos is that it does not provide a good foundation for building practical implementations.

One reason is that there is no widely agreed-upon algorithm for multi-Paxos. Lamport’s descriptions are mostly about single-decree Paxos; he sketched possible approaches to multi-Paxos, but many details are missing.

There have been several attempts to flesh out and optimize Paxos, such as [26], [39], and [13], but these differ from each other and from Lamport’s sketches.

Systems such as Chubby [4] have implemented Paxos-like algorithms, but in most cases their details have not been published.

Furthermore, the Paxos architecture is a poor one for building practical systems; this is another consequence of the single-decree decomposition. For example, there is little benefit to choosing a collection of log entries independently and then melding them into a sequential log; this just adds complexity. It is simpler and more efficient to design a system around a log, where new entries are appended sequentially in a constrained order. Another problem is that Paxos uses a symmetric peer-to-peer approach at its core (though it eventually suggests a weak form of leadership as a performance optimization). This makes sense in a simplified world where only one decision will be made, but few practical systems use this approach. If a series of decisions must be made, it is simpler and faster to first elect a leader, then have the leader coordinate the decisions.

As a result, practical systems bear little resemblance to Paxos. Each implementation begins with Paxos, discovers the difficulties in implementing it, and then develops a significantly different architecture. This is time-consuming and error-prone, and the difficulties of understanding Paxos exacerbate the problem. Paxos’ formulation may be a good one for proving theorems about its correctness, but real implementations are so different from Paxos that the proofs have little value. The following comment from the Chubby implementers is typical:

There are significant gaps between the description of the Paxos algorithm and the needs of a real-world system. . . . the final system will be based on an unproven protocol [4].

Because of these problems, we concluded that Paxos does not provide a good foundation either for system building or for education. Given the importance of consensus in large-scale software systems, we decided to see if we could design an alternative consensus algorithm with better properties than Paxos. Raft is the result of that experiment.

4 Designing for understandability

We had several goals in designing Raft: it must provide a complete and practical foundation for system building, so that it significantly reduces the amount of design work required of developers; it must be safe under all conditions and available under typical operating conditions; and it must be efficient for common operations. But our most important goal, and most difficult challenge, was understandability. It must be possible for a large audience to understand the algorithm comfortably. In addition, it must be possible to develop intuitions about the algorithm, so that system builders can make the extensions that are inevitable in real-world implementations.

There were numerous points in the design of Raft where we had to choose among alternative approaches.

In these situations we evaluated the alternatives based on understandability: how hard is it to explain each alternative (for example, how complex is its state space, and does it have subtle implications?), and how easy will it be for a reader to completely understand the approach and its implications?

We recognize that there is a high degree of subjectivity in such analysis; nonetheless, we used two techniques that are generally applicable.

The first technique is the well-known approach of problem decomposition: wherever possible, we divided problems into separate pieces that could be solved, explained, and understood relatively independently. For example, in Raft we separated leader election, log replication, safety, and membership changes.

Our second approach was to simplify the state space by reducing the number of states to consider, making the system more coherent and eliminating nondeterminism where possible.

Specifically, logs are not allowed to have holes, and Raft limits the ways in which logs can become inconsistent with each other. Although in most cases we tried to eliminate nondeterminism, there are some situations where nondeterminism actually improves understandability.

In particular, randomized approaches introduce nondeterminism, but they tend to reduce the state space by handling all possible choices in a similar fashion (“choose any; it doesn’t matter”). We used randomization to simplify the Raft leader election algorithm.

5 The Raft consensus algorithm

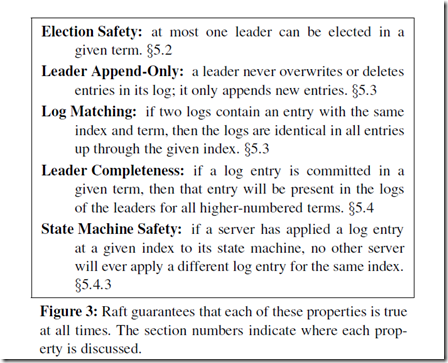

Raft is an algorithm for managing a replicated log of the form described in Section 2. Figure 2 summarizes the algorithm in condensed form for reference, and Figure 3 lists key properties of the algorithm; the elements of these figures are discussed piecewise over the rest of this section.

Raft implements consensus by first electing a distinguished leader, then giving the leader complete responsibility for managing the replicated log.

The leader accepts log entries from clients, replicates them on other servers, and tells servers when it is safe to apply log entries to their state machines.

Having a leader simplifies the management of the replicated log. For example, the leader can decide where to place new entries in the log without consulting other servers, and data flows in a simple fashion from the leader to other servers. A leader can fail or become disconnected from the other servers, in which case a new leader is elected.

Given the leader approach, Raft decomposes the consensus problem into three relatively independent subproblems, which are discussed in the subsections that follow:

• Leader election: a new leader must be chosen when an existing leader fails (Section 5.2).

• Log replication: the leader must accept log entries from clients and replicate them across the cluster, forcing the other logs to agree with its own (Section 5.3).

• Safety: the key safety property for Raft is the State Machine Safety Property in Figure 3: if any server has applied a particular log entry to its state machine, then no other server may apply a different command for the same log index. Section 5.4 describes how Raft ensures this property; the solution involves an additional restriction on the election mechanism described in Section 5.2.

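To stress the point again: because Raft adopts a strong leader, a complicated problem can be broken into pieces and solved one at a time. The following sections explain how each piece is solved.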

5.1 Raft basics

A Raft cluster contains several servers; five is a typical number, which allows the system to tolerate two failures.

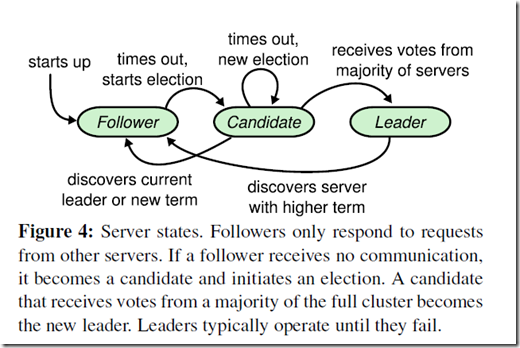

At any given time each server is in one of three states: leader, follower, or candidate. In normal operation there is exactly one leader and all of the other servers are followers.

Followers are passive: they issue no requests on their own but simply respond to requests from leaders and candidates. The leader handles all client requests (if a client contacts a follower, the follower redirects it to the leader). The third state, candidate, is used to elect a new leader as described in Section 5.2. Figure 4 shows the states and their transitions; the transitions are discussed below.

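This describes the three roles and the transitions between them, which is fairly easy to follow.

Note that a follower becomes a candidate only after a randomized timeout. This is crucial: it keeps multiple followers from becoming candidates at the same time and colliding.

Only a candidate can request votes from other servers.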

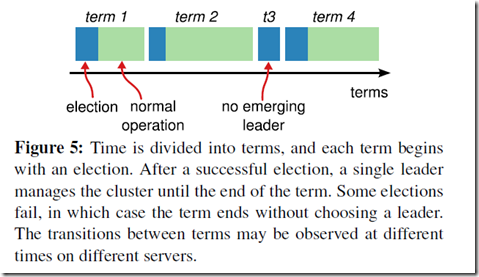

Raft divides time into terms of arbitrary length, as shown in Figure 5.

Terms are numbered with consecutive integers. Each term begins with an election, in which one or more candidates attempt to become leader as described in Section 5.2.

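Terms are a common design in distributed systems, comparable to the epoch in ZooKeeper. In Raft a term is a consecutively increasing integer, and a term lasts until the next election.

If an election fails and produces no leader, the next election starts under a new term. In short, every election marks the start of a term.

Terms make it easy to detect stale information, such as an outdated leader.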

If a candidate wins the election, then it serves as leader for the rest of the term. In some situations an election will result in a split vote. In this case the term will end with no leader; a new term (with a new election) will begin shortly. Raft ensures that there is at most one leader in a given term.

Different servers may observe the transitions between terms at different times, and in some situations a server may not observe an election or even entire terms.

Terms act as a logical clock [14] in Raft, and they allow servers to detect obsolete information such as stale leaders. Each server stores a current term number, which increases monotonically over time. Current terms are exchanged whenever servers communicate; if one server’s current term is smaller than the other’s, then it updates its current term to the larger value. If a candidate or leader discovers that its term is out of date, it immediately reverts to follower state. If a server receives a request with a stale term number, it rejects the request.
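The term rules above can be sketched in a few lines. This is an illustration under assumptions, not the paper's code: the `Server` class, its field names, and the `observe_term` method are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Server:
    current_term: int = 0
    role: str = "follower"  # "follower", "candidate", or "leader"

    def observe_term(self, message_term: int) -> bool:
        """Apply the term rules to an incoming message.

        Returns True if the message may be processed further and
        False if it carries a stale term and must be rejected.
        """
        if message_term < self.current_term:
            return False  # stale sender: reject the request
        if message_term > self.current_term:
            # Our own term is out of date: adopt the larger term and
            # revert to follower, whatever role we had before.
            self.current_term = message_term
            self.role = "follower"
        return True
```

For example, a leader in term 3 rejects a message from term 2 and keeps leading, but steps down to follower as soon as it sees a message from term 5.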

Raft servers communicate using remote procedure calls (RPCs), and the basic consensus algorithm requires only two types of RPCs.

RequestVote RPCs are initiated by candidates during elections (Section 5.2), and AppendEntries RPCs are initiated by leaders to replicate log entries and to provide a form of heartbeat (Section 5.3).

Section 7 adds a third RPC for transferring snapshots between servers. Servers retry RPCs if they do not receive a response in a timely manner, and they issue RPCs in parallel for best performance.

Needing only two kinds of RPC is another sign of how simple the Raft protocol is.

5.2 Leader election

Raft uses a heartbeat mechanism to trigger leader election.

When servers start up, they begin as followers. A server remains in follower state as long as it receives valid RPCs from a leader or candidate. Leaders send periodic heartbeats (AppendEntries RPCs that carry no log entries) to all followers in order to maintain their authority. If a follower receives no communication over a period of time called the election timeout, then it assumes there is no viable leader and begins an election to choose a new leader.

To begin an election, a follower increments its current term and transitions to candidate state. It then votes for itself and issues RequestVote RPCs in parallel to each of the other servers in the cluster. A candidate continues in this state until one of three things happens: (a) it wins the election, (b) another server establishes itself as leader, or (c) a period of time goes by with no winner. These outcomes are discussed separately in the paragraphs below.

Win.

A candidate wins an election if it receives votes from a majority of the servers in the full cluster for the same term. Each server will vote for at most one candidate in a given term, on a first-come-first-served basis (note: Section 5.4 adds an additional restriction on votes). The majority rule ensures that at most one candidate can win the election for a particular term (the Election Safety Property in Figure 3). Once a candidate wins an election, it becomes leader. It then sends heartbeat messages to all of the other servers to establish its authority and prevent new elections.
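A minimal sketch of the voting rule described here, assuming a hypothetical `Voter` class and `wins_election` helper. It deliberately leaves out the extra log restriction from Section 5.4 and models only "at most one vote per term, first come first served" plus the majority test.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Voter:
    current_term: int = 0
    voted_for: Optional[str] = None  # candidate voted for in current_term

    def handle_request_vote(self, term: int, candidate_id: str) -> bool:
        if term < self.current_term:
            return False  # request from a stale term
        if term > self.current_term:
            # A new term starts with a fresh, unused vote.
            self.current_term = term
            self.voted_for = None
        if self.voted_for in (None, candidate_id):
            self.voted_for = candidate_id  # first come, first served
            return True
        return False  # already voted for someone else in this term


def wins_election(votes: int, cluster_size: int) -> bool:
    """A candidate wins with votes from a majority of the full cluster."""
    return votes > cluster_size // 2
```

Since each voter grants at most one vote per term and two majorities of the same cluster always overlap, two candidates cannot both collect a majority in the same term.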

Another server wins.

While waiting for votes, a candidate may receive an AppendEntries RPC from another server claiming to be leader. If the leader’s term (included in its RPC) is at least as large as the candidate’s current term, then the candidate recognizes the leader as legitimate and returns to follower state. If the term in the RPC is smaller than the candidate’s current term, then the candidate rejects the RPC and continues in candidate state.

Nobody wins.

The third possible outcome is that a candidate neither wins nor loses the election: if many followers become candidates at the same time, votes could be split so that no candidate obtains a majority. When this happens, each candidate will time out and start a new election by incrementing its term and initiating another round of RequestVote RPCs. However, without extra measures split votes could repeat indefinitely.

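A server starts as a follower and periodically receives heartbeats from the leader. For simplicity, a heartbeat is just an AppendEntries RPC with no content.

If heartbeats stop arriving, the leader has crashed or become unreachable. After a randomized timeout the follower becomes a candidate and sends RequestVote RPCs.

There are then three possible outcomes, as summarized above, which are fairly easy to follow.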

Raft uses randomized election timeouts to ensure that split votes are rare and that they are resolved quickly.

To prevent split votes in the first place, election timeouts are chosen randomly from a fixed interval (e.g., 150–300ms).

This spreads out the servers so that in most cases only a single server will time out; it wins the election and sends heartbeats before any other servers time out. The same mechanism is used to handle split votes. Each candidate restarts its randomized election timeout at the start of an election, and it waits for that timeout to elapse before starting the next election; this reduces the likelihood of another split vote in the new election. Section 9.3 shows that this approach elects a leader rapidly.
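To make the randomized timeout concrete, here is a small sketch that draws a timeout for each of five servers from the 150–300 ms interval mentioned above and picks the server whose timer fires first. The function names and the server labels `S1`..`S5` are assumptions for illustration; a real implementation would use actual timers rather than comparing numbers.

```python
import random


def election_timeout_ms(rng, low=150.0, high=300.0):
    """Draw a fresh election timeout uniformly from [low, high] ms."""
    return rng.uniform(low, high)


def first_to_time_out(timeouts):
    """Return the server whose timer fires first (the likely candidate)."""
    return min(timeouts, key=timeouts.get)


rng = random.Random(42)  # seeded only so the sketch is repeatable
timeouts = {f"S{i}": election_timeout_ms(rng) for i in range(1, 6)}
candidate = first_to_time_out(timeouts)
```

Because the timeouts are spread over the interval, one server usually times out well before the others and can win the election and send heartbeats before they start competing. After a split vote, each candidate draws a new timeout, so the same spreading applies to the retry.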

Elections are an example of how understandability guided our choice between design alternatives.

Initially we planned to use a ranking system: each candidate was assigned a unique rank, which was used to select between competing candidates. If a candidate discovered another candidate with higher rank, it would return to follower state so that the higher ranking candidate could more easily win the next election. We found that this approach created subtle issues around availability (a lower-ranked server might need to time out and become a candidate again if a higher-ranked server fails, but if it does so too soon, it can reset progress towards electing a leader). We made adjustments to the algorithm several times, but after each adjustment new corner cases appeared. Eventually we concluded that the randomized retry approach is more obvious and understandable.

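Randomized election timeouts keep multiple candidates from colliding.

The paper also describes an alternative that picks among candidates by ranking. My personal takeaway is that in a distributed environment, clever schemes often fail to work.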

5.3 Log replication

Once a leader has been elected, it begins servicing client requests. Each client request contains a command to be executed by the replicated state machines. The leader appends the command to its log as a new entry, then issues AppendEntries RPCs in parallel to each of the other servers to replicate the entry. When the entry has been safely replicated (as described below), the leader applies the entry to its state machine and returns the result of that execution to the client. If followers crash or run slowly, or if network packets are lost, the leader retries AppendEntries RPCs indefinitely (even after it has responded to the client) until all followers eventually store all log entries.

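Because there is a leader, the log replication algorithm here is much simpler than in Paxos and needs no two-phase commit.

The leader simply replicates one way to the followers. Once a majority has the entry, the leader commits it and then propagates the commit result to the followers as well. For the minority of servers that are unresponsive or slow, it just keeps retrying with AppendEntries RPCs.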

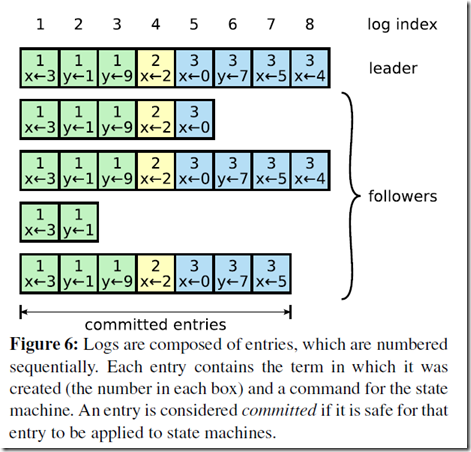

Logs are organized as shown in Figure 6. Each log entry stores a state machine command along with the term number when the entry was received by the leader.

The term numbers in log entries are used to detect inconsistencies between logs and to ensure some of the properties in Figure 3.

Each log entry also has an integer index identifying its position in the log.

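On the structure of the log: first, every entry has an increasing index.

Each entry also has a term number; the figure above shows the entries of each term in a different color.

Finally, there is the entry's content, for example x = 3.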

The leader decides when it is safe to apply a log entry to the state machines; such an entry is called committed.

Raft guarantees that committed entries are durable and will eventually be executed by all of the available state machines. A log entry is committed once the leader that created the entry has replicated it on a majority of the servers (e.g., entry 7 in Figure 6). This also commits all preceding entries in the leader’s log, including entries created by previous leaders. Section 5.4 discusses some subtleties when applying this rule after leader changes, and it also shows that this definition of commitment is safe. The leader keeps track of the highest index it knows to be committed, and it includes that index in future AppendEntries RPCs (including heartbeats) so that the other servers eventually find out. Once a follower learns that a log entry is committed, it applies the entry to its local state machine (in log order).

We designed the Raft log mechanism to maintain a high level of coherency between the logs on different servers.

Not only does this simplify the system’s behavior and make it more predictable, but it is an important component of ensuring safety.

Raft maintains the following properties, which together constitute the Log Matching Property in Figure 3:

• If two entries in different logs have the same index and term, then they store the same command.

• If two entries in different logs have the same index and term, then the logs are identical in all preceding entries.

The first property follows from the fact that a leader creates at most one entry with a given log index in a given term, and log entries never change their position in the log.

The second property is guaranteed by a simple consistency check performed by AppendEntries.

When sending an AppendEntries RPC, the leader includes the index and term of the entry in its log that immediately precedes the new entries.

If the follower does not find an entry in its log with the same index and term, then it refuses the new entries.

The consistency check acts as an induction step: the initial empty state of the logs satisfies the Log Matching Property, and the consistency check preserves the Log Matching Property whenever logs are extended.

As a result, whenever AppendEntries returns successfully, the leader knows that the follower’s log is identical to its own log up through the new entries.
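The consistency check itself is small enough to sketch. Here a log is reduced to a list of term numbers, `prev_index` and `prev_term` stand for the index and term of the entry immediately preceding the new entries, and `prev_index == 0` is an assumed convention meaning "the new entries start at the beginning of the log". The function name is hypothetical.

```python
def passes_consistency_check(log_terms, prev_index, prev_term):
    """Follower-side check performed before accepting new entries.

    log_terms[i - 1] is the term of the entry at 1-based index i.
    """
    if prev_index == 0:
        return True  # nothing precedes the new entries
    if prev_index > len(log_terms):
        return False  # follower is missing the preceding entry
    return log_terms[prev_index - 1] == prev_term
```

For a follower whose log has terms `[1, 1, 2]`, a request with `prev_index=3, prev_term=2` passes, while `prev_index=3, prev_term=3` (conflicting entry) and `prev_index=4` (missing entry) are both refused.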

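Raft maintains the following properties for the log:

If entries in two different log replicas have the same index and term, then they hold the same command, and all preceding entries are identical as well.

This expresses, first, that entries are unique and immutable; and second, the consistency guarantee: replication proceeds in order, so if the current entry is in sync, everything before it is in sync too.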

During normal operation, the logs of the leader and followers stay consistent, so the AppendEntries consistency check never fails.

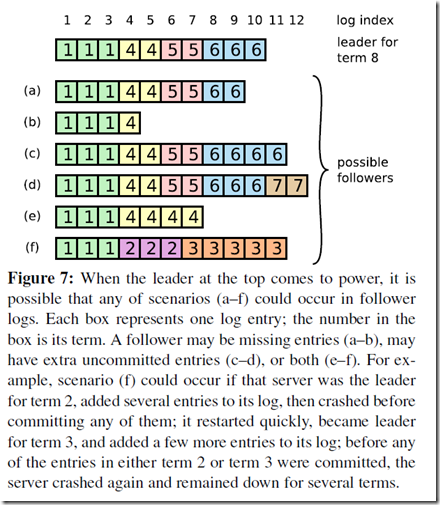

However, leader crashes can leave the logs inconsistent (the old leader may not have fully replicated all of the entries in its log). These inconsistencies can compound over a series of leader and follower crashes. Figure 7 illustrates the ways in which followers’ logs may differ from that of a new leader.

A follower may be missing entries that are present on the leader, it may have extra entries that are not present on the leader, or both. Missing and extraneous entries in a log may span multiple terms.

In Raft, the leader handles inconsistencies by forcing the followers’ logs to duplicate its own. This means that conflicting entries in follower logs will be overwritten with entries from the leader’s log.

Section 5.4 will show that this is safe when coupled with one more restriction.

To bring a follower’s log into consistency with its own, the leader must find the latest log entry where the two logs agree, delete any entries in the follower’s log after that point, and send the follower all of the leader’s entries after that point. All of these actions happen in response to the consistency check performed by AppendEntries RPCs. The leader maintains a nextIndex for each follower, which is the index of the next log entry the leader will send to that follower. When a leader first comes to power, it initializes all nextIndex values to the index just after the last one in its log (11 in Figure 7).

If a follower’s log is inconsistent with the leader’s, the AppendEntries consistency check will fail in the next AppendEntries RPC. After a rejection, the leader decrements nextIndex and retries the AppendEntries RPC.

Eventually nextIndex will reach a point where the leader and follower logs match. When this happens, AppendEntries will succeed, which removes any conflicting entries in the follower’s log and appends entries from the leader’s log (if any).

Once AppendEntries succeeds, the follower’s log is consistent with the leader’s, and it will remain that way for the rest of the term.
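The nextIndex backoff can be sketched as a tiny in-memory simulation. Everything here is an assumption made for illustration: logs are lists of `(term, command)` tuples with 1-based indexes, RPCs are plain function calls, and the names `follower_append` and `repair` are hypothetical. The example logs are made up and are not those of Figure 7.

```python
def follower_append(log, prev_index, prev_term, entries):
    """Follower side: consistency check, then overwrite conflicts."""
    if prev_index > len(log):
        return False  # missing the preceding entry
    if prev_index > 0 and log[prev_index - 1][0] != prev_term:
        return False  # preceding entry has a different term
    for offset, entry in enumerate(entries):
        index = prev_index + 1 + offset
        if index <= len(log):
            if log[index - 1][0] != entry[0]:
                del log[index - 1:]  # drop the conflict and all that follow
                log.append(entry)
        else:
            log.append(entry)
    return True


def repair(leader_log, follower_log):
    """Leader side: decrement nextIndex until AppendEntries succeeds.

    Returns the number of AppendEntries attempts that were needed.
    """
    next_index = len(leader_log) + 1  # just after the leader's last entry
    attempts = 0
    while True:
        prev_index = next_index - 1
        prev_term = leader_log[prev_index - 1][0] if prev_index > 0 else 0
        attempts += 1
        if follower_append(follower_log, prev_index, prev_term,
                           leader_log[prev_index:]):
            return attempts
        next_index -= 1  # rejected: step back one entry and retry


leader = [(1, "a"), (1, "b"), (2, "c"), (3, "d")]
follower = [(1, "a"), (1, "b"), (2, "c"), (2, "x"), (2, "y")]
attempts = repair(leader, follower)
```

In this example the first attempt is rejected because the follower's entry at index 4 has term 2 rather than 3. The second attempt matches at index 3, after which the follower's extra entries are removed and its log equals the leader's. The loop always terminates, since with `prev_index == 0` the check cannot fail.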

If desired, the protocol can be optimized to reduce the number of rejected AppendEntries RPCs. For example, when rejecting an AppendEntries request, the follower can include the term of the conflicting entry and the first index it stores for that term. With this information, the leader can decrement nextIndex to bypass all of the conflicting entries in that term; one AppendEntries RPC will be required for each term with conflicting entries, rather than one RPC per entry. In practice, we doubt this optimization is necessary, since failures happen infrequently and it is unlikely that there will be many inconsistent entries.

With this mechanism, a leader does not need to take any special actions to restore log consistency when it comes to power. It just begins normal operation, and the logs automatically converge in response to failures of the AppendEntries consistency check. A leader never overwrites or deletes entries in its own log (the Leader Append-Only Property in Figure 3).

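When a leader crashes and leadership changes hands, the leader's and followers' logs can easily become inconsistent.

Raft's approach is that followers must match the leader. Concretely, the leader first finds the latest entry on which it and the follower agree; by the property above, one matching pair of entries proves that all preceding entries match too.

The leader finds this point in a simple way: it starts sending AppendEntries from nextIndex. When a follower receives AppendEntries, it first checks whether the preceding entry matches and fails the request outright if it does not. So the leader keeps sending AppendEntries, stepping back one entry at a time, until one succeeds, which means the matching point has been found.

This mechanism can of course be optimized, as described above.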

This log replication mechanism exhibits the desirable consensus properties described in Section 2:

Raft can accept, replicate, and apply new log entries as long as a majority of the servers are up; in the normal case a new entry can be replicated with a single round of RPCs to a majority of the cluster; and a single slow follower will not impact performance.

5.4 Safety

The previous sections described how Raft elects leaders and replicates log entries. However, the mechanisms described so far are not quite sufficient to ensure that each state machine executes exactly the same commands in the same order. For example, a follower might be unavailable while the leader commits several log entries, then it could be elected leader and overwrite these entries with new ones; as a result, different state machines might execute different command sequences.

This section completes the Raft algorithm by adding a restriction on which servers may be elected leader. The restriction ensures that the leader for any given term contains all of the entries committed in previous terms (the Leader Completeness Property from Figure 3). Given the election restriction, we then make the rules for commitment more precise. Finally, we present a proof sketch for the Leader Completeness Property and show how it leads to correct behavior of the replicated state machine.

5.4.1 Election restriction

In any leader-based consensus algorithm, the leader must eventually store all of the committed log entries.

In some consensus algorithms, such as Viewstamped Replication [22], a leader can be elected even if it doesn’t initially contain all of the committed entries. These algorithms contain additional mechanisms to identify the missing entries and transmit them to the new leader, either during the election process or shortly afterwards. Unfortunately, this results in considerable additional mechanism and complexity. Raft uses a simpler approach where it guarantees that all the committed entries from previous terms are present on each new leader from the moment of its election, without the need to transfer those entries to the leader.

This means that log entries only flow in one direction, from leaders to followers, and leaders never overwrite existing entries in their logs.

Raft uses the voting process to prevent a candidate from winning an election unless its log contains all committed entries.

A candidate must contact a majority of the cluster in order to be elected, which means that every committed entry must be present in at least one of those servers.

If the candidate’s log is at least as up-to-date as any other log in that majority (where “up-to-date” is defined precisely below), then it will hold all the committed entries. The RequestVote RPC implements this restriction: the RPC includes information about the candidate’s log, and the voter denies its vote if its own log is more up-to-date than that of the candidate.

Raft determines which of two logs is more up-to-date by comparing the index and term of the last entries in the logs. If the logs have last entries with different terms, then the log with the later term is more up-to-date. If the logs end with the same term, then whichever log is longer is more up-to-date.
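The comparison rule above can be sketched as a small function. This is only an illustrative sketch: the `LogTail` type and the function name are hypothetical, and a real RequestVote handler would also check the candidate's term and whether the voter has already voted in that term.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LogTail:
    """Term and index of the last entry in a log (hypothetical helper type)."""
    last_term: int
    last_index: int


def candidate_is_up_to_date(candidate: LogTail, voter: LogTail) -> bool:
    """Return True if the candidate's log is at least as up-to-date as the voter's."""
    if candidate.last_term != voter.last_term:
        # Last entries have different terms: the later term is more up-to-date.
        return candidate.last_term > voter.last_term
    # Same last term: the longer log is more up-to-date (equal length is acceptable).
    return candidate.last_index >= voter.last_index
```

For example, a candidate whose log ends at term 3, index 5 is more up-to-date than a voter whose log ends at term 2, index 9, even though the voter's log is longer.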

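This section turns to safety, that is, the correctness of the data. The restriction it imposes is:

A candidate that becomes leader must contain all committed entries.

Otherwise, a new leader could easily overwrite entries committed in earlier terms, which is easy to see.

Concretely, a committed entry is guaranteed to exist on at least a majority of servers. So when a server votes, it compares the requester's last log entry (term and index) with its own, and refuses to vote if the requester's log is older.

Therefore, a candidate that does not contain all committed entries cannot obtain votes from a majority.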

5.4.2 Committing entries from previous terms

As described in Section 5.3, a leader knows that an entry from its current term is committed once that entry is stored on a majority of the servers. If a leader crashes before committing an entry, future leaders will attempt to finish replicating the entry. However, a leader cannot immediately conclude that an entry from a previous term is committed once it is stored on a majority of servers. Figure 8 illustrates a situation where an old log entry is stored on a majority of servers, yet can still be overwritten by a future leader.

To eliminate problems like the one in Figure 8, Raft never commits log entries from previous terms by counting replicas. Only log entries from the leader’s current term are committed by counting replicas; once an entry from the current term has been committed in this way, then all prior entries are committed indirectly because of the Log Matching Property. There are some situations where a leader could safely conclude that an older log entry is committed (for example, if that entry is stored on every server), but Raft takes a more conservative approach for simplicity.
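A minimal sketch of this commitment rule, as a leader might apply it, is shown below. The function name and the list-based representation are assumptions made for illustration: `log_terms[i - 1]` is taken to be the term of the entry at 1-based index `i` in the leader's log, and `match_index` holds, for every server including the leader, the highest index known to be stored on that server.

```python
from typing import List


def advance_commit_index(log_terms: List[int], match_index: List[int],
                         current_term: int, commit_index: int) -> int:
    """Return the new commit index under the 'current term only' counting rule."""
    majority = len(match_index) // 2 + 1
    # Scan from the end of the log down to just above the current commit index.
    for n in range(len(log_terms), commit_index, -1):
        if log_terms[n - 1] != current_term:
            # Terms never decrease along a log, so every earlier entry is from an
            # older term too; such entries are never committed by counting replicas.
            break
        replicas = sum(1 for m in match_index if m >= n)
        if replicas >= majority:
            # Committing entry n indirectly commits every entry before it.
            return n
    return commit_index
```

With a leader in term 4 whose log terms are `[1, 2]`, an index-2 entry stored on three of five servers is not committed; once a term-4 entry at index 3 reaches three servers, the commit index jumps to 3 and the older entry is committed along with it.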

Raft incurs this extra complexity in the commitment rules because log entries retain their original term numbers when a leader replicates entries from previous terms. In other consensus algorithms, if a new leader replicates entries from prior “terms,” it must do so with its new “term number.” Raft’s approach makes it easier to reason about log entries, since they maintain the same term number over time and across logs. In addition, new leaders in Raft send fewer log entries from previous terms than in other algorithms (other algorithms must send redundant log entries to renumber them before they can be committed).

5.4.3 Safety argument

Given the complete Raft algorithm, we can now argue more precisely that the Leader Completeness Property holds (this argument is based on the safety proof; see Section 9.2). We assume that the Leader Completeness Property does not hold, then we prove a contradiction.

Suppose the leader for term T (leaderT) commits a log entry from its term, but that log entry is not stored by the leader of some future term.

Consider the smallest term U > T whose leader (leaderU) does not store the entry.

1. The committed entry must have been absent from leaderU’s log at the time of its election (leaders never delete or overwrite entries).

2. leaderT replicated the entry on a majority of the cluster, and leaderU received votes from a majority of the cluster. Thus, at least one server (“the voter”) both accepted the entry from leaderT and voted for leaderU, as shown in Figure 9. The voter is key to reaching a contradiction.

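......

Well, what follows is a long proof that boils down to this: if leaderU did not store the entry, it could not have been elected leader. To me this seems self-evident.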

Given the Leader Completeness Property, we can prove the State Machine Safety Property from Figure 3, which states that if a server has applied a log entry at a given index to its state machine, no other server will ever apply a different log entry for the same index. At the time a server applies a log entry to its state machine, its log must be identical to the leader’s log up through that entry and the entry must be committed. Now consider the lowest term in which any server applies a given log index; the Leader Completeness Property guarantees that the leaders for all higher terms will store that same log entry, so servers that apply the index in later terms will apply the same value.

Thus, the State Machine Safety Property holds.

Finally, Raft requires servers to apply entries in log index order. Combined with the State Machine Safety Property, this means that all servers will apply exactly the same set of log entries to their state machines, in the same order.

5.6 Timing and availability

One of our requirements for Raft is that safety must not depend on timing: the system must not produce incorrect results just because some event happens more quickly or slowly than expected.

However, availability (the ability of the system to respond to clients in a timely manner) must inevitably depend on timing.

For example, if message exchanges take longer than the typical time between server crashes, candidates will not stay up long enough to win an election; without a steady leader, Raft cannot make progress.

Leader election is the aspect of Raft where timing is most critical.

Raft will be able to elect and maintain a steady leader as long as the system satisfies the following timing requirement:

broadcastTime ≪ electionTimeout ≪ MTBF

In this inequality, broadcastTime is the average time it takes a server to send RPCs in parallel to every server in the cluster and receive their responses; electionTimeout is the election timeout described in Section 5.2; and MTBF is the average time between failures for a single server.

The broadcast time should be an order of magnitude less than the election timeout so that leaders can reliably send the heartbeat messages required to keep followers from starting elections; given the randomized approach used for election timeouts, this inequality also makes split votes unlikely. The election timeout should be a few orders of magnitude less than MTBF so that the system makes steady progress.

When the leader crashes, the system will be unavailable for roughly the election timeout; we would like this to represent only a small fraction of overall time.

The broadcast time and MTBF are properties of the underlying system, while the election timeout is something we must choose. Raft’s RPCs typically require the recipient to persist information to stable storage, so the broadcast time may range from 0.5ms to 20ms, depending on storage technology. As a result, the election timeout is likely to be somewhere between 10ms and 500ms. Typical server MTBFs are several months or more, which easily satisfies the timing requirement.
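As a rough worked check of the timing requirement, the sketch below encodes "an order of magnitude" as a factor of 10 and "a few orders of magnitude" as a factor of at least 100. Both factors, the function name, and the one-month MTBF constant are assumptions chosen for illustration, not values fixed by Raft.

```python
# Rough single-server MTBF of one month, in milliseconds (assumed for illustration).
ONE_MONTH_MS = 30 * 24 * 60 * 60 * 1000


def timing_requirement_holds(broadcast_ms: float,
                             election_timeout_ms: float,
                             mtbf_ms: float,
                             broadcast_factor: float = 10.0,
                             mtbf_factor: float = 100.0) -> bool:
    """Check broadcastTime << electionTimeout << MTBF with assumed safety factors."""
    heartbeats_reliable = election_timeout_ms >= broadcast_factor * broadcast_ms
    steady_progress = mtbf_ms >= mtbf_factor * election_timeout_ms
    return heartbeats_reliable and steady_progress
```

With a 20 ms broadcast time, a 500 ms election timeout satisfies the check against a one-month MTBF, whereas a 50 ms election timeout does not, because it is only 2.5 times the broadcast time.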

This section discusses how timing affects availability.

6 Cluster membership changes

Up until now we have assumed that the cluster configuration (the set of servers participating in the consensus algorithm) is fixed.

In practice, it will occasionally be necessary to change the configuration, for example to replace servers when they fail or to change the degree of replication.

Although this can be done by taking the entire cluster off-line, updating configuration files, and then restarting the cluster, this would leave the cluster unavailable during the changeover. In addition, if there are any manual steps, they risk operator error. In order to avoid these issues, we decided to automate configuration changes and incorporate them into the Raft consensus algorithm.

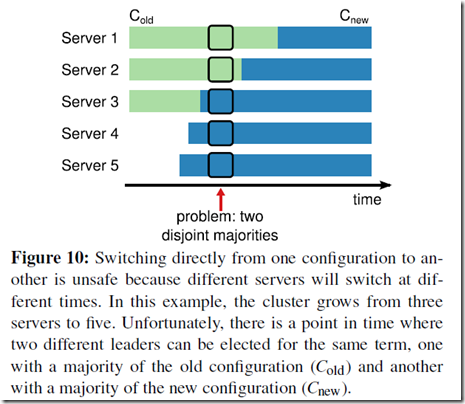

For the configuration change mechanism to be safe, there must be no point during the transition where it is possible for two leaders to be elected for the same term. Unfortunately, any approach where servers switch directly from the old configuration to the new configuration is unsafe. It isn’t possible to atomically switch all of the servers at once, so the cluster can potentially split into two independent majorities during the transition (see Figure 10).

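How do we handle changes to the set of servers in the cluster? Shutting everything down to edit configuration files is far too crude.

But problems can easily arise during the transition, for example two leaders being elected at the same time.

As the figure above shows, while the cluster grows from 3 servers to 5, servers 1 and 2 still use the old configuration, server 3 has already switched to the new one, and the newly added servers 4 and 5 necessarily use the new one.

Servers 1 and 2 can then elect a leader, since 2 votes are a majority of 3; likewise, servers 3, 4, and 5 form a majority of 5 and can elect another.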
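The split described above can be checked with a few lines of set arithmetic. This is a sketch of the scenario only; the server numbering follows the example in the notes, and the helper name `is_majority` is hypothetical.

```python
from typing import Set


def is_majority(voters: Set[int], config: Set[int]) -> bool:
    """True if the voters that belong to config form a strict majority of it."""
    return len(voters & config) > len(config) / 2


c_old = {1, 2, 3}            # configuration before the change
c_new = {1, 2, 3, 4, 5}      # configuration after the change

still_on_old = {1, 2}        # servers that have not switched yet
already_on_new = {3, 4, 5}   # servers that already use the new configuration

# Each group is a majority under the configuration it believes in,
# yet the two groups share no server, so nothing stops two leaders.
split_possible = (is_majority(still_on_old, c_old)
                  and is_majority(already_on_new, c_new)
                  and still_on_old.isdisjoint(already_on_new))
```

Here `split_possible` evaluates to `True`, which is exactly the unsafe situation a direct switch allows.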

In order to ensure safety, configuration changes must use a two-phase approach.

There are a variety of ways to implement the two phases. For example, some systems (e.g., [22]) use the first phase to disable the old configuration so it cannot process client requests; then the second phase enables the new configuration.

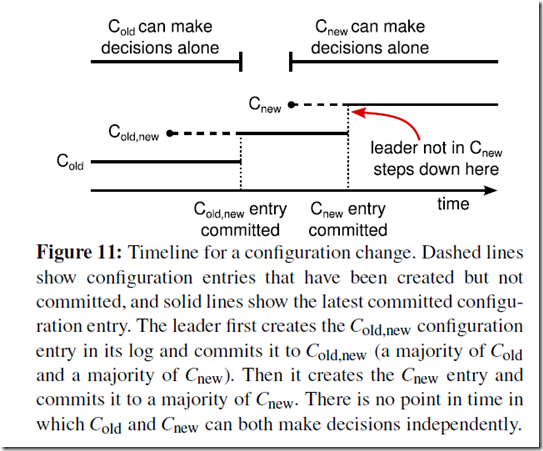

In Raft the cluster first switches to a transitional configuration we call joint consensus; once the joint consensus has been committed, the system then transitions to the new configuration.

The joint consensus combines both the old and new configurations:

• Log entries are replicated to all servers in both configurations.

• Any server from either configuration may serve as leader.

• Agreement (for elections and entry commitment) requires separate majorities from both the old and new configurations.

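Raft introduces joint consensus. During the transition:

1. All log entries are replicated to all servers (in both the old and new configurations).

2. Any server (from either configuration) may be elected leader.

3. Agreement requires a majority of the old configuration and a majority of the new configuration at the same time.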
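The agreement rule for joint consensus can be sketched as follows. The function names are hypothetical, and the sets of integers stand in for server identifiers.

```python
from typing import Set


def has_majority(supporters: Set[int], config: Set[int]) -> bool:
    """True if the supporters that belong to config form a strict majority of it."""
    return len(supporters & config) > len(config) / 2


def joint_agreement(supporters: Set[int], c_old: Set[int], c_new: Set[int]) -> bool:
    """Under joint consensus, a vote or commit needs separate majorities of both configurations."""
    return has_majority(supporters, c_old) and has_majority(supporters, c_new)
```

With `c_old = {1, 2, 3}` and `c_new = {1, 2, 3, 4, 5}`, neither `{1, 2}` nor `{3, 4, 5}` reaches agreement on its own, while `{1, 2, 4}` does, because it holds two of three old servers and three of five new ones.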

The joint consensus allows individual servers to transition between configurations at different times without compromising safety. Furthermore, joint consensus allows the cluster to continue servicing client requests throughout the configuration change.

Cluster configurations are stored and communicated using special entries in the replicated log; Figure 11 illustrates the configuration change process.

When the leader receives a request to change the configuration from Cold to Cnew, it stores the configuration for joint consensus (Cold,new in the figure) as a log entry and replicates that entry using the mechanisms described previously.

Once a given server adds the new configuration entry to its log, it uses that configuration for all future decisions (a server always uses the latest configuration in its log, regardless of whether the entry is committed). This means that the leader will use the rules of Cold,new to determine when the log entry for Cold,new is committed.

If the leader crashes, a new leader may be chosen under either Cold or Cold,new, depending on whether the winning candidate has received Cold,new.

In any case, Cnew cannot make unilateral decisions during this period.

Once Cold,new has been committed, neither Cold nor Cnew can make decisions without approval of the other, and the Leader Completeness Property ensures that only servers with the Cold,new log entry can be elected as leader. It is now safe for the leader to create a log entry describing Cnew and replicate it to the cluster. Again, this configuration will take effect on each server as soon as it is seen.

When the new configuration has been committed under the rules of Cnew, the old configuration is irrelevant and servers not in the new configuration can be shut down. As shown in Figure 11, there is no time when Cold and Cnew can both make unilateral decisions; this guarantees safety.

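First, a configuration is just a special kind of entry, so changing the configuration also means committing a new log entry.

For safety this is done in two phases. Cold,new is first replicated to all servers so that every server knows about both the old and new configurations; this removes the possibility of the separate elections described earlier, because agreement now requires a majority under both the old and the new configuration.

Then the new configuration is replicated to the servers in Cnew; once it is committed, the servers that are not in the new configuration are shut down.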

There are three more issues to address for reconfiguration.

The first issue is that new servers may not initially store any log entries. If they are added to the cluster in this state, it could take quite a while for them to catch up, during which time it might not be possible to commit new log entries. In order to avoid availability gaps, Raft introduces an additional phase before the configuration change, in which the new servers join the cluster as non-voting members (the leader replicates log entries to them, but they are not considered for majorities).

Once the new servers have caught up with the rest of the cluster, the reconfiguration can proceed as described above.

The first issue is cold start: a new server must first catch up on the log, and only then is the configuration changed.

The second issue is that the cluster leader may not be part of the new configuration. In this case, the leader steps down (returns to follower state) once it has committed the Cnew log entry.

This means that there will be a period of time (while it is committing Cnew) when the leader is managing a cluster that does not include itself; it replicates log entries but does not count itself in majorities.

The leader transition occurs when Cnew is committed because this is the first point when the new configuration can operate independently (it will always be possible to choose a leader from Cnew).

Before this point, it may be the case that only a server from Cold can be elected leader.

The second issue: when the leader is not in the new configuration, there is a period during which it manages a cluster it does not belong to.

The third issue is that removed servers (those not in Cnew) can disrupt the cluster.

These servers will not receive heartbeats, so they will time out and start new elections.

They will then send RequestVote RPCs with new term numbers, and this will cause the current leader to revert to follower state.

A new leader will eventually be elected, but the removed servers will time out again and the process will repeat, resulting in poor availability.

To prevent this problem, servers disregard RequestVote RPCs when they believe a current leader exists.

Specifically, if a server receives a RequestVote RPC within the minimum election timeout of hearing from a current leader, it does not update its term or grant its vote.

This does not affect normal elections, where each server waits at least a minimum election timeout before starting an election. However, it helps avoid disruptions from removed servers: if a leader is able to get heartbeats to its cluster, then it will not be deposed by larger term numbers.
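The rule for disregarding RequestVote RPCs can be sketched as a single time comparison. The function name and the millisecond timestamps are assumptions made for illustration; a real server would read them from its own clock and configuration.

```python
def should_consider_request_vote(now_ms: int,
                                 last_leader_contact_ms: int,
                                 min_election_timeout_ms: int) -> bool:
    """Return False while the server still believes a current leader exists.

    A RequestVote that arrives within the minimum election timeout of the last
    message from the leader is disregarded: the receiver neither updates its
    term nor grants its vote.
    """
    return now_ms - last_leader_contact_ms >= min_election_timeout_ms
```

For example, with a 150 ms minimum election timeout, a RequestVote arriving 50 ms after the last heartbeat is disregarded, while one arriving 200 ms after it is processed normally.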

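The third issue: removed servers may start new elections and disrupt the current cluster. The fix is that a server ignores RequestVote requests while it is still receiving regular heartbeats from the leader.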

7 Log compaction

Raft’s log grows during normal operation to incorporate more client requests, but in a practical system, it cannot grow without bound. As the log grows longer, it occupies more space and takes more time to replay.

This will eventually cause availability problems without some mechanism to discard obsolete information that has accumulated in the log.

Snapshotting is the simplest approach to compaction.

In snapshotting, the entire current system state is written to a snapshot on stable storage, then the entire log up to that point is discarded.

Snapshotting is used in Chubby and ZooKeeper, and the remainder of this section describes snapshotting in Raft.

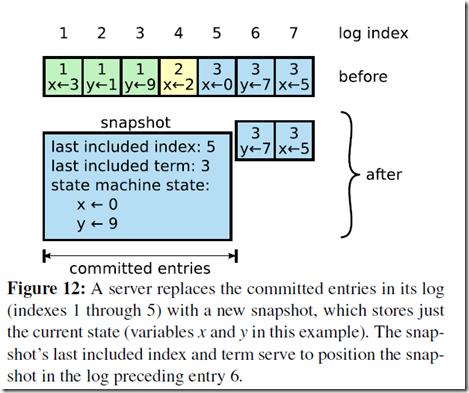

Figure 12 shows the basic idea of snapshotting in Raft.

Each server takes snapshots independently, covering just the committed entries in its log.

Most of the work consists of the state machine writing its current state to the snapshot.

Raft also includes a small amount of metadata in the snapshot: the last included index is the index of the last entry in the log that the snapshot replaces (the last entry the state machine had applied), and the last included term is the term of this entry.

These are preserved to support the AppendEntries consistency check for the first log entry following the snapshot, since that entry needs a previous log index and term.

To enable cluster membership changes (Section 6), the snapshot also includes the latest configuration in the log as of last included index. Once a server completes writing a snapshot, it may delete all log entries up through the last included index, as well as any prior snapshot.

The leader uses a new RPC called InstallSnapshot to send snapshots to followers that are too far behind; see Figure 13. When a follower receives a snapshot with this RPC, it must decide what to do with its existing log entries.

Usually the snapshot will contain new information not already in the recipient’s log. In this case, the follower discards its entire log; it is all superseded by the snapshot and may possibly have uncommitted entries that conflict with the snapshot. If instead the follower receives a snapshot that describes a prefix of its log (due to retransmission or by mistake), then log entries covered by the snapshot are deleted but entries following the snapshot are still valid and must be retained.
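A minimal sketch of how a follower might treat its existing log when a snapshot arrives is given below. The tuple layout of a log entry and the function name are hypothetical. Treating "the snapshot describes a prefix of the log" as "the log holds an entry with the same index and term as the snapshot's last included entry" is an assumption of this sketch.

```python
from typing import List, Tuple

# (index, term, command): a hypothetical in-memory log entry layout.
Entry = Tuple[int, int, str]


def log_after_snapshot(log: List[Entry],
                       last_included_index: int,
                       last_included_term: int) -> List[Entry]:
    """Return the log entries a follower keeps after installing a snapshot."""
    for pos, (index, term, _command) in enumerate(log):
        if index == last_included_index and term == last_included_term:
            # The snapshot covers a prefix of the log: drop the covered entries
            # and retain everything that follows the snapshot.
            return log[pos + 1:]
    # The snapshot contains information not in the log (or conflicts with it):
    # the entire log is superseded and discarded.
    return []
```

Given the log `[(1, 1, "a"), (2, 1, "b"), (3, 2, "c")]`, a snapshot ending at index 2, term 1 leaves `[(3, 2, "c")]`, while a snapshot ending at index 5, term 3 leaves an empty log.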

This snapshotting approach departs from Raft’s strong leader principle, since followers can take snapshots without the knowledge of the leader. However, we think this departure is justified. While having a leader helps avoid conflicting decisions in reaching consensus, consensus has already been reached when snapshotting, so no decisions conflict. Data still only flows from leaders to followers; followers can now simply reorganize their data.

8 Client interaction

This section describes how clients interact with Raft, including how clients find the cluster leader and how Raft supports linearizable semantics [10].

These issues apply to all consensus-based systems, and Raft’s solutions are similar to other systems.

Clients of Raft send all of their requests to the leader.

When a client first starts up, it connects to a randomly chosen server.

If the client’s first choice is not the leader, that server will reject the client’s request and supply information about the most recent leader it has heard from (AppendEntries requests include the network address of the leader).

If the leader crashes, client requests will time out; clients then try again with randomly chosen servers.

Our goal for Raft is to implement linearizable semantics (each operation appears to execute instantaneously, exactly once, at some point between its invocation and its response).

However, as described so far Raft can execute a command multiple times: for example, if the leader crashes after committing the log entry but before responding to the client, the client will retry the command with a new leader, causing it to be executed a second time. The solution is for clients to assign unique serial numbers to every command. Then, the state machine tracks the latest serial number processed for each client, along with the associated response. If it receives a command whose serial number has already been executed, it responds immediately without re-executing the request.
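The serial-number scheme can be illustrated with a toy state machine. This is a sketch under stated assumptions: the `DedupCounter` class is hypothetical, the "command" is simply adding an amount to a counter, and each client is assumed to have at most one outstanding request, so a retry always carries that client's latest serial number.

```python
from typing import Dict, Tuple


class DedupCounter:
    """Toy state machine: a counter with per-client duplicate detection."""

    def __init__(self) -> None:
        self.value = 0
        # client_id -> (latest serial number processed, response returned for it)
        self.sessions: Dict[str, Tuple[int, int]] = {}

    def apply(self, client_id: str, serial: int, amount: int) -> int:
        last = self.sessions.get(client_id)
        if last is not None:
            last_serial, last_response = last
            if serial == last_serial:
                # Already executed: answer from the cache without re-executing.
                return last_response
            if serial < last_serial:
                # Older than the latest processed command; no response is kept for it.
                raise ValueError("stale serial number")
        self.value += amount
        self.sessions[client_id] = (serial, self.value)
        return self.value
```

If a client sends `apply("c1", 1, 5)` twice because the first response was lost, the counter ends at 5 rather than 10, and both calls return 5.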

The problem of a command possibly being executed more than once.

Read-only operations can be handled without writing anything into the log.

However, with no additional measures, this would run the risk of returning stale data, since the leader responding to the request might have been superseded by a newer leader of which it is unaware.

Linearizable reads must not return stale data, and Raft needs two extra precautions to guarantee this without using the log.

First, a leader must have the latest information on which entries are committed. The Leader Completeness Property guarantees that a leader has all committed entries, but at the start of its term, it may not know which those are. To find out, it needs to commit an entry from its term. Raft handles this by having each leader commit a blank no-op entry into the log at the start of its term.

Second, a leader must check whether it has been deposed before processing a read-only request (its information may be stale if a more recent leader has been elected).

Raft handles this by having the leader exchange heartbeat messages with a majority of the cluster before responding to read-only requests. Alternatively, the leader could rely on the heartbeat mechanism to provide a form of lease [9], but this would rely on timing for safety (it assumes bounded clock skew).
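The two precautions can be combined into one guard that a leader evaluates before answering a read-only request. This is a simplified sketch: the function and parameter names are hypothetical, `heartbeat_acks` is assumed to count followers that answered the latest heartbeat round, and the leader is assumed to count itself toward the majority.

```python
def can_serve_read(noop_committed: bool, heartbeat_acks: int, cluster_size: int) -> bool:
    """Decide whether a leader may answer a read-only request without using the log."""
    majority = cluster_size // 2 + 1
    # Precaution 1: the no-op entry from this term is committed, so the leader
    # knows which entries are committed.
    # Precaution 2: a majority (leader included) confirmed the leader's authority
    # in a heartbeat round, so it has not been deposed.
    return noop_committed and heartbeat_acks + 1 >= majority
```

In a five-server cluster, two follower acknowledgements are enough once the no-op is committed; a single acknowledgement, or an uncommitted no-op, is not.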

The read problem: how to avoid reading stale data.

9 Implementation and evaluation

We have implemented Raft as part of a replicated state machine that stores configuration information for RAMCloud [33] and assists in failover of the RAMCloud coordinator. The Raft implementation contains roughly 2000 lines of C++ code, not including tests, comments, or blank lines. The source code is freely available [23]. There are also about 25 independent third-party open source implementations [34] of Raft in various stages of development, based on drafts of this paper. Also, various companies are deploying Raft-based systems [34].

9.3 Performance

Raft’s performance is similar to other consensus algorithms such as Paxos.

The most important case for performance is when an established leader is replicating new log entries.

Raft achieves this using the minimal number of messages (a single round-trip from the leader to half the cluster). It is also possible to further improve Raft’s performance.

For example, it easily supports batching and pipelining requests for higher throughput and lower latency.

Various optimizations have been proposed in the literature for other algorithms; many of these could be applied to Raft, but we leave this to future work.

10 Related work

There have been numerous publications related to consensus algorithms, many of which fall into one of the following categories:

• Lamport’s original description of Paxos [15], and attempts to explain it more clearly [16, 20, 21].

• Elaborations of Paxos, which fill in missing details and modify the algorithm to provide a better foundation for implementation [26, 39, 13].

• Systems that implement consensus algorithms, such as Chubby [2, 4], ZooKeeper [11, 12], and Spanner [6].

The algorithms for Chubby and Spanner have not been published in detail, though both claim to be based on Paxos.

ZooKeeper’s algorithm has been published in more detail, but it is quite different from Paxos.

• Performance optimizations that can be applied to Paxos [18, 19, 3, 25, 1, 27].

• Oki and Liskov’s Viewstamped Replication (VR), an alternative approach to consensus developed around the same time as Paxos.

The original description [29] was intertwined with a protocol for distributed transactions, but the core consensus protocol has been separated in a recent update [22]. VR uses a leader-based approach with many similarities to Raft.

The greatest difference between Raft and Paxos is Raft’s strong leadership:

Raft uses leader election as an essential part of the consensus protocol, and it concentrates as much functionality as possible in the leader.

This approach results in a simpler algorithm that is easier to understand.

For example, in Paxos, leader election is orthogonal to the basic consensus protocol: it serves only as a performance optimization and is not required for achieving consensus.

However, this results in additional mechanism:

Paxos includes both a two-phase protocol for basic consensus and a separate mechanism for leader election.

In contrast, Raft incorporates leader election directly into the consensus algorithm and uses it as the first of the two phases of consensus. This results in less mechanism than in Paxos.

Compared with Paxos, the main difference in Raft is its strong leadership.

Like Raft, VR and ZooKeeper are leader-based and therefore share many of Raft’s advantages over Paxos.

However, Raft has less mechanism than VR or ZooKeeper because it minimizes the functionality in non-leaders.

For example, log entries in Raft flow in only one direction: outward from the leader in AppendEntries RPCs.

In VR log entries flow in both directions (leaders can receive log entries during the election process); this results in additional mechanism and complexity.

The published description of ZooKeeper also transfers log entries both to and from the leader, but the implementation is apparently more like Raft [35].

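Moreover, although ZooKeeper and VR are also leader-based, Raft minimizes the functionality of non-leaders and concentrates responsibility in the leader, which simplifies the problem considerably; for example, in Raft data flows in only one direction, from the leader to followers.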

Raft has fewer message types than any other algorithm for consensus-based log replication that we are aware of.

For example, we counted the message types VR and ZooKeeper use for basic consensus and membership changes (excluding log compaction and client interaction, as these are nearly independent of the algorithms).

VR and ZooKeeper each define 10 different message types, while Raft has only 4 message types (two RPC requests and their responses).

Raft’s messages are a bit more dense than the other algorithms’, but they are simpler collectively.

In addition, VR and ZooKeeper are described in terms of transmitting entire logs during leader changes; additional message types will be required to optimize these mechanisms so that they are practical.

Raft has fewer message types and a smaller state space, so it is simpler.

Raft’s strong leadership approach simplifies the algorithm, but it precludes some performance optimizations.

For example, Egalitarian Paxos (EPaxos) can achieve higher performance under some conditions with a leaderless approach [27]. EPaxos exploits commutativity in state machine commands.

Any server can commit a command with just one round of communication as long as other commands that are proposed concurrently commute with it.

However, if commands that are proposed concurrently do not commute with each other, EPaxos requires an additional round of communication.

Because any server may commit commands, EPaxos balances load well between servers and is able to achieve lower latency than Raft in WAN settings. However, it adds significant complexity to Paxos.

The performance cost of using a strong leader.

Several different approaches for cluster membership changes have been proposed or implemented in other work, including Lamport’s original proposal [15], VR [22], and SMART [24].

We chose the joint consensus approach for Raft because it leverages the rest of the consensus protocol, so that very little additional mechanism is required for membership changes.

Lamport’s α-based approach was not an option for Raft because it assumes consensus can be reached without a leader.

In comparison to VR and SMART, Raft’s reconfiguration algorithm has the advantage that membership changes can occur without limiting the processing of normal requests; in contrast, VR stops all normal processing during configuration changes, and SMART imposes an α-like limit on the number of outstanding requests.

Raft’s approach also adds less mechanism than either VR or SMART.

For cluster membership changes, Raft’s protocol is simpler than the alternatives and does not need to limit normal requests.
