Kubernetes Study Notes

Kubernetes is an open-source system for automating the deployment, scaling, and management of containerized applications.

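This article uses Kubernetes 1.23.6. Some of the captured command outputs below report v1.20.9 and different IP addresses; they appear to have been recorded on an earlier installation.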

1. Introduction

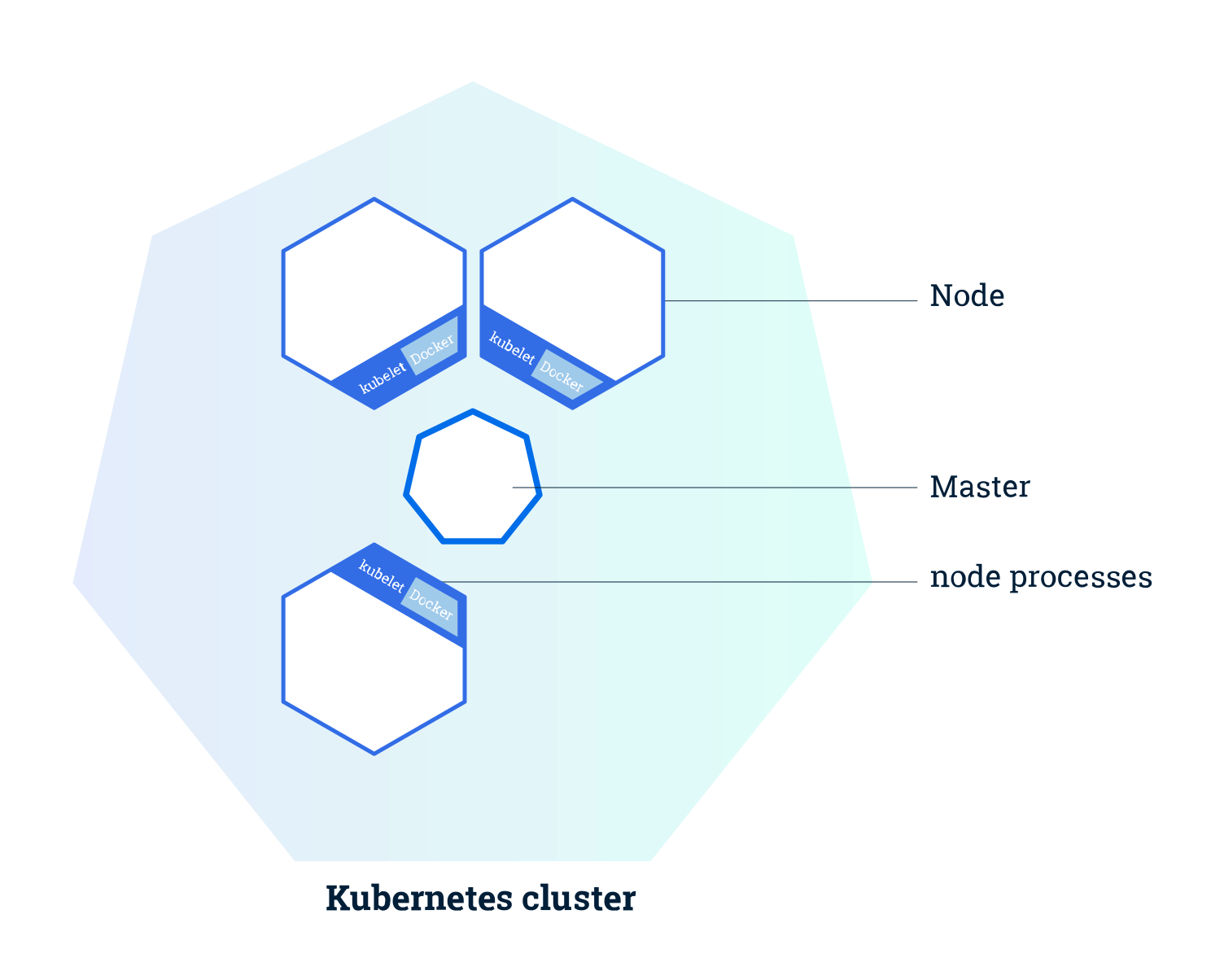

2. Architecture

2.1. Control Plane

Makes global decisions about the cluster, such as where to schedule Pods.

Controller manager

The component that runs the controller processes on the control-plane node. It includes:

- Node controller
- Job controller
- Endpoints controller
- Service account and token controllers

Etcd

The key-value store that holds all Kubernetes cluster data.

Scheduler

Watches for newly created Pods that have no node assigned and selects a node for each of them to run on.

API server

Exposes the Kubernetes API; kubectl and the other components talk to the cluster through it.

2.2. Node

kubelet

An agent that runs on every node and makes sure the containers described in Pod specs are running.

kube-proxy

A network proxy that runs on every node and maintains the network rules that implement Services.

3. Installation

Node plan

| Hostname | Host IP |
|---|---|
| k-master | 124.223.4.217 |
| k-cluster2 | 124.222.59.241 |
| k-cluster3 | 150.158.24.200 |

3.1. Install Docker

List the available Docker versions:

yum list docker-ce --showduplicates

#!/bin/bash
# remove old Docker packages
yum remove -y docker \
  docker-client \
  docker-client-latest \
  docker-common \
  docker-latest \
  docker-latest-logrotate \
  docker-logrotate \
  docker-engine
# install dependencies
yum install -y yum-utils
# set up the Docker yum repo
yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
# install Docker
yum -y install docker-ce-20.10.9-3.el7 docker-ce-cli-20.10.9-3.el7 containerd.io
# start Docker and enable it at boot
systemctl enable docker --now
# Docker daemon config. The Aliyun registry mirror
# ("registry-mirrors": ["https://12sotewv.mirror.aliyuncs.com"]) no longer works,
# so it is left out. The systemd cgroup driver is kept because kubeadm 1.23
# configures kubelet with systemd by default and the two drivers must match.
sudo mkdir -p /etc/docker
sudo tee /etc/docker/daemon.json <<-'EOF'
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2"
}
EOF
# restart Docker so it reads daemon.json (enable --now does not restart a running service)
sudo systemctl daemon-reload
sudo systemctl restart docker
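Before running kubeadm init, it is worth checking that Docker really uses the systemd cgroup driver (the native.cgroupdriver=systemd entry in daemon.json): kubeadm 1.23 sets kubelet up with systemd by default, and kubelet fails to start when the two drivers disagree. The sketch below is a hypothetical helper, cgroup_driver_is_systemd, that reads docker info output on stdin so it can be tried without a running daemon.

```shell
#!/bin/bash
# Hypothetical helper: succeeds when `docker info` output (read from stdin)
# reports the systemd cgroup driver, which kubelet 1.23 expects by default.
cgroup_driver_is_systemd() {
  # docker info prints a line such as " Cgroup Driver: systemd"
  grep -Eq '^[[:space:]]*Cgroup Driver:[[:space:]]*systemd[[:space:]]*$'
}

# Usage on a real host:
#   docker info 2>/dev/null | cgroup_driver_is_systemd && echo "cgroup driver OK"
# docker info can also print just the driver name:
#   docker info --format '{{.CgroupDriver}}'
```

If the check fails, fix daemon.json and restart Docker before running kubeadm init, otherwise kubelet keeps restarting.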

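3.2. Prepare the nodes

#!/bin/bash
# set SELinux to permissive (effectively disabled)
sudo setenforce 0
sudo sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config
# turn swap off now and comment out swap entries in /etc/fstab so it stays off
sudo swapoff -a
sudo sed -ri 's/.*swap.*/#&/' /etc/fstab
# let iptables see bridged traffic: load br_netfilter at boot and right now
cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf
br_netfilter
EOF
sudo modprobe br_netfilter
# kernel parameters; ip_forward is set here so it survives reboots
cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
# apply all sysctl settings
sudo sysctl --system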
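The settings from this section can be verified before kubeadm init instead of waiting for its preflight errors (such as the ip_forward error in 5.2). A minimal sketch, assuming the values are read from /proc; the function name k8s_preflight and its optional ROOT argument are hypothetical, the argument only exists so the check can be tried against a copy of /proc.

```shell
#!/bin/bash
# Hypothetical preflight helper for the settings in 3.2. It reads the live values
# from /proc; ROOT (optional) is prepended to every path, e.g. for testing.
k8s_preflight() {
  local root="${1:-}" failed=0 key file swaps lines=0
  # both values must be 1; the bridge file only exists once br_netfilter is loaded
  for key in net/ipv4/ip_forward net/bridge/bridge-nf-call-iptables; do
    file="$root/proc/sys/$key"
    if [ "$(cat "$file" 2>/dev/null)" != "1" ]; then
      echo "FAIL: $file is not 1"
      failed=$((failed + 1))
    fi
  done
  # /proc/swaps always starts with a header line; more lines mean swap is on
  swaps="$root/proc/swaps"
  if [ -r "$swaps" ]; then lines=$(wc -l < "$swaps"); fi
  if [ "$lines" -gt 1 ]; then
    echo "FAIL: swap is still enabled"
    failed=$((failed + 1))
  fi
  if [ "$failed" -eq 0 ]; then echo "preflight OK"; fi
  return "$failed"
}
```

On a node, run k8s_preflight without arguments; the return value is the number of failed checks.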

3.3. Install Kubernetes

#!/bin/bash
# set up the Kubernetes yum repo (the second gpgkey URL is a continuation line and must be indented)
cat <<EOF | sudo tee /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
       http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
exclude=kubelet kubeadm kubectl
EOF
# install kubelet, kubeadm and kubectl 1.23.6
sudo yum install -y kubelet-1.23.6 kubeadm-1.23.6 kubectl-1.23.6 --disableexcludes=kubernetes
# kubelet restarts in a loop until kubeadm init/join gives it a configuration; this is expected
sudo systemctl enable kubelet --now

4. Uninstall

# reset first: kubeadm reset needs the kubeadm binary, so run it before removing the packages
sudo kubeadm reset -f
sudo yum -y remove kubelet kubeadm kubectl
sudo rm -rvf $HOME/.kube
sudo rm -rvf /etc/kubernetes/
sudo rm -rvf /etc/systemd/system/kubelet.service.d
sudo rm -rvf /etc/systemd/system/kubelet.service
sudo rm -rvf /usr/bin/kube*
sudo rm -rvf /etc/cni
sudo rm -rvf /opt/cni
sudo rm -rvf /var/lib/etcd
sudo rm -rvf /var/etcd

5. Initialize the control-plane node (control-plane node only)

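5.1. Initialize

Run this on k-master only. 10.0.4.6 appears to be k-master's private (VPC) address in this setup; replace it with your own node's IP. The Pod CIDR 192.168.0.0/16 matches Calico's default IP pool.

kubeadm init \
  --apiserver-advertise-address=10.0.4.6 \
  --image-repository registry.aliyuncs.com/google_containers \
  --kubernetes-version v1.23.6 \
  --service-cidr=10.96.0.0/16 \
  --pod-network-cidr=192.168.0.0/16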
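The Pod network (--pod-network-cidr), the Service network (--service-cidr) and the nodes' own network (10.0.4.x in this setup) should not overlap, otherwise Pod or Service traffic can be routed to the wrong place. Two CIDR blocks either nest or are disjoint, so they overlap exactly when their network parts are equal under the shorter prefix. The helpers ip_to_int and cidrs_overlap below are a hypothetical sketch of that check; they assume canonical dotted-quad addresses without leading zeros.

```shell
#!/bin/bash
# Hypothetical helper: convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Hypothetical helper: succeed (exit 0) when two IPv4 CIDR blocks overlap.
cidrs_overlap() {
  local net1="${1%/*}" len1="${1#*/}" net2="${2%/*}" len2="${2#*/}"
  # compare both networks under the shorter (larger) prefix
  local len=$(( len1 < len2 ? len1 : len2 ))
  local mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$net1") & mask) == ($(ip_to_int "$net2") & mask) ))
}

# Usage sketch (10.0.4.0/22 is a hypothetical node subnet):
#   for cidr in 10.96.0.0/16 10.0.4.0/22; do
#     cidrs_overlap 192.168.0.0/16 "$cidr" && echo "192.168.0.0/16 overlaps $cidr"
#   done
```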

5.2. Common initialization error

[root@k-master ~]# kubeadm init \

> --apiserver-advertise-address=10.0.4.6 \

> --image-repository registry.aliyuncs.com/google_containers \

> --kubernetes-version v1.23.6 \

> --service-cidr=10.96.0.0/16 \

> --pod-network-cidr=192.168.0.0/16

[init] Using Kubernetes version: v1.20.9

[preflight] Running pre-flight checks

[WARNING SystemVerification]: this Docker version is not on the list of validated versions: 20.10.5. Latest validated version: 19.03

[WARNING Hostname]: hostname "k-master" could not be reached

[WARNING Hostname]: hostname "k-master": lookup k-master on 183.60.83.19:53: no such host

error execution phase preflight: [preflight] Some fatal errors occurred:

[ERROR FileContent--proc-sys-net-ipv4-ip_forward]: /proc/sys/net/ipv4/ip_forward contents are not set to 1

[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`

To see the stack trace of this error execute with --v=5 or higher

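In that case, enable IP forwarding as root with the command below and run kubeadm init again. The setting only lasts until the next reboot; adding net.ipv4.ip_forward = 1 to /etc/sysctl.d/k8s.conf makes it permanent.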

echo "1" > /proc/sys/net/ipv4/ip_forward

5.3. Successful initialization

[root@k-master ~]# kubeadm init \

> --apiserver-advertise-address=10.0.4.6 \

> --image-repository registry.aliyuncs.com/google_containers \

> --kubernetes-version v1.23.6 \

> --service-cidr=10.96.0.0/16 \

> --pod-network-cidr=192.168.0.0/16

[init] Using Kubernetes version: v1.23.6

[preflight] Running pre-flight checks

[WARNING SystemVerification]: this Docker version is not on the list of validated versions: 20.10.5. Latest validated version: 19.03

[WARNING Hostname]: hostname "k-master" could not be reached

[WARNING Hostname]: hostname "k-master": lookup k-master on 183.60.83.19:53: no such host

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [k-master kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 150.158.187.211]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [k-master localhost] and IPs [150.158.187.211 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [k-master localhost] and IPs [150.158.187.211 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 15.502637 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.20" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node k-master as control-plane by adding the labels "node-role.kubernetes.io/master=''" and "node-role.kubernetes.io/control-plane='' (deprecated)"

[mark-control-plane] Marking the node k-master as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: llukay.o7amg6bstg9abts3

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 150.158.187.211:6443 --token llukay.o7amg6bstg9abts3 \

--discovery-token-ca-cert-hash sha256:2f6c42689f5d5189947239997224916c94003cf9ed92220487ace5032206b4b9

5.4. Next steps

# as a regular user
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
# alternatively, as root
export KUBECONFIG=/etc/kubernetes/admin.conf

5.5. Install the network plugin (Calico)

curl https://docs.projectcalico.org/v3.20/manifests/calico.yaml -O

kubectl apply -f calico.yaml

5.6. Notes

If you lose the join token (bootstrap tokens expire after 24 hours by default), create a new one:

# print a new join command

kubeadm token create --print-join-command
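kubeadm token create without --print-join-command prints only the token; the --discovery-token-ca-cert-hash value can then be recomputed from the cluster CA. The pipeline below follows the openssl recipe from the kubeadm join documentation (SHA-256 of the CA's DER-encoded public key), wrapped in a hypothetical function ca_cert_hash. It assumes kubeadm's default RSA CA key at /etc/kubernetes/pki/ca.crt.

```shell
#!/bin/bash
# Hypothetical wrapper: print the discovery-token-ca-cert-hash for a CA certificate.
# Assumes an RSA CA key, which is what kubeadm generates by default.
ca_cert_hash() {
  local ca_crt="${1:-/etc/kubernetes/pki/ca.crt}" digest
  # extract the public key, convert it to DER, hash it, keep only the hex digest
  digest=$(openssl x509 -pubkey -noout -in "$ca_crt" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex | sed 's/^.* //')
  echo "sha256:$digest"
}

# Usage sketch on the control-plane node:
#   token=$(kubeadm token create)
#   echo "kubeadm join 150.158.187.211:6443 --token $token --discovery-token-ca-cert-hash $(ca_cert_hash)"
```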

Reset the node after every failed init or join before trying again:

kubeadm reset

5.7. Key file locations

/etc/kubernetes

[root@k-master ~]# cd /etc/kubernetes/

[root@k-master kubernetes]# ls

admin.conf controller-manager.conf kubelet.conf manifests pki scheduler.conf

[root@k-master kubernetes]# cd manifests/

[root@k-master manifests]# ls

etcd.yaml kube-apiserver.yaml kube-controller-manager.yaml kube-scheduler.yaml

5.8. Enable kubectl command completion

yum -y install bash-completion

echo 'source <(kubectl completion bash)' >>~/.bashrc

kubectl completion bash >/etc/bash_completion.d/kubectl

source /usr/share/bash-completion/bash_completion

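5.9. Allow the control-plane node to run Pods

By default, Kubernetes does not schedule Pods on the control-plane node, because kubeadm taints it with node-role.kubernetes.io/master:NoSchedule. Removing the taint (note the trailing -) allows it: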

kubectl taint node k-master node-role.kubernetes.io/master:NoSchedule-

6. Join worker nodes

Run the kubeadm join command printed by kubeadm init on each worker node as root:

[root@k-cluster1 ~]# kubeadm join 150.158.187.211:6443 --token llukay.o7amg6bstg9abts3 \

> --discovery-token-ca-cert-hash sha256:2f6c42689f5d5189947239997224916c94003cf9ed92220487ace5032206b4b9

[preflight] Running pre-flight checks

[WARNING SystemVerification]: this Docker version is not on the list of validated versions: 20.10.5. Latest validated version: 19.03

[WARNING Hostname]: hostname "k-cluster1" could not be reached

[WARNING Hostname]: hostname "k-cluster1": lookup k-cluster1 on 183.60.83.19:53: no such host

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

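7. Remove a node

# mark the node unschedulable (kubectl drain nodeName --ignore-daemonsets also evicts its Pods)
kubectl cordon nodeName
# remove the node from the cluster
kubectl delete node nodeName

A removed node must be reset with kubeadm before it can join the cluster again: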

kubeadm reset

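8. Switch kube-proxy to IPVS mode

kubectl edit cm kube-proxy -n kube-system

Set mode to "ipvs":

mode: "ipvs"

Then delete the existing kube-proxy Pods; the DaemonSet recreates them with the new configuration: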

[root@VM-4-6-centos ~]# kubectl delete pod kube-proxy-frlpp kube-proxy-r4kpw kube-proxy-xk2ss -n kube-system

pod "kube-proxy-frlpp" deleted

pod "kube-proxy-r4kpw" deleted

pod "kube-proxy-xk2ss" deleted

The logs of a new kube-proxy Pod should look like this:

[root@k-master ~]# kubectl logs kube-proxy-wbgnj -n kube-system

I0417 15:28:54.174178 1 node.go:172] Successfully retrieved node IP: 124.222.59.241

I0417 15:28:54.174245 1 server_others.go:142] kube-proxy node IP is an IPv4 address (124.222.59.241), assume IPv4 operation

I0417 15:28:54.205700 1 server_others.go:258] Using ipvs Proxier.

E0417 15:28:54.205937 1 proxier.go:389] can't set sysctl net/ipv4/vs/conn_reuse_mode, kernel version must be at least 4.1

W0417 15:28:54.206077 1 proxier.go:445] IPVS scheduler not specified, use rr by default

I0417 15:28:54.206272 1 server.go:650] Version: v1.20.9

I0417 15:28:54.206603 1 conntrack.go:52] Setting nf_conntrack_max to 131072

I0417 15:28:54.206889 1 config.go:315] Starting service config controller

I0417 15:28:54.206909 1 shared_informer.go:240] Waiting for caches to sync for service config

I0417 15:28:54.206940 1 config.go:224] Starting endpoint slice config controller

I0417 15:28:54.206949 1 shared_informer.go:240] Waiting for caches to sync for endpoint slice config

I0417 15:28:54.307063 1 shared_informer.go:247] Caches are synced for endpoint slice config

I0417 15:28:54.307152 1 shared_informer.go:247] Caches are synced for service config
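IPVS mode also needs the ip_vs kernel modules on every node, so checking each new kube-proxy Pod's log for the chosen proxier is a quick confirmation. The awk filter below is a hypothetical helper, proxy_mode_from_logs; it looks for the "Using <mode> Proxier" wording seen in the log above, which may differ between kube-proxy versions.

```shell
#!/bin/bash
# Hypothetical helper: report which proxier kube-proxy selected, judging from
# the "Using <mode> Proxier" line in its logs (read from stdin).
proxy_mode_from_logs() {
  awk '
    match($0, /Using [A-Za-z]+ Proxier/) {
      split(substr($0, RSTART, RLENGTH), words, " ")
      print words[2]; found = 1; exit
    }
    END { if (!found) print "unknown" }'
}

# Usage sketch on the control-plane node: instead of deleting kube-proxy Pods by
# name, restart the whole DaemonSet, then check every kube-proxy Pod
# (kubeadm labels them k8s-app=kube-proxy):
#   kubectl -n kube-system rollout restart daemonset kube-proxy
#   for p in $(kubectl -n kube-system get pods -l k8s-app=kube-proxy -o name); do
#     echo "$p: $(kubectl -n kube-system logs "$p" | proxy_mode_from_logs)"
#   done
```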

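9. kubectl commands

9.1. Get resources

1) Get node information

# get node information (works wherever kubectl has a kubeconfig; by default only the control-plane node has one)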

[root@VM-4-6-centos ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k-cluster1 Ready <none> 5m40s v1.20.9

k-cluster2 Ready <none> 5m20s v1.20.9

k-master Ready control-plane,master 6m59s v1.20.9

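# set a node's role (the ROLES column is read from node-role.kubernetes.io/<role> labels)
kubectl label node k-cluster1 node-role.kubernetes.io/cluster1=
kubectl label node k-cluster2 node-role.kubernetes.io/cluster2=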

[root@VM-4-6-centos ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k-cluster1 Ready cluster1 6m40s v1.20.9

k-cluster2 Ready cluster2 6m20s v1.20.9

k-master Ready control-plane,master 7m59s v1.20.9

2) Get Pod information

# list the Pods in all namespaces

[root@VM-4-6-centos ~]# kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-577f77cb5c-8gmjp 1/1 Running 0 6m45s

kube-system calico-node-lxzpz 1/1 Running 0 5m44s

kube-system calico-node-qxvfm 1/1 Running 0 6m4s

kube-system calico-node-vvmgh 1/1 Running 0 6m46s

kube-system coredns-7f89b7bc75-cj6rt 1/1 Running 0 7m6s

kube-system coredns-7f89b7bc75-ctb8l 1/1 Running 0 7m6s

kube-system etcd-k-master 1/1 Running 0 7m14s

kube-system kube-apiserver-k-master 1/1 Running 0 7m14s

kube-system kube-controller-manager-k-master 1/1 Running 0 7m14s

kube-system kube-proxy-fc64c 1/1 Running 0 3m10s

kube-system kube-proxy-g2sj2 1/1 Running 0 3m9s

kube-system kube-proxy-gcjfc 1/1 Running 0 3m9s

kube-system kube-scheduler-k-master 1/1 Running 0 7m14s

##################################### Options #####################################

-A (--all-namespaces): list Pods in every namespace, including kube-system
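The -A listing gets long, and a field selector such as --field-selector status.phase!=Running misses Pods that are Running but not ready (like calico-node-gkjpt at 0/1 in the Dashboard section). A hypothetical awk filter, unhealthy_pods, can print only the problem rows; it assumes the default -A column order NAMESPACE NAME READY STATUS.

```shell
#!/bin/bash
# Hypothetical helper: from `kubectl get pods -A` output on stdin, print Pods whose
# STATUS is not Running/Completed, or that are Running with an incomplete READY count.
unhealthy_pods() {
  awk 'NR > 1 {
    split($3, ready, "/")
    if (($4 != "Running" && $4 != "Completed") || ($4 == "Running" && ready[1] != ready[2]))
      print $1 "/" $2 " " $3 " " $4
  }'
}

# Usage:
#   kubectl get pods -A | unhealthy_pods
```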

3) Get Deployment information

[root@k-master ~]# kubectl get deploy

NAME READY UP-TO-DATE AVAILABLE AGE

nginx 1/1 1 1 11m

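9.2. Create resources

1) Create a Deployment

A Deployment is self-healing: if one of its Pods is deleted or fails, a replacement is created automatically. Pod IPs are reachable from every node in the cluster.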

[root@VM-4-6-centos ~]# kubectl create deploy nginx --image=nginx

deployment.apps/nginx created

[root@VM-4-6-centos ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-6799fc88d8-cjmsq 1/1 Running 0 9s

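2) Create a standalone Pod

A Pod started with kubectl run is not managed by a controller, so it is not recreated if it is deleted or fails.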

kubectl run nginx1 --image=nginx

9.3. Delete resources

1) Delete a Deployment

kubectl delete deploy deployName

2) Delete a Pod

kubectl delete pod podName -n nameSpace

9.4. Resource logs and descriptions

Pod logs and description

# show a Pod's logs
kubectl logs podName -n nameSpace
# show a Pod's details and recent events
kubectl describe pod podName -n nameSpace

9.5. Open a shell in a Pod

[root@k-master ~]# kubectl exec -it nginx-6799fc88d8-cjmsq -- /bin/bash

root@nginx-6799fc88d8-cjmsq:/# ls

bin boot dev docker-entrypoint.d docker-entrypoint.sh etc home lib lib64 media mnt opt proc root run sbin srv sys tmp usr var

root@nginx-6799fc88d8-cjmsq:/# exit

exit

Common problem 1

Sometimes you may run into the following error:

[root@k-cluster2 ~]# kubectl exec -it nginx-6799fc88d8-cjmsq -- /bin/bash

The connection to the server localhost:8080 was refused - did you specify the right host or port?

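kubectl on this node has no kubeconfig, so it falls back to localhost:8080. Copy /etc/kubernetes/admin.conf from the control-plane node to the same directory on this node (run scp from /etc/kubernetes on the control-plane node) and point KUBECONFIG at it. Note that admin.conf grants full cluster-admin rights.

scp admin.conf root@150.158.24.200:/etc/kubernetes/admin.conf

# on the worker node
echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile
source ~/.bash_profile

Now exec into the container works without the error: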

[root@k-cluster2 ~]# kubectl exec -it nginx-6799fc88d8-cjmsq -- bash

root@nginx-6799fc88d8-cjmsq:/# ls

bin boot dev docker-entrypoint.d docker-entrypoint.sh etc home lib lib64 media mnt opt proc root run sbin srv sys tmp usr var

root@nginx-6799fc88d8-cjmsq:/# exit

exit

9.6. Scaling

1) Scale out

[root@k-cluster2 ~]# kubectl get deploy

NAME READY UP-TO-DATE AVAILABLE AGE

nginx 1/1 1 1 19h

[root@k-cluster2 ~]# kubectl scale --replicas=3 deploy nginx

deployment.apps/nginx scaled

[root@k-cluster2 ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-6799fc88d8-cjmsq 1/1 Running 0 19h

nginx-6799fc88d8-xg7rr 1/1 Running 0 20s

nginx-6799fc88d8-zdd9c 1/1 Running 0 20s

2) Scale in

[root@k-cluster2 ~]# kubectl scale --replicas=1 deploy nginx

deployment.apps/nginx scaled

[root@k-cluster2 ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-6799fc88d8-cjmsq 1/1 Running 0 19h

9.7. Labels

1) Add a label

[root@k-cluster2 ~]# kubectl get pods --show-labels

NAME READY STATUS RESTARTS AGE LABELS

nginx-6799fc88d8-cjmsq 1/1 Running 0 20h app=nginx,pod-template-hash=6799fc88d8

nginx-6799fc88d8-d2p54 1/1 Running 0 31m app=nginx,pod-template-hash=6799fc88d8

nginx-6799fc88d8-vwrxp 1/1 Running 0 31m app=nginx,pod-template-hash=6799fc88d8

[root@k-cluster2 ~]# kubectl label pod nginx-6799fc88d8-cjmsq hello=666

pod/nginx-6799fc88d8-cjmsq labeled

[root@k-cluster2 ~]# kubectl get pods --show-labels

NAME READY STATUS RESTARTS AGE LABELS

nginx-6799fc88d8-cjmsq 1/1 Running 0 20h app=nginx,hello=666,pod-template-hash=6799fc88d8

nginx-6799fc88d8-d2p54 1/1 Running 0 34m app=nginx,pod-template-hash=6799fc88d8

nginx-6799fc88d8-vwrxp 1/1 Running 0 34m app=nginx,pod-template-hash=6799fc88d8

2) Overwrite a label

[root@k-cluster2 ~]# kubectl label pod nginx-6799fc88d8-cjmsq --overwrite hello=6699

pod/nginx-6799fc88d8-cjmsq labeled

[root@k-cluster2 ~]# kubectl get pods --show-labels

NAME READY STATUS RESTARTS AGE LABELS

nginx-6799fc88d8-cjmsq 1/1 Running 0 20h app=nginx,hello=6699,pod-template-hash=6799fc88d8

nginx-6799fc88d8-d2p54 1/1 Running 0 38m app=nginx,pod-template-hash=6799fc88d8

nginx-6799fc88d8-vwrxp 1/1 Running 0 38m app=nginx,pod-template-hash=6799fc88d8

3) Remove a label

[root@k-cluster2 ~]# kubectl label pod nginx-6799fc88d8-cjmsq hello-

pod/nginx-6799fc88d8-cjmsq labeled

[root@k-cluster2 ~]# kubectl get pods --show-labels

NAME READY STATUS RESTARTS AGE LABELS

nginx-6799fc88d8-cjmsq 1/1 Running 0 20h app=nginx,pod-template-hash=6799fc88d8

nginx-6799fc88d8-d2p54 1/1 Running 0 36m app=nginx,pod-template-hash=6799fc88d8

nginx-6799fc88d8-vwrxp 1/1 Running 0 36m app=nginx,pod-template-hash=6799fc88d8

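9.8. Service

Expose a Deployment as a Service. The Service load-balances requests across the Deployment's Pods: in iptables mode kube-proxy picks a backend at random, while in IPVS mode the default scheduler is round-robin (rr).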

[root@k-cluster2 ~]# kubectl expose deploy nginx --port=8080 --target-port=80 --type=NodePort

service/nginx exposed

[root@k-cluster2 ~]# kubectl get service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 20h

nginx NodePort 10.96.156.44 <none> 8080:30724/TCP 10s

[root@k-cluster2 ~]# kubectl get all

NAME READY STATUS RESTARTS AGE

pod/nginx-6799fc88d8-cjmsq 1/1 Running 0 19h

pod/nginx-6799fc88d8-d2p54 1/1 Running 0 7m19s

pod/nginx-6799fc88d8-vwrxp 1/1 Running 0 7m19s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 20h

service/nginx NodePort 10.96.156.44 <none> 8080:30724/TCP 93s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/nginx 3/3 3 3 19h

NAME DESIRED CURRENT READY AGE

replicaset.apps/nginx-6799fc88d8 3 3 3 19h

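###################################### Options #########################################

# --type=ClusterIP (default): allocate a virtual IP reachable only inside the cluster

# --type=NodePort: additionally open a port (30000-32767 by default) on every node as an external entry point

http://150.158.24.200:30724/ (over the public IP the load balancing is sometimes not visible, apparently because the browser reuses a keep-alive connection; wait a moment and refresh again)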
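The node port is the number after the colon in the PORT(S) column (8080:30724/TCP means service port 8080, node port 30724). A hypothetical helper, nodeport_of, extracts it from such a value, for example to build the URL above in a script; ClusterIP-only values like 443/TCP have no node port and make it fail.

```shell
#!/bin/bash
# Hypothetical helper: extract the node port from a PORT(S) value of
# `kubectl get svc`, e.g. "8080:30724/TCP" -> 30724 (service port:node port/protocol).
nodeport_of() {
  local ports="${1%%,*}"          # keep only the first mapping if there are several
  ports="${ports%/*}"             # drop the "/TCP" protocol suffix
  case "$ports" in
    *:*) echo "${ports#*:}" ;;    # drop the service port before ":"
    *) return 1 ;;                # no node port (ClusterIP service)
  esac
}

# Usage sketch (column 5 of `kubectl get svc --no-headers` is PORT(S)):
#   port=$(nodeport_of "$(kubectl get svc nginx --no-headers | awk '{print $5}')")
#   echo "http://150.158.24.200:$port/"
# kubectl can also print the node port directly:
#   kubectl get svc nginx -o jsonpath='{.spec.ports[0].nodePort}'
```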

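9.9. Rolling update

The Deployment replaces Pods gradually: it starts new Pods and removes old ones step by step until all Pods run the new version (by default at most 25% extra Pods and 25% unavailable Pods at a time).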

[root@k-master ~]# kubectl set image deploy nginx nginx=nginx:1.24.0 --record

deployment.apps/nginx image updated

[root@k-master ~]# kubectl get pods --show-labels

NAME READY STATUS RESTARTS AGE LABELS

nginx-c48b5bb67-ncwpw 1/1 Running 0 28s app=nginx,pod-template-hash=c48b5bb67

nginx-c48b5bb67-snkvf 1/1 Running 0 9s app=nginx,pod-template-hash=c48b5bb67

nginx-c48b5bb67-x469l 1/1 Running 0 61s app=nginx,pod-template-hash=c48b5bb67

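#################################### Options ########################################

# --record: save the command in the kubernetes.io/change-cause annotation, shown as CHANGE-CAUSE below (deprecated in recent kubectl releases; kubectl annotate deployment nginx kubernetes.io/change-cause="..." records the same information)

Show the rollout history: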

[root@k-master ~]# kubectl rollout history deployment nginx

deployment.apps/nginx

REVISION CHANGE-CAUSE

2 <none>

3 <none>

4 kubectl set image deploy nginx nginx=nginx:1.23.0 --record=true

9.10. Rollback

[root@k-master ~]# kubectl rollout history deployment nginx

deployment.apps/nginx

REVISION CHANGE-CAUSE

3 <none>

4 kubectl set image deploy nginx nginx=nginx:1.23.0 --record=true

5 kubectl set image deploy nginx nginx=nginx:1.24.0 --record=true

[root@k-master ~]# kubectl rollout undo deployment nginx

deployment.apps/nginx rolled back

[root@k-master ~]# kubectl rollout history deployment nginx

deployment.apps/nginx

REVISION CHANGE-CAUSE

3 <none>

5 kubectl set image deploy nginx nginx=nginx:1.24.0 --record=true

6 kubectl set image deploy nginx nginx=nginx:1.23.0 --record=true

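#################################### Options ########################################

# --to-revision: roll back to the given revision number instead of the previous one

Note that rollout undo redeploys the old revision under a new number: revision 4 disappears from the history and reappears as revision 6.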
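To use --to-revision you need the revision number of the image you want back. When change causes were recorded, it can be looked up in the history output; the awk-based revision_for_image below is a hypothetical helper that prints the newest revision whose CHANGE-CAUSE sets the given image.

```shell
#!/bin/bash
# Hypothetical helper: read `kubectl rollout history` output on stdin and print the
# newest revision whose CHANGE-CAUSE contains "=<image>". Only works when the
# change cause was recorded (--record or the kubernetes.io/change-cause annotation).
revision_for_image() {
  awk -v img="$1" '
    $1 ~ /^[0-9]+$/ && index($0 " ", "=" img " ") { rev = $1 }
    END { if (rev != "") print rev; else exit 1 }'
}

# Usage sketch:
#   rev=$(kubectl rollout history deployment nginx | revision_for_image nginx:1.23.0) &&
#     kubectl rollout undo deployment nginx --to-revision="$rev"
```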

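9.11. Taints

# mark the node unschedulable; Kubernetes records this as the taint
# node.kubernetes.io/unschedulable:NoSchedule, so it acts like
# kubectl taint node nodeName taintKey=taintValue:NoSchedule (running Pods stay)
kubectl cordon nodeName
# make the node schedulable again (reverts cordon)
kubectl uncordon nodeName
# cordon the node and evict its Pods; the effect resembles a NoExecute taint,
# but drain uses the Eviction API and respects PodDisruptionBudgets.
# --ignore-daemonsets is needed when DaemonSet Pods (calico-node, kube-proxy) run there
kubectl drain nodeName --ignore-daemonsets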

10. Install the Dashboard

10.1. Apply the manifest

[root@k-master ~]# kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.5.1/aio/deploy/recommended.yaml

namespace/kubernetes-dashboard created

serviceaccount/kubernetes-dashboard created

service/kubernetes-dashboard created

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-csrf created

secret/kubernetes-dashboard-key-holder created

configmap/kubernetes-dashboard-settings created

role.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

service/dashboard-metrics-scraper created

deployment.apps/dashboard-metrics-scraper created

[root@k-master ~]# kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-577f77cb5c-5jwch 1/1 Running 0 17m

kube-system calico-node-9wphc 1/1 Running 0 17m

kube-system calico-node-gkjpt 0/1 Running 0 17m

kube-system calico-node-qv47h 1/1 Running 0 17m

kube-system calico-node-rvcrh 1/1 Running 0 17m

kube-system coredns-7f89b7bc75-bbkfz 1/1 Running 0 28m

kube-system coredns-7f89b7bc75-m46t5 1/1 Running 0 28m

kube-system etcd-k-master 1/1 Running 0 28m

kube-system kube-apiserver-k-master 1/1 Running 0 28m

kube-system kube-controller-manager-k-master 1/1 Running 0 28m

kube-system kube-proxy-87hgc 1/1 Running 0 26m

kube-system kube-proxy-ksk4m 1/1 Running 0 28m

kube-system kube-proxy-nkmsl 1/1 Running 0 26m

kube-system kube-proxy-w4qdj 1/1 Running 0 26m

kube-system kube-scheduler-k-master 1/1 Running 0 28m

kubernetes-dashboard dashboard-metrics-scraper-79c5968bdc-mdhxx 1/1 Running 0 55s

kubernetes-dashboard kubernetes-dashboard-658485d5c7-k2ds5 1/1 Running 0 55s

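Expose the Dashboard on a node port:

kubectl edit svc kubernetes-dashboard -n kubernetes-dashboard

Change "type: ClusterIP" to "type: NodePort", or make the same change non-interactively:

kubectl -n kubernetes-dashboard patch svc kubernetes-dashboard -p '{"spec":{"type":"NodePort"}}'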

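10.2. Create an access user

Create a ServiceAccount bound to the cluster-admin role (full access to the cluster), for example by saving the manifest below as dashboard-admin.yaml and running kubectl apply -f dashboard-admin.yaml:

apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kubernetes-dashboard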

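10.3. Get the login token

On Kubernetes 1.23 each ServiceAccount still gets a token Secret automatically; this shows only the one belonging to admin-user:

kubectl -n kubernetes-dashboard describe secret $(kubectl -n kubernetes-dashboard get sa admin-user -o jsonpath='{.secrets[0].name}')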
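The describe output contains the CA certificate size, the namespace and the token, but only the token is needed for the login page. A hypothetical helper, token_from_describe, prints just the value of the "token:" line so it can be copied or piped.

```shell
#!/bin/bash
# Hypothetical helper: print the token from `kubectl describe secret` output (stdin).
# The token sits on a line of the form "token:      eyJhbGciOi...".
token_from_describe() {
  awk '$1 == "token:" { print $2; found = 1; exit } END { if (!found) exit 1 }'
}

# Usage sketch:
#   kubectl -n kubernetes-dashboard describe secret \
#     "$(kubectl -n kubernetes-dashboard get sa admin-user -o jsonpath='{.secrets[0].name}')" \
#     | token_from_describe
```

From Kubernetes 1.24 on, token Secrets are no longer created automatically; there, kubectl -n kubernetes-dashboard create token admin-user issues a short-lived token instead.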

10.4. Use the Dashboard

Find the Dashboard's node port:

[root@k-master ~]# kubectl get svc -A |grep kubernetes-dashboard

kubernetes-dashboard dashboard-metrics-scraper ClusterIP 10.96.215.168 <none> 8000/TCP 3m34s

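kubernetes-dashboard kubernetes-dashboard NodePort 10.96.102.122 <none> 443:32121/TCP 3m35s

Then open https://<node-IP>:32121 in a browser (the Dashboard serves a self-signed certificate, so the browser shows a warning) and sign in with the token from 10.3.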

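11. Pod

11.0. Common commands

# print a resource as YAML
kubectl get xxx -oyaml
# explain a field (for example kubectl explain pod.spec.containers)
kubectl explain xxx.xxx

In IntelliJ IDEA you can install the Kubernetes plugin via File -> Settings -> Plugins -> Marketplace.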

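11.1. Annotated Pod manifest

The manifest below annotates the common Pod fields. A hook or probe may use only one handler (exec or httpGet), so the httpGet alternatives are commented out.

# API version; list them with kubectl api-resources
apiVersion: v1
# resource type, here a Pod
kind: Pod
# metadata
metadata:
  # Pod name
  name: multi-pods
  # Pod labels
  labels:
    # label (key: value)
    app: multi-pods
# desired state
spec:
  # Pod hostname; together with subdomain it resolves as hostname.subdomain.namespace.svc.cluster.local
  hostname: busybox-1
  # must match the name of a headless Service in the same namespace
  subdomain: default-subdomain
  # credentials for pulling private images (a Pod-level field; aliyun-docker is created in 11.2)
  imagePullSecrets:
    - name: aliyun-docker
  # volumes shared by the containers
  volumes:
    # volume name
    - name: nginx-data
      # volume type: an empty directory that lives as long as the Pod
      emptyDir: {}
  # containers
  containers:
    # container name
    - name: nginx-pod
      # container image
      image: nginx:1.22.0
      # image pull policy
      imagePullPolicy: IfNotPresent
      # environment variables (a list of name/value pairs)
      env:
        - name: hello
          value: "world"
      # volume mounts
      volumeMounts:
        # mount path inside the container
        - mountPath: /usr/share/nginx/html
          # name of the volume to mount
          name: nginx-data
      # lifecycle hooks
      lifecycle:
        # runs right after the container starts
        postStart:
          exec:
            command: ["/bin/sh", "-c", "echo helloworld"]
          # alternative handler (use instead of exec):
          # httpGet:
          #   scheme: HTTP
          #   port: 80
          #   path: /abc.html
        # runs before the container is stopped
        preStop:
          exec:
            command: ["/bin/sh", "-c", "echo helloworld"]
      # resources
      resources:
        # reserved for scheduling: the node must have this much unreserved capacity
        requests:
          memory: "200Mi"
          cpu: "700m"
        # hard caps: exceeding the memory limit gets the container OOM-killed, CPU is throttled
        limits:
          memory: "200Mi"
          cpu: "700m"
      # startup probe: liveness and readiness probes wait until it succeeds
      startupProbe:
        exec:
          command: ["/bin/sh", "-c", "echo helloworld"]
      # liveness probe: the container is restarted when it keeps failing
      livenessProbe:
        exec:
          command: ["/bin/sh", "-c", "echo helloworld"]
        # alternative handler; host defaults to the Pod IP (127.0.0.1 would be the node itself)
        # httpGet:
        #   scheme: HTTP
        #   port: 80
        #   path: /abc.html
        # delay before the first probe (s)
        initialDelaySeconds: 2
        # interval between probes (s)
        periodSeconds: 5
        # probe timeout (s)
        timeoutSeconds: 5
        # consecutive successes to count as healthy; must be 1 for liveness and startup probes
        successThreshold: 1
        # consecutive failures before the container is restarted
        failureThreshold: 5
      # readiness probe: the Pod receives Service traffic only while it succeeds
      readinessProbe:
        httpGet:
          port: 80
          path: /
    # second container
    - name: alpine-pod
      image: alpine
      volumeMounts:
        - mountPath: /app
          name: nginx-data
      # start command: write the date into the shared volume every second
      command: ["/bin/sh", "-c", "while true; do sleep 1; date > /app/index.html; done;"]
  # restart policy (applies to all containers in the Pod)
  restartPolicy: Always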
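The resources block also decides the Pod's QoS class, the "QoS Class" line in kubectl describe (vue-pod in 11.2 shows BestEffort because it sets no resources). The rules: BestEffort when no container sets any request or limit; Guaranteed when every container has CPU and memory limits equal to its requests (a missing request defaults to the limit); Burstable otherwise. The manifest above is therefore Burstable, since alpine-pod sets no resources. The helper qos_class is a hypothetical sketch of these rules that compares quantities as plain strings.

```shell
#!/bin/bash
# Hypothetical helper: approximate the Pod QoS class. Each argument describes one
# container as "cpuRequest,cpuLimit,memoryRequest,memoryLimit"; leave unset fields
# empty. Values are compared as strings, so write them in the same units (e.g. 700m).
qos_class() {
  local c cpu_req cpu_lim mem_req mem_lim any=0 guaranteed=1
  for c in "$@"; do
    IFS=, read -r cpu_req cpu_lim mem_req mem_lim <<< "$c"
    if [ -n "$cpu_req$cpu_lim$mem_req$mem_lim" ]; then any=1; fi
    # a missing request defaults to the limit when the limit is set
    cpu_req="${cpu_req:-$cpu_lim}"
    mem_req="${mem_req:-$mem_lim}"
    if [ -z "$cpu_lim" ] || [ -z "$mem_lim" ] ||
       [ "$cpu_req" != "$cpu_lim" ] || [ "$mem_req" != "$mem_lim" ]; then
      guaranteed=0
    fi
  done
  if [ "$any" -eq 0 ]; then echo BestEffort
  elif [ "$guaranteed" -eq 1 ]; then echo Guaranteed
  else echo Burstable
  fi
}

# Usage: the manifest above (nginx-pod with equal requests/limits, alpine-pod with none)
#   qos_class '700m,700m,200Mi,200Mi' ',,,'    # prints Burstable
```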

11.2. Image pull secret

Secrets are namespaced: a secret is only available to Pods in the namespace where it was created.

kubectl create secret docker-registry aliyun-docker \
  --docker-server=registry.cn-hangzhou.aliyuncs.com \
  --docker-username=ialso \
  --docker-password='your-password' \
  --docker-email=2750955630@qq.com

[root@k-master ~]# kubectl create secret docker-registry aliyun-docker \

> --docker-server=registry.cn-hangzhou.aliyuncs.com \

> --docker-username=ialso \

> --docker-password=******** \

> --docker-email=2750955630@qq.com

secret/aliyun-docker created

[root@k-master ~]# kubectl get secrets

NAME TYPE DATA AGE

aliyun-docker kubernetes.io/dockerconfigjson 1 4s

default-token-chtr2 kubernetes.io/service-account-token 3 3d20h
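The secret has type kubernetes.io/dockerconfigjson: its .dockerconfigjson key holds a Docker config.json-style document whose auth field is base64("username:password"). The hypothetical function dockerconfigjson below builds a document of roughly that shape, which helps when inspecting such a secret; it does not escape JSON special characters. It also shows why the secret must be handled like a password: the credentials are only base64-encoded, not encrypted.

```shell
#!/bin/bash
# Hypothetical helper: build a dockerconfigjson document for one registry.
# Assumes the arguments contain no characters that need JSON escaping.
dockerconfigjson() {
  local server="$1" user="$2" pass="$3" email="$4" auth
  # auth is base64("user:pass"); strip the line wrapping base64 may add
  auth=$(printf '%s:%s' "$user" "$pass" | base64 | tr -d '\n')
  printf '{"auths":{"%s":{"username":"%s","password":"%s","email":"%s","auth":"%s"}}}\n' \
    "$server" "$user" "$pass" "$email" "$auth"
}

# Usage sketch: decode the stored document of an existing secret for comparison
#   kubectl get secret aliyun-docker -o jsonpath='{.data.\.dockerconfigjson}' | base64 -d
```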

Test the secret

vim vue-pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: vue-pod
  labels:
    app: vue
spec:
  containers:
    - name: vue-demo
      image: registry.cn-hangzhou.aliyuncs.com/ialso/vue-demo
      imagePullPolicy: IfNotPresent
  restartPolicy: Always
  # Pod-level field that references the secret created above
  imagePullSecrets:
    - name: aliyun-docker

[root@k-master ~]# kubectl apply -f vue-pod.yaml

pod/vue-pod created

[root@k-master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-5c8bc489f8-fs684 1/1 Running 0 33m

nginx-5c8bc489f8-t7vbh 1/1 Running 0 33m

nginx-5c8bc489f8-wm8pp 1/1 Running 0 34m

vue-pod 1/1 Running 0 8s

[root@k-master ~]# kubectl describe pod vue-pod

Name: vue-pod

Namespace: default

Priority: 0

Node: k-cluster2/10.0.4.12

Start Time: Sat, 22 Apr 2023 00:07:15 +0800

Labels: app=vue

Annotations: cni.projectcalico.org/containerID: c665663968023e7fc6c30b29d6add8393865452035d4e1e70f5641a03f7d1cb9

cni.projectcalico.org/podIP: 192.168.2.217/32

cni.projectcalico.org/podIPs: 192.168.2.217/32

Status: Running

IP: 192.168.2.217

IPs:

IP: 192.168.2.217

Containers:

vue-demo:

Container ID: docker://ed88adae5082fef2104251f05e43a785a47dde99ba73f99b0bdc64cbf135a8db

Image: registry.cn-hangzhou.aliyuncs.com/ialso/vue-demo

Image ID: docker-pullable://registry.cn-hangzhou.aliyuncs.com/ialso/vue-demo@sha256:7747ada548c0ae3efcaa167c9ef1337414d1005232fb41c649b121fe8524f264

Port: <none>

Host Port: <none>

State: Running

Started: Sat, 22 Apr 2023 00:07:17 +0800

Ready: True

Restart Count: 0

Environment: <none>

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from default-token-chtr2 (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

default-token-chtr2:

Type: Secret (a volume populated by a Secret)

SecretName: default-token-chtr2

Optional: false

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 30s default-scheduler Successfully assigned default/vue-pod to k-cluster2

Normal Pulling 29s kubelet Pulling image "registry.cn-hangzhou.aliyuncs.com/ialso/vue-demo"

Normal Pulled 28s kubelet Successfully pulled image "registry.cn-hangzhou.aliyuncs.com/ialso/vue-demo" in 904.180724ms

Normal Created 28s kubelet Created container vue-demo

Normal Started 28s kubelet Started container vue-demo

11.3. Environment variables

vim mysql-pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: mysql-pod
  labels:
    app: mysql-pod
spec:
  containers:
    - name: mysql-pod
      image: mysql:5.7
      imagePullPolicy: IfNotPresent
      env:
        # plain-text value for this demo; real deployments usually use valueFrom.secretKeyRef
        - name: MYSQL_ROOT_PASSWORD
          value: "root"
  restartPolicy: Always

[root@k-master ~]# kubectl apply -f mysql-pod.yaml
pod/mysql-pod created
[root@k-master ~]# kubectl get pods
NAME                     READY   STATUS    RESTARTS   AGE
mysql-pod                1/1     Running   0          45s
nginx-5c8bc489f8-fs684   1/1     Running   0          49m
nginx-5c8bc489f8-t7vbh   1/1     Running   0          49m
nginx-5c8bc489f8-wm8pp   1/1     Running   0          49m
vue-pod                  1/1     Running   0          15m
[root@k-master ~]# kubectl describe pod mysql-pod
Name:         mysql-pod
Namespace:    default
Priority:     0
Node:         k-cluster2/10.0.4.12
Start Time:   Sat, 22 Apr 2023 00:22:16 +0800
Labels:       app=mysql-pod
Annotations:  cni.projectcalico.org/containerID: 361923ab5ad4dd7f40021cf69c4e36d963e346081f6339adc4080d76166da09d
              cni.projectcalico.org/podIP: 192.168.2.218/32
              cni.projectcalico.org/podIPs: 192.168.2.218/32
Status:       Running
IP:           192.168.2.218
IPs:
  IP:  192.168.2.218
Containers:
  mysql-pod:
    Container ID:   docker://a3d39d09d02ce0e71680d7947d70e929c0de985b3f858832b9211cca6b8506cd
    Image:          mysql:5.7
    Image ID:       docker-pullable://mysql@sha256:f2ad209efe9c67104167fc609cca6973c8422939491c9345270175a300419f94
    Port:           <none>
    Host Port:      <none>
    State:          Running
      Started:      Sat, 22 Apr 2023 00:22:50 +0800
    Ready:          True
    Restart Count:  0
    Environment:
      MYSQL_ROOT_PASSWORD:  root
    Mounts:
      /var/run/secrets/kubernetes.io/serviceaccount from default-token-chtr2 (ro)
Conditions:
  Type              Status
  Initialized       True
  Ready             True
  ContainersReady   True
  PodScheduled      True
Volumes:
  default-token-chtr2:
    Type:        Secret (a volume populated by a Secret)
    SecretName:  default-token-chtr2
    Optional:    false
QoS Class:       BestEffort
Node-Selectors:  <none>
Tolerations:     node.kubernetes.io/not-ready:NoExecute op=Exists for 300s
                 node.kubernetes.io/unreachable:NoExecute op=Exists for 300s
Events:
  Type    Reason     Age   From               Message
  ----    ------     ----  ----               -------
  Normal  Scheduled  39s   default-scheduler  Successfully assigned default/mysql-pod to k-cluster2
  Normal  Pulling    38s   kubelet            Pulling image "mysql:5.7"
  Normal  Pulled     6s    kubelet            Successfully pulled image "mysql:5.7" in 32.202053959s
  Normal  Created    5s    kubelet            Created container mysql-pod
  Normal  Started    5s    kubelet            Started container mysql-pod
[root@k-master ~]# kubectl exec -it mysql-pod -- bash
root@mysql-pod:/# mysql -uroot -proot
mysql: [Warning] Using a password on the command line interface can be insecure.
Welcome to the MySQL monitor.  Commands end with ; or \g.
Your MySQL connection id is 2
Server version: 5.7.36 MySQL Community Server (GPL)

Copyright (c) 2000, 2021, Oracle and/or its affiliates.

Oracle is a registered trademark of Oracle Corporation and/or its
affiliates. Other names may be trademarks of their respective
owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> exit
Bye
root@mysql-pod:/# exit
exit
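In the Pod above the root password sits in plain text, both in the manifest and in the `kubectl describe` output. Below is a minimal sketch of the same Pod reading the value from a Secret through `env[].valueFrom` instead. It assumes a hypothetical Secret named `mysql-secret` with a key `root-password`, and a hypothetical Pod name `mysql-pod-secret` chosen to avoid clashing with `mysql-pod`. The second variable uses the Downward API (`fieldRef`), which the ingress-nginx manifest later in these notes also uses.

```yaml
# Hypothetical Secret holding the root password (stringData is base64-encoded by the API server)
apiVersion: v1
kind: Secret
metadata:
  name: mysql-secret
type: Opaque
stringData:
  root-password: root
---
apiVersion: v1
kind: Pod
metadata:
  name: mysql-pod-secret
  labels:
    app: mysql-pod-secret
spec:
  containers:
  - name: mysql
    image: mysql:5.7
    imagePullPolicy: IfNotPresent
    env:
    # value taken from the Secret instead of being written into the Pod spec
    - name: MYSQL_ROOT_PASSWORD
      valueFrom:
        secretKeyRef:
          name: mysql-secret
          key: root-password
    # Downward API: exposes the Pod's own name as an environment variable
    - name: POD_NAME
      valueFrom:
        fieldRef:
          fieldPath: metadata.name
  restartPolicy: Always
```

With this version, `kubectl describe pod mysql-pod-secret` refers to the Secret key rather than printing the password.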

11.4、Container Startup Command

vim nginx-pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: nginx-pod
  labels:
    app: nginx-pod
spec:
  containers:
  - name: nginx-pod
    image: nginx:1.22.0
    imagePullPolicy: IfNotPresent
    env:
    - name: message
      value: "hello world"
    # command overrides the image ENTRYPOINT
    command:
    - /bin/bash
    - -c
    # $(message) is expanded by Kubernetes from env before bash runs
    - "echo $(message);sleep 1000"
  restartPolicy: Always

[root@k-master ~]# kubectl apply -f nginx-pod.yaml
pod/nginx-pod created
[root@k-master ~]# kubectl get pods
NAME                     READY   STATUS    RESTARTS   AGE
mysql-pod                1/1     Running   0          15m
nginx-pod                1/1     Running   0          65s
nginx-5c8bc489f8-fs684   1/1     Running   0          64m
nginx-5c8bc489f8-t7vbh   1/1     Running   0          64m
nginx-5c8bc489f8-wm8pp   1/1     Running   0          64m
vue-pod                  1/1     Running   0          30m
[root@k-master ~]# kubectl logs nginx-pod
hello world

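11.5、Lifecycle (Container)

vim nginx-pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: nginx-pod
  labels:
    app: nginx-pod
spec:
  containers:
  - name: nginx-pod
    image: nginx:1.22.0
    imagePullPolicy: IfNotPresent
    lifecycle:
      # runs right after the container is created (not guaranteed to run before the entrypoint)
      # output of hook commands does not appear in kubectl logs
      postStart:
        exec:
          command:
          - "/bin/bash"
          - "-c"
          - "echo hello postStart"
      # runs before the container is stopped
      preStop:
        exec:
          command: ["/bin/bash", "-c", "echo hello preStop"]
  restartPolicy: Always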

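11.6、Lifecycle (Pod)

initContainers: init containers run one after another before the app containers start, and each one must exit successfully before the next one runs.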
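The heading above only names `initContainers`. Below is a minimal sketch, assuming a hypothetical Pod `init-demo`. An init container writes `index.html` into a shared `emptyDir`, and nginx starts only after that container has exited successfully.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: init-demo
  labels:
    app: init-demo
spec:
  volumes:
  # shared between the init container and the app container
  - name: html
    emptyDir: {}
  initContainers:
  # must exit 0 before any container under "containers" is started
  - name: init-html
    image: alpine
    command: ["/bin/sh", "-c", "echo initialized by init container > /work/index.html"]
    volumeMounts:
    - mountPath: /work
      name: html
  containers:
  - name: nginx
    image: nginx:1.22.0
    imagePullPolicy: IfNotPresent
    volumeMounts:
    - mountPath: /usr/share/nginx/html
      name: html
  restartPolicy: Always
```

While the init container runs, `kubectl get pods` shows the Pod as `Init:0/1`. Once it is Running, `curl <pod-ip>` should return the line written by the init container.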

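11.7、Probes (Container)

probe: the kubelet periodically checks each container. A failing livenessProbe restarts the container, a failing readinessProbe removes the Pod from Service endpoints, and a startupProbe holds off the other two probes until the application has started.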
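Below is a sketch of the three probe types on the nginx image used throughout these notes, assuming a hypothetical Pod named `probe-demo`. The thresholds are illustrative, not tuned values.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: probe-demo
  labels:
    app: probe-demo
spec:
  containers:
  - name: nginx
    image: nginx:1.22.0
    imagePullPolicy: IfNotPresent
    ports:
    - containerPort: 80
    # other probes are disabled until this succeeds (up to 30 x 2s = 60s here)
    startupProbe:
      httpGet:
        path: /
        port: 80
      failureThreshold: 30
      periodSeconds: 2
    # after 3 consecutive failures the kubelet restarts the container
    livenessProbe:
      tcpSocket:
        port: 80
      initialDelaySeconds: 5
      periodSeconds: 10
      failureThreshold: 3
    # while failing, the Pod is not READY and receives no Service traffic
    readinessProbe:
      httpGet:
        path: /
        port: 80
      periodSeconds: 5
  restartPolicy: Always
```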

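11.8、Resource Limits

apiVersion: v1
kind: Pod
metadata:
  name: nginx-pod
  labels:
    app: nginx-pod
spec:
  containers:
  - name: nginx-pod
    image: nginx:1.22.0
    imagePullPolicy: IfNotPresent
    resources:
      # requests: what the scheduler reserves; the Pod only lands on a node with this much unreserved capacity
      requests:
        memory: "200Mi"
        cpu: "700m"
      # limits: hard caps; CPU above the limit is throttled, memory above it gets the container OOM-killed
      limits:
        memory: "500Mi"
        cpu: "1000m"
  restartPolicy: Always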

11.9、Multiple Containers

apiVersion: v1
kind: Pod
metadata:
  name: multi-pods
  labels:
    app: multi-pods
spec:
  volumes:
  # emptyDir shared by both containers for the lifetime of the Pod
  - name: nginx-data
    emptyDir: {}
  containers:
  - name: nginx-pod
    image: nginx:1.22.0
    volumeMounts:
    - mountPath: /usr/share/nginx/html
      name: nginx-data
  - name: alpine-pod
    image: alpine
    volumeMounts:
    - mountPath: /app
      name: nginx-data
    # rewrites index.html with the current date every second; nginx serves it
    command: ["/bin/sh", "-c", "while true; do sleep 1; date > /app/index.html; done;"]
  restartPolicy: Always

[root@k-master ~]# kubectl apply -f multi-pod.yaml
pod/multi-pods created
[root@k-master ~]# kubectl get pods -o wide
NAME                     READY   STATUS    RESTARTS   AGE     IP              NODE         NOMINATED NODE   READINESS GATES
multi-pods               2/2     Running   0          44s     192.168.2.225   k-cluster2   <none>           <none>
mysql-pod                1/1     Running   0          13h     192.168.2.218   k-cluster2   <none>           <none>
nginx-5c8bc489f8-fs684   1/1     Running   0          14h     192.168.2.216   k-cluster2   <none>           <none>
nginx-5c8bc489f8-t7vbh   1/1     Running   0          14h     192.168.2.215   k-cluster2   <none>           <none>
nginx-5c8bc489f8-wm8pp   1/1     Running   0          14h     192.168.2.214   k-cluster2   <none>           <none>
nginx-pod                1/1     Running   0          4h15m   192.168.2.223   k-cluster2   <none>           <none>
[root@k-master ~]# curl 192.168.2.225
Sat Apr 22 06:20:10 UTC 2023
[root@k-master ~]# curl 192.168.2.225
Sat Apr 22 06:20:11 UTC 2023
[root@k-master ~]# curl 192.168.2.225
Sat Apr 22 06:20:12 UTC 2023

In a multi-container Pod, use -c to choose which container to exec into:

[root@k-master ~]# kubectl exec -it multi-pods -c alpine-pod -- sh
/ # cat /app/index.html
Sat Apr 22 06:41:45 UTC 2023
/ # exit

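11.10、Static Pods

The kubelet automatically starts, on its own node, every Pod manifest placed under /etc/kubernetes/manifests. Deleting such a Pod with kubectl only removes its mirror Pod, and the kubelet recreates it right away. To remove a static Pod, delete its manifest file.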
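Below is a minimal static Pod manifest. It assumes kubeadm's default `staticPodPath` (`/etc/kubernetes/manifests`), and the file name `static-nginx.yaml` is hypothetical. Copy the file into that directory on a node and the kubelet starts it without any `kubectl apply`.

```yaml
# /etc/kubernetes/manifests/static-nginx.yaml (hypothetical file name)
apiVersion: v1
kind: Pod
metadata:
  name: static-nginx
  labels:
    app: static-nginx
spec:
  containers:
  - name: nginx
    image: nginx:1.22.0
    imagePullPolicy: IfNotPresent
```

`kubectl get pods` lists the mirror Pod with the node name appended, for example `static-nginx-k-cluster2` if the file was placed on k-cluster2.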

12、Deployment

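12.1、Deployment File Explained

# API version; look it up with kubectl api-resources
apiVersion: apps/v1
# Resource type, here Deployment
kind: Deployment
# Metadata
metadata:
  # Deployment name
  name: deployment1
  # Deployment labels
  labels:
    # label (key: value)
    app: deployment1
# Desired state
spec:
  # Whether rollouts are paused
  # pause with kubectl rollout pause deployment deployment1, resume with kubectl rollout resume deployment deployment1
  paused: false
  # Number of replicas
  replicas: 2
  # Number of old revisions (ReplicaSets) kept for rollback
  revisionHistoryLimit: 10
  # Update strategy
  strategy:
    # Recreate (stop all old Pods, then start new ones) or RollingUpdate (rolling update)
    type: RollingUpdate
    rollingUpdate:
      # Maximum number of Pods created above the desired replica count during an update (number or percentage)
      maxSurge: 1
      # Maximum number of Pods that may be unavailable during an update (number or percentage)
      maxUnavailable: 1
  # Pod template of the Deployment
  template:
    # Metadata
    metadata:
      # Pod name (ignored here: Pod names are generated from the ReplicaSet name)
      name: deployment1
      # Pod labels; they must satisfy the selector below
      labels:
        # label (key: value)
        app: deployment1
    # Desired state
    spec:
      # Containers
      containers:
      # Container name
      - name: deployment1
        # Container image
        image: nginx:alpine
        imagePullPolicy: IfNotPresent
      restartPolicy: Always
  # Selector (immutable after creation in apps/v1)
  selector:
    # Simple (equality-based) selector
    matchLabels:
      app: deployment1
    # Set-based selector; its terms are ANDed with matchLabels, so the template labels must satisfy it as well.
    # Commented out because the template above has no podName label (the Deployment would be rejected);
    # to enable it, add a label such as podName: aaa to the template.
    # matchExpressions:
    # - key: podName
    #   operator: In
    #   values: [aaa, bbb]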

[root@k-master ~]# kubectl apply -f deployment1.yaml
deployment.apps/deployment1 created
[root@k-master ~]# kubectl get all
NAME                               READY   STATUS    RESTARTS   AGE
pod/deployment1-58484bd895-pgtvr   1/1     Running   0          64s
pod/deployment1-58484bd895-s4q8h   1/1     Running   0          64s

NAME                 TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
service/kubernetes   ClusterIP   10.96.0.1    <none>        443/TCP   4d16h

NAME                          READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/deployment1   2/2     2            2           64s

NAME                                     DESIRED   CURRENT   READY   AGE
replicaset.apps/deployment1-58484bd895   2         2         2       64s

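12.2、Autoscaling

The HorizontalPodAutoscaler needs resource metrics, so install metrics-server first.

Download the YAML file. The high-availability variant runs two replicas with required Pod anti-affinity, so it needs at least two schedulable nodes.

wget https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/high-availability-1.21+.yaml

Modify the YAML file (the two changed lines are marked with comments):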

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
    rbac.authorization.k8s.io/aggregate-to-admin: "true"
    rbac.authorization.k8s.io/aggregate-to-edit: "true"
    rbac.authorization.k8s.io/aggregate-to-view: "true"
  name: system:aggregated-metrics-reader
rules:
- apiGroups:
  - metrics.k8s.io
  resources:
  - pods
  - nodes
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
rules:
- apiGroups:
  - ""
  resources:
  - nodes/metrics
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - pods
  - nodes
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server-auth-reader
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: extension-apiserver-authentication-reader
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server:system:auth-delegator
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:auth-delegator
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:metrics-server
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: v1
kind: Service
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  ports:
  - name: https
    port: 443
    protocol: TCP
    targetPort: https
  selector:
    k8s-app: metrics-server
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  replicas: 2
  selector:
    matchLabels:
      k8s-app: metrics-server
  strategy:
    rollingUpdate:
      maxUnavailable: 1
  template:
    metadata:
      labels:
        k8s-app: metrics-server
    spec:
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchLabels:
                k8s-app: metrics-server
            namespaces:
            - kube-system
            topologyKey: kubernetes.io/hostname
      containers:
      - args:
        - --cert-dir=/tmp
        - --secure-port=4443
        - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
        - --kubelet-use-node-status-port
        - --metric-resolution=15s
        - --kubelet-insecure-tls # added: do not verify the kubelets' serving certificates
        image: registry.aliyuncs.com/google_containers/metrics-server:v0.6.3 # changed: image pulled from a mirror registry
        imagePullPolicy: IfNotPresent
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /livez
            port: https
            scheme: HTTPS
          periodSeconds: 10
        name: metrics-server
        ports:
        - containerPort: 4443
          name: https
          protocol: TCP
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /readyz
            port: https
            scheme: HTTPS
          initialDelaySeconds: 20
          periodSeconds: 10
        resources:
          requests:
            cpu: 100m
            memory: 200Mi
        securityContext:
          allowPrivilegeEscalation: false
          readOnlyRootFilesystem: true
          runAsNonRoot: true
          runAsUser: 1000
        volumeMounts:
        - mountPath: /tmp
          name: tmp-dir
      nodeSelector:
        kubernetes.io/os: linux
      priorityClassName: system-cluster-critical
      serviceAccountName: metrics-server
      volumes:
      - emptyDir: {}
        name: tmp-dir
---
apiVersion: policy/v1
kind: PodDisruptionBudget
metadata:
  name: metrics-server
  namespace: kube-system
spec:
  minAvailable: 1
  selector:
    matchLabels:
      k8s-app: metrics-server
---
apiVersion: apiregistration.k8s.io/v1
kind: APIService
metadata:
  labels:
    k8s-app: metrics-server
  name: v1beta1.metrics.k8s.io
spec:
  group: metrics.k8s.io
  groupPriorityMinimum: 100
  insecureSkipTLSVerify: true
  service:
    name: metrics-server
    namespace: kube-system
  version: v1beta1
  versionPriority: 100

Apply the manifest:

[root@VM-4-6-centos ~]# kubectl apply -f high-availability-1.21+.yaml
serviceaccount/metrics-server created
clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created
clusterrole.rbac.authorization.k8s.io/system:metrics-server created
rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created
clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created
clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created
service/metrics-server created
deployment.apps/metrics-server created
poddisruptionbudget.policy/metrics-server created
apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created

# Node resource usage
[root@VM-4-6-centos ~]# kubectl top nodes
W0422 22:15:12.979484 30366 top_node.go:119] Using json format to get metrics. Next release will switch to protocol-buffers, switch early by passing --use-protocol-buffers flag
NAME         CPU(cores)   CPU%   MEMORY(bytes)   MEMORY%
k-cluster1   115m         5%     1038Mi          54%
k-cluster2   121m         1%     1514Mi          9%
k-master     286m         14%    1296Mi          68%

# Pod resource usage
[root@VM-4-6-centos ~]# kubectl top pods -n kube-system
W0422 22:15:32.246417 30695 top_pod.go:140] Using json format to get metrics. Next release will switch to protocol-buffers, switch early by passing --use-protocol-buffers flag
NAME                                       CPU(cores)   MEMORY(bytes)
calico-kube-controllers-594649bd75-sldhx   2m           23Mi
calico-node-652kq                          30m          85Mi
calico-node-874nz                          28m          66Mi
calico-node-slsx5                          31m          131Mi
coredns-545d6fc579-w67ms                   2m           19Mi
coredns-545d6fc579-zlpv7                   3m           16Mi
etcd-k-master                              15m          46Mi
kube-apiserver-k-master                    114m         395Mi
kube-controller-manager-k-master           18m          88Mi
kube-proxy-2kkkx                           8m           26Mi
kube-proxy-75gq5                           9m           24Mi
kube-proxy-q4694                           4m           19Mi
kube-scheduler-k-master                    4m           37Mi
metrics-server-74b8bdc985-4g62c            2m           20Mi
metrics-server-74b8bdc985-h84gz            3m           17Mi

Autoscaling configuration (HorizontalPodAutoscaler):

apiVersion: autoscaling/v1
kind: HorizontalPodAutoscaler
metadata:
  name: hap1
spec:
  maxReplicas: 10
  minReplicas: 1
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: deployment1
  # target average CPU utilization, as a percentage of the Pods' CPU requests
  targetCPUUtilizationPercentage: 50

[root@VM-4-6-centos ~]# kubectl apply -f hap1.yaml
horizontalpodautoscaler.autoscaling/hap1 created
[root@VM-4-6-centos ~]# kubectl get HorizontalPodAutoscaler
NAME   REFERENCE                TARGETS         MINPODS   MAXPODS   REPLICAS   AGE
hap1   Deployment/deployment1   <unknown>/50%   1          10        2          27s
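TARGETS shows `<unknown>/50%`. A likely cause is that deployment1 (12.1) declares no CPU request, and the HPA computes utilization as a percentage of that request. Right after creation the column can also read `<unknown>` until metrics-server has reported. Below is a sketch that adds a request and expresses `hap1` with the `autoscaling/v2` API, which Kubernetes 1.23 serves. The `100m` request is an illustrative value, not one taken from these notes.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: deployment1
  labels:
    app: deployment1
spec:
  # replicas omitted on purpose: the HPA owns the replica count
  selector:
    matchLabels:
      app: deployment1
  template:
    metadata:
      labels:
        app: deployment1
    spec:
      containers:
      - name: deployment1
        image: nginx:alpine
        imagePullPolicy: IfNotPresent
        resources:
          requests:
            cpu: 100m   # utilization percentages are measured against this value
---
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: hap1
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: deployment1
  minReplicas: 1
  maxReplicas: 10
  metrics:
  - type: Resource
    resource:
      name: cpu
      target:
        type: Utilization
        averageUtilization: 50   # same target as targetCPUUtilizationPercentage: 50
```

Leaving `replicas` out of the Deployment avoids a later `kubectl apply` fighting the HPA over the replica count. Applying this file updates the existing deployment1 and hap1 objects in place.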

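12.3、Canary Deployment

A rolling update shifts Pods gradually, but you cannot control how long each stage of the gradual release lasts. Instead, you can run several Deployments behind the same Service and control the gradual release yourself; this is called a canary deployment.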
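Below is a minimal sketch of that setup. All names (`web`, `web-stable`, `web-canary`) and the two image versions are hypothetical. The Service selects only the shared label `app: web`, so it balances across the Pods of both Deployments, and the extra `track` label keeps the two Deployments' selectors apart.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: web
spec:
  selector:
    app: web          # matches stable and canary Pods alike
  ports:
  - port: 80
    targetPort: 80
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web-stable
spec:
  replicas: 3
  selector:
    matchLabels:
      app: web
      track: stable
  template:
    metadata:
      labels:
        app: web
        track: stable
    spec:
      containers:
      - name: nginx
        image: nginx:1.22.0   # current version
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web-canary
spec:
  replicas: 1             # 1 of 4 endpoints: roughly a quarter of new connections
  selector:
    matchLabels:
      app: web
      track: canary
  template:
    metadata:
      labels:
        app: web
        track: canary
    spec:
      containers:
      - name: nginx
        image: nginx:1.24.0   # candidate version
```

The traffic split follows the replica ratio only approximately, because kube-proxy picks endpoints per connection. Promote the canary by scaling `web-canary` up and `web-stable` down, at whatever pace you choose.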

12.4、DaemonSet

A DaemonSet runs one Pod replica on every node. The master is excluded, because its NoSchedule taint keeps ordinary Pods off it. DaemonSets are commonly used for monitoring, log collection, and similar agents.

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: daemonset1
  labels:
    app: daemonset1
spec:
  template:
    metadata:
      name: daemonset1
      labels:
        app: daemonset-nginx
    spec:
      containers:
      - name: nginx-pod
        image: nginx:alpine
        imagePullPolicy: IfNotPresent
      restartPolicy: Always
  selector:
    matchLabels:
      app: daemonset-nginx

[root@k-master ~]# kubectl apply -f daemonset1.yaml
daemonset.apps/daemonset1 created
[root@k-master ~]# kubectl get pods
NAME                           READY   STATUS    RESTARTS   AGE
daemonset1-chzp6               1/1     Running   0          34s
daemonset1-wkj7r               1/1     Running   0          34s
deployment1-58484bd895-qt9nd   1/1     Running   0          23h
deployment1-58484bd895-zlb4c   1/1     Running   0          23h
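Only two Pods appear above, one per worker node. To cover the master as well, the Pod template needs tolerations for the control-plane taint. Below is a sketch with a hypothetical DaemonSet `daemonset2`. It assumes kubeadm's taint keys: 1.23 uses `node-role.kubernetes.io/master`, and newer releases use `node-role.kubernetes.io/control-plane`, so both are listed. Check the actual taint with `kubectl describe node k-master`.

```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: daemonset2
  labels:
    app: daemonset2
spec:
  selector:
    matchLabels:
      app: daemonset2-nginx
  template:
    metadata:
      labels:
        app: daemonset2-nginx
    spec:
      tolerations:
      # taint key used by kubeadm on the control plane in 1.23
      - key: node-role.kubernetes.io/master
        operator: Exists
        effect: NoSchedule
      # taint key used by newer kubeadm releases
      - key: node-role.kubernetes.io/control-plane
        operator: Exists
        effect: NoSchedule
      containers:
      - name: nginx-pod
        image: nginx:alpine
        imagePullPolicy: IfNotPresent
```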

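12.5、StatefulSet

A Deployment runs stateless applications: the network identity, storage, and startup order of its Pods may change. It is typically used for business services.

A StatefulSet runs stateful applications: each Pod keeps a stable network identity, stable storage, and an ordered startup. It is typically used for storage systems and middleware.

apiVersion: v1
kind: Service
metadata:
  name: statefulset-serves
spec:
  selector:
    app: statefulset
  ports:
  - port: 80
    targetPort: 80
  type: ClusterIP
  # headless Service: gives each Pod a DNS name <pod-name>.statefulset-serves
  clusterIP: None
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: statefulset
spec:
  replicas: 3
  selector:
    matchLabels:
      app: statefulset
  # must name the headless Service above
  serviceName: statefulset-serves
  template:
    metadata:
      labels:
        app: statefulset
    spec:
      containers:
      - name: nginx
        image: nginx:1.24.0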

[root@k-master ~]# kubectl apply -f statefulset.yaml
service/statefulset-serves created
statefulset.apps/statefulset created
[root@k-master ~]# kubectl get pods
NAME                           READY   STATUS    RESTARTS   AGE
daemonset1-chzp6               1/1     Running   0          42m
daemonset1-wkj7r               1/1     Running   0          42m
deployment1-58484bd895-qt9nd   1/1     Running   0          23h
deployment1-58484bd895-zlb4c   1/1     Running   0          23h
statefulset-0                  1/1     Running   0          109s
statefulset-1                  1/1     Running   0          107s
statefulset-2                  1/1     Running   0          105s
[root@k-master ~]# curl statefulset-0.statefulset-serves
curl: (6) Could not resolve host: statefulset-0.statefulset-serves; Unknown error

The name is served by the cluster DNS (CoreDNS), which the node itself does not use by default, so it cannot be resolved on k-master. From a Pod in the same namespace it resolves; the fully qualified name is statefulset-0.statefulset-serves.default.svc.cluster.local:

[root@k-master ~]# kubectl exec -it daemonset1-chzp6 -- /bin/sh
/ # curl statefulset-0.statefulset-serves
<!DOCTYPE html>
<html>
<head>
<title>Welcome to nginx!</title>
<style>
html { color-scheme: light dark; }
body { width: 35em; margin: 0 auto;
font-family: Tahoma, Verdana, Arial, sans-serif; }
</style>
</head>
<body>
<h1>Welcome to nginx!</h1>
<p>If you see this page, the nginx web server is successfully installed and
working. Further configuration is required.</p>

<p>For online documentation and support please refer to
<a href="http://nginx.org/">nginx.org</a>.<br/>
Commercial support is available at
<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>
</body>
</html>
/ # exit
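The example above shows stable names and ordered startup, but not stable storage, because its Pods mount no volumes. Below is a sketch that adds `volumeClaimTemplates`. The names `statefulset-pvc` and `statefulset-pvc-serves` are hypothetical. It also assumes a StorageClass called `standard` that can provision volumes dynamically; replace it with one that exists in your cluster.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: statefulset-pvc-serves
spec:
  clusterIP: None          # headless
  selector:
    app: statefulset-pvc
  ports:
  - port: 80
    targetPort: 80
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: statefulset-pvc
spec:
  replicas: 3
  serviceName: statefulset-pvc-serves
  selector:
    matchLabels:
      app: statefulset-pvc
  template:
    metadata:
      labels:
        app: statefulset-pvc
    spec:
      containers:
      - name: nginx
        image: nginx:1.24.0
        volumeMounts:
        - name: www
          mountPath: /usr/share/nginx/html
  # one PVC per Pod, named <template>-<statefulset>-<ordinal>: www-statefulset-pvc-0, -1, -2
  volumeClaimTemplates:
  - metadata:
      name: www
    spec:
      accessModes: ["ReadWriteOnce"]
      storageClassName: standard   # assumption: replace with an existing StorageClass
      resources:
        requests:
          storage: 1Gi
```

If `statefulset-pvc-1` is deleted, its replacement keeps the same name and reattaches `www-statefulset-pvc-1`. By default, scaling down or deleting the StatefulSet leaves the PVCs in place.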

12.6、Job

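apiVersion: batch/v1
kind: Job
metadata:
  name: job-test
  labels:
    app: job-test
spec:
  # number of Pods that must finish successfully; they run one at a time because parallelism defaults to 1
  completions: 2
  template:
    metadata:
      name: job-pod
      labels:
        app: job1
    spec:
      containers:
      - name: job-container
        # no command: alpine's default /bin/sh exits immediately, so each Pod completes at once
        image: alpine
      restartPolicy: Never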

[root@k-master ~]# kubectl apply -f job1.yaml
job.batch/job-test created
[root@k-master ~]# kubectl get pods
NAME                           READY   STATUS      RESTARTS   AGE
daemonset1-chzp6               1/1     Running     0          84m
daemonset1-wkj7r               1/1     Running     0          84m
deployment1-58484bd895-qt9nd   1/1     Running     0          24h
deployment1-58484bd895-zlb4c   1/1     Running     0          24h
job-test-mt6ds                 0/1     Completed   0          80s
job-test-prxqt                 0/1     Completed   0          62s
statefulset-0                  1/1     Running     0          43m
statefulset-1                  1/1     Running     0          43m
statefulset-2                  1/1     Running     0          43m
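The two Pods above finished one after the other (80s and 62s old). Below is a sketch of the other Job controls, with a hypothetical Job name `job-parallel` and illustrative values. `ttlSecondsAfterFinished` is stable as of Kubernetes 1.23.

```yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: job-parallel
spec:
  completions: 4                 # total number of successful Pods required
  parallelism: 2                 # at most 2 Pods run at the same time
  backoffLimit: 3                # retries before the Job is marked Failed
  activeDeadlineSeconds: 120     # the whole Job is stopped after 120 s
  ttlSecondsAfterFinished: 600   # Job and its Pods are deleted 10 minutes after finishing
  template:
    spec:
      containers:
      - name: job-container
        image: alpine
        command: ["/bin/sh", "-c", "echo processing; sleep 5"]
      restartPolicy: Never
```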

12.7、CronJob

A CronJob creates a Job on each scheduled run, and that Job runs the task.

apiVersion: batch/v1
kind: CronJob
metadata:
  name: cronjob
spec:
  jobTemplate:
    metadata:
      name: job-test
      labels:
        app: job-test
    spec:
      completions: 2
      template:
        metadata:
          name: job-pod
          labels:
            app: job1
        spec:
          containers:
          - name: job-container
            image: alpine
            command: ["/bin/sh", "-c", "echo hello world"]
          restartPolicy: Never
  # every minute
  schedule: "*/1 * * * *"

[root@k-master ~]# kubectl apply -f cronjob1.yaml
cronjob.batch/cronjob created
[root@k-master ~]# kubectl get cronjobs
NAME      SCHEDULE      SUSPEND   ACTIVE   LAST SCHEDULE   AGE
cronjob   */1 * * * *   False     0        <none>          28s
[root@k-master ~]# kubectl get pods
NAME                           READY   STATUS      RESTARTS   AGE
cronjob-28037753-9frvk         0/1     Completed   0          36s
cronjob-28037753-qbmwv         0/1     Completed   0          20s
daemonset1-chzp6               1/1     Running     0          115m
daemonset1-wkj7r               1/1     Running     0          115m
deployment1-58484bd895-qt9nd   1/1     Running     0          25h
deployment1-58484bd895-zlb4c   1/1     Running     0          25h
job-test-mt6ds                 0/1     Completed   0          32m
job-test-prxqt                 0/1     Completed   0          32m
statefulset-0                  1/1     Running     0          74m
statefulset-1                  1/1     Running     0          74m
statefulset-2                  1/1     Running     0          74m
[root@k-master ~]# kubectl logs cronjob-28037753-9frvk
hello world
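A CronJob that fires every minute quickly accumulates Jobs. Below is a sketch of the fields that control overlap and history, with a hypothetical name `cronjob-policy` and illustrative values. The history limits shown are the defaults.

```yaml
apiVersion: batch/v1
kind: CronJob
metadata:
  name: cronjob-policy
spec:
  schedule: "*/5 * * * *"          # every 5 minutes
  concurrencyPolicy: Forbid        # skip a run while the previous Job is still active (Allow / Forbid / Replace)
  startingDeadlineSeconds: 60      # a run that cannot start within 60 s of its time is skipped
  successfulJobsHistoryLimit: 3    # keep the last 3 successful Jobs (default 3)
  failedJobsHistoryLimit: 1        # keep the last failed Job (default 1)
  suspend: false                   # true pauses scheduling without deleting the CronJob
  jobTemplate:
    spec:
      template:
        spec:
          containers:
          - name: job-container
            image: alpine
            command: ["/bin/sh", "-c", "date; echo hello world"]
          restartPolicy: Never
```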

13、Service

13.1、ClusterIP

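apiVersion: v1
kind: Service
metadata:
  name: kser1
spec:
  selector:
    app: nginx-pod
  ports:
  - port: 80
    targetPort: 8080
  type: ClusterIP
  # Allocated randomly if omitted; None makes it a headless Service
  clusterIP: 10.96.80.80
  # For dual-stack: at most one IPv4 and one IPv6 address, and the first entry must equal clusterIP
  clusterIPs:
  - 10.96.80.80
  # Internal traffic policy: Cluster balances across all endpoints; Local only uses endpoints on the
  # client's own node (traffic is dropped if that node has none)
  internalTrafficPolicy: Cluster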

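13.2、NodePort

apiVersion: v1
kind: Service
metadata:
  name: kser1
spec:
  selector:
    app: nginx-pod
  ports:
  - port: 80
    targetPort: 8080
    # Allocated randomly if omitted; must lie in the node port range (30000-32767 by default)
    nodePort: 30099
  type: NodePort
  # External traffic policy: Cluster SNATs the traffic, hiding the client source IP;
  # Local keeps the client source IP but only forwards to Pods on the receiving node
  externalTrafficPolicy: Cluster
  # Additional IPs on which the Service is also reachable (this is not a client whitelist)
  externalIPs:
  # another IP
  - 10.0.4.14
  # Session affinity
  sessionAffinity: "ClientIP"
  # Session affinity settings
  sessionAffinityConfig:
    clientIP:
      timeoutSeconds: 300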

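13.3、headless

A headless Service (clusterIP: None) gets no virtual IP; its DNS name resolves directly to the IPs of the selected Pods.

apiVersion: v1
kind: Service
metadata:
  name: mysql
  namespace: mysql
  labels:
    app: mysql
spec:
  selector:
    # match Pods labeled app: mysql
    app: mysql
  clusterIP: None
  ports:
  - name: mysql
    port: 3306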

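13.4、Proxying an External Service (Endpoints)

apiVersion: v1
kind: Endpoints
metadata:
  labels:
    app: nginx-svc-external
  # must be identical to the Service name, in the same namespace
  name: nginx-svc-external
subsets:
- addresses:
  - ip: 150.158.187.211
  ports:
  - name: http
    port: 80
    protocol: TCP
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: nginx-svc-external
  # The Endpoints object is linked to this Service by name, not by label;
  # the Service must have no selector, or Kubernetes manages the Endpoints itself
  name: nginx-svc-external
spec:
  ports:
  - name: http
    port: 80
    protocol: TCP
    targetPort: 80
  type: ClusterIP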

13.5、Proxying an External Service (ExternalName)

apiVersion: v1
kind: Service
metadata:
  # Service names must be lowercase DNS labels, so externalName1 would be rejected
  name: externalname1
spec:
  type: ExternalName
  externalName: baidu.com

[root@k-master ~]# kubectl exec -it deployment1-58484bd895-qt9nd -- /bin/sh
/ # curl externalname1
curl: (56) Recv failure: Connection reset by peer
/ # ping externalname1
PING externalname1 (39.156.66.10): 56 data bytes
64 bytes from 39.156.66.10: seq=0 ttl=248 time=30.950 ms
64 bytes from 39.156.66.10: seq=1 ttl=248 time=30.944 ms
64 bytes from 39.156.66.10: seq=2 ttl=248 time=30.930 ms
^C
--- externalname1 ping statistics ---
3 packets transmitted, 3 packets received, 0% packet loss
round-trip min/avg/max = 30.930/30.941/30.950 ms
/ # exit

An ExternalName Service is only a DNS CNAME record pointing to baidu.com, which is why ping resolves it. The curl failure is most likely because the HTTP Host header is still externalname1, which the remote server does not accept.

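14、Force Deletion

14.1、Force-delete a Pod

kubectl delete pod <pod-name> -n <name-space> --force --grace-period=0

14.2、Force-delete a PV/PVC

Clearing the finalizers lets a PV or PVC that is stuck in Terminating be removed:

kubectl patch pv xxx -p '{"metadata":{"finalizers":null}}'
kubectl patch pvc xxx -p '{"metadata":{"finalizers":null}}'

14.3、Force-delete a Namespace

kubectl delete ns <terminating-namespace> --force --grace-period=0

If the namespace stays in Terminating, this usually does not help, because the deletion is waiting on a pending finalizer.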

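15、Ingress

Exposing Services outside the cluster through NodePort performs poorly and is not secure, and it cannot do rate limiting or route requests by host or path. Another layer of abstraction is therefore needed on top of Services.

Ingress acts as the single entry point to the whole cluster and handles routing, rate limiting, and similar functions.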
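Below is a sketch of an Ingress rule that routes by host and path and applies a per-client rate limit, to show what that entry point does. It assumes the `nginx` IngressClass created by the installation manifest in 15.1 and a Service such as `kser1` (13.1) listening on port 80. The host `demo.example.com` and the name `ingress-demo` are hypothetical. `nginx.ingress.kubernetes.io/limit-rps` is an ingress-nginx annotation.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-demo
  annotations:
    # ingress-nginx: accept about 10 requests per second from each client IP
    nginx.ingress.kubernetes.io/limit-rps: "10"
spec:
  ingressClassName: nginx          # handled by the ingress-nginx controller
  rules:
  - host: demo.example.com         # hypothetical host name
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: kser1            # assumption: an existing Service on port 80
            port:
              number: 80
```

Because the controller in 15.1 runs on the host network of the ingress node, a request such as `curl -H "Host: demo.example.com" http://<ingress-node-ip>/` should reach the backend Service.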

15.1、Installation

The manifest below is based on the ingress-nginx controller v1.3.1 deploy manifest. It has been changed to run the controller as a DaemonSet on the host network of nodes labeled node-role=ingress, and to pull images from an Aliyun mirror.

apiVersion: v1
kind: Namespace
metadata:
  labels:
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
  name: ingress-nginx
---
apiVersion: v1
automountServiceAccountToken: true
kind: ServiceAccount
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx
  namespace: ingress-nginx
---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx-admission
  namespace: ingress-nginx
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx
  namespace: ingress-nginx
rules:
- apiGroups:
  - ""
  resources:
  - namespaces
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - configmaps
  - pods
  - secrets
  - endpoints
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - services
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - networking.k8s.io
  resources:
  - ingresses
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - networking.k8s.io
  resources:
  - ingresses/status
  verbs:
  - update
- apiGroups:
  - networking.k8s.io
  resources:
  - ingressclasses
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resourceNames:
  - ingress-controller-leader
  resources:
  - configmaps
  verbs:
  - get
  - update
- apiGroups:
  - ""
  resources:
  - configmaps
  verbs:
  - create
- apiGroups:
  - coordination.k8s.io
  resourceNames:
  - ingress-controller-leader
  resources:
  - leases
  verbs:
  - get
  - update
- apiGroups:
  - coordination.k8s.io
  resources:
  - leases
  verbs:
  - create
- apiGroups:
  - ""
  resources:
  - events
  verbs:
  - create
  - patch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx-admission
  namespace: ingress-nginx
rules:
- apiGroups:
  - ""
  resources:
  - secrets
  verbs:
  - get
  - create
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx
rules:
- apiGroups:
  - ""
  resources:
  - configmaps
  - endpoints
  - nodes
  - pods
  - secrets
  - namespaces
  verbs:
  - list
  - watch
- apiGroups:
  - coordination.k8s.io
  resources:
  - leases
  verbs:
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - services
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - networking.k8s.io
  resources:
  - ingresses
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - events
  verbs:
  - create
  - patch
- apiGroups:
  - networking.k8s.io
  resources:
  - ingresses/status
  verbs:
  - update
- apiGroups:
  - networking.k8s.io
  resources:
  - ingressclasses
  verbs:
  - get
  - list
  - watch
---

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx-admission
rules:
- apiGroups:
  - admissionregistration.k8s.io
  resources:
  - validatingwebhookconfigurations
  verbs:
  - get
  - update
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx
  namespace: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: ingress-nginx
subjects:
- kind: ServiceAccount
  name: ingress-nginx
  namespace: ingress-nginx
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx-admission
  namespace: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: ingress-nginx-admission
subjects:
- kind: ServiceAccount
  name: ingress-nginx-admission
  namespace: ingress-nginx
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: ingress-nginx
subjects:
- kind: ServiceAccount
  name: ingress-nginx
  namespace: ingress-nginx
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx-admission
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: ingress-nginx-admission
subjects:
- kind: ServiceAccount
  name: ingress-nginx-admission
  namespace: ingress-nginx
---
apiVersion: v1
data:
  allow-snippet-annotations: "true"
kind: ConfigMap
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx-controller
  namespace: ingress-nginx
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  ipFamilies:
  - IPv4
  ipFamilyPolicy: SingleStack
  ports:
  - appProtocol: http
    name: http
    port: 80
    protocol: TCP
    targetPort: http
  - appProtocol: https
    name: https
    port: 443
    protocol: TCP
    targetPort: https
  selector:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
  type: NodePort
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx-controller-admission
  namespace: ingress-nginx
spec:
  ports:
  - appProtocol: https
    name: https-webhook
    port: 443
    targetPort: webhook
  selector:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
  type: ClusterIP
---

apiVersion: apps/v1
# kind: Deployment
kind: DaemonSet
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  minReadySeconds: 0
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app.kubernetes.io/component: controller
      app.kubernetes.io/instance: ingress-nginx
      app.kubernetes.io/name: ingress-nginx
  template:
    metadata:
      labels:
        app.kubernetes.io/component: controller
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/name: ingress-nginx
    spec:
      containers:
      - args:
        - /nginx-ingress-controller
        - --election-id=ingress-controller-leader
        - --controller-class=k8s.io/ingress-nginx
        - --ingress-class=nginx
        - --configmap=$(POD_NAMESPACE)/ingress-nginx-controller
        - --validating-webhook=:8443
        - --validating-webhook-certificate=/usr/local/certificates/cert
        - --validating-webhook-key=/usr/local/certificates/key
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: LD_PRELOAD
          value: /usr/local/lib/libmimalloc.so
        # image: registry.k8s.io/ingress-nginx/controller:v1.3.1@sha256:54f7fe2c6c5a9db9a0ebf1131797109bb7a4d91f56b9b362bde2abd237dd1974
        image: registry.cn-hangzhou.aliyuncs.com/google_containers/nginx-ingress-controller:v1.3.1
        imagePullPolicy: IfNotPresent
        lifecycle:
          preStop:
            exec:
              command:
              - /wait-shutdown
        livenessProbe:
          failureThreshold: 5
          httpGet:
            path: /healthz
            port: 10254
            scheme: HTTP
          initialDelaySeconds: 10
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 1
        name: controller
        ports:
        - containerPort: 80
          name: http
          protocol: TCP
        - containerPort: 443
          name: https
          protocol: TCP
        - containerPort: 8443
          name: webhook
          protocol: TCP
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /healthz
            port: 10254
            scheme: HTTP
          initialDelaySeconds: 10
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 1
        resources:
          requests:
            cpu: 100m
            memory: 90Mi
        securityContext:
          allowPrivilegeEscalation: true
          capabilities:
            add:
            - NET_BIND_SERVICE
            drop:
            - ALL
          runAsUser: 101
        volumeMounts:
        - mountPath: /usr/local/certificates/
          name: webhook-cert
          readOnly: true
      # dnsPolicy: ClusterFirst
      dnsPolicy: ClusterFirstWithHostNet
      # use the node's network namespace, so ports 80/443 are opened directly on the node
      hostNetwork: true
      nodeSelector:
        # run only on nodes labeled node-role=ingress,
        # e.g. kubectl label node <node-name> node-role=ingress
        # kubernetes.io/os: linux
        node-role: ingress
      serviceAccountName: ingress-nginx
      terminationGracePeriodSeconds: 300
      volumes:
      - name: webhook-cert
        secret:
          secretName: ingress-nginx-admission
---

apiVersion: batch/v1

kind: Job

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.1

name: ingress-nginx-admission-create

namespace: ingress-nginx

spec:

template:

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.1

name: ingress-nginx-admission-create

spec:

containers:

- args:

- create

- --host=ingress-nginx-controller-admission,ingress-nginx-controller-admission.$(POD_NAMESPACE).svc

- --namespace=$(POD_NAMESPACE)

- --secret-name=ingress-nginx-admission

env:

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

# image: registry.k8s.io/ingress-nginx/kube-webhook-certgen:v1.3.0@sha256:549e71a6ca248c5abd51cdb73dbc3083df62cf92ed5e6147c780e30f7e007a47

image: registry.cn-hangzhou.aliyuncs.com/google_containers/kube-webhook-certgen:v1.3.0

imagePullPolicy: IfNotPresent

name: create

securityContext:

allowPrivilegeEscalation: false

nodeSelector:

kubernetes.io/os: linux

restartPolicy: OnFailure

securityContext:

fsGroup: 2000

runAsNonRoot: true

runAsUser: 2000

serviceAccountName: ingress-nginx-admission

---

apiVersion: batch/v1

kind: Job

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.1

name: ingress-nginx-admission-patch

namespace: ingress-nginx

spec:

template:

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.1

name: ingress-nginx-admission-patch

spec:

containers:

- args:

- patch

- --webhook-name=ingress-nginx-admission

- --namespace=$(POD_NAMESPACE)

- --patch-mutating=false

- --secret-name=ingress-nginx-admission

- --patch-failure-policy=Fail

env:

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

image: registry.k8s.io/ingress-nginx/kube-webhook-certgen:v1.3.0@sha256:549e71a6ca248c5abd51cdb73dbc3083df62cf92ed5e6147c780e30f7e007a47

imagePullPolicy: IfNotPresent

name: patch

securityContext:

allowPrivilegeEscalation: false

nodeSelector:

kubernetes.io/os: linux

restartPolicy: OnFailure

securityContext:

fsGroup: 2000

runAsNonRoot: true

runAsUser: 2000

serviceAccountName: ingress-nginx-admission

---
apiVersion: networking.k8s.io/v1
kind: IngressClass
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: nginx
spec:
  controller: k8s.io/ingress-nginx
---

apiVersion: admissionregistration.k8s.io/v1

kind: ValidatingWebhookConfiguration

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.1

name: ingress-nginx-admission

webhooks:

- admissionReviewVersions:

- v1

clientConfig:

service:

name: ingress-nginx-controller-admission

namespace: ingress-nginx

path: /networking/v1/ingresses

failurePolicy: Fail

matchPolicy: Equivalent

name: validate.nginx.ingress.kubernetes.io

rules:

- apiGroups:

- networking.k8s.io

apiVersions:

- v1

operations:

- CREATE

- UPDATE

resources:

- ingresses

sideEffects: None

[root@k-master ~]# kubectl apply -f ingress-nginx.yaml
service/ingress-nginx created
[root@k-master ~]# kubectl get all -n ingress-nginx
NAME                                       READY   STATUS      RESTARTS   AGE
pod/ingress-nginx-admission-create-2hrfl   0/1     Completed   0          4m43s
pod/ingress-nginx-admission-patch-lh8ph    0/1     Completed   0          4m43s

NAME                                         TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)                      AGE
service/ingress-nginx-controller             NodePort    10.96.33.14     <none>        80:31926/TCP,443:32352/TCP   4m43s
service/ingress-nginx-controller-admission   ClusterIP   10.96.222.198   <none>        443/TCP                      4m43s

NAME                                      DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR       AGE
daemonset.apps/ingress-nginx-controller   0         0         0       0            0           node-role=ingress   4m43s

NAME                                       COMPLETIONS   DURATION   AGE
job.batch/ingress-nginx-admission-create   1/1           36s        4m43s
job.batch/ingress-nginx-admission-patch    1/1           44s        4m43s

# Label a worker node so the ingress-nginx-controller DaemonSet (node selector node-role=ingress, currently DESIRED 0) can schedule a controller Pod on it
[root@k-master ~]# kubectl get node
NAME         STATUS   ROLES                  AGE   VERSION
k-cluster1   Ready    <none>                 3d    v1.22.0
k-cluster2   Ready    <none>                 3d    v1.22.0
k-master     Ready    control-plane,master   3d    v1.22.0
[root@k-master ~]# kubectl label node k-cluster1 node-role=ingress
node/k-cluster1 labeled
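Once k-cluster1 carries the `node-role=ingress` label, the `ingress-nginx-controller` DaemonSet should schedule one controller Pod there, because its node selector in the `kubectl get all` output above is `node-role=ingress`. `kubectl get ds -n ingress-nginx` should then report DESIRED 1.

The manifest above also creates an IngressClass named `nginx`. The Ingress below is a minimal sketch of how that class might then be used to route traffic. The names `demo-ingress`, the host `demo.example.com`, and the backend Service `demo-svc` on port 80 are hypothetical placeholders, not part of the original notes. The Service is assumed to already exist in the `default` namespace.

```yaml
# Hypothetical example: demo-ingress, demo.example.com and demo-svc are placeholders
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: demo-ingress           # hypothetical name
  namespace: default
spec:
  ingressClassName: nginx      # the IngressClass "nginx" created by ingress-nginx.yaml
  rules:
  - host: demo.example.com     # hypothetical host name
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: demo-svc     # hypothetical Service, assumed to exist already
            port:
              number: 80
```

Save this to a file and apply it with `kubectl apply -f <file>`. The ValidatingWebhookConfiguration above uses `failurePolicy: Fail`, so the API server rejects the Ingress whenever the `ingress-nginx-controller-admission` Service cannot be reached. It is therefore worth waiting until the controller Pod on k-cluster1 is Ready before applying.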