BERT (CPU) code reproduction

Line-by-line comments and line-by-line analysis; runs as-is. Code from https://github.com/graykode/nlp-tutorial/tree/master/5-2.BERT


import math

import re

import time

from random import randint, random, randrange, shuffle

import numpy as np

import torch

import torch.nn as nn

import torch.optim as optim

from torch.utils.data import Dataset, DataLoader

# 10. MyDataset
class MyDataset(Dataset):
    # Store the tensors built from make_batch
    def __init__(self, input_ids, segment_ids, masked_tokens, masked_pos, isNext):
        self.input_ids = input_ids
        self.segment_ids = segment_ids
        self.masked_tokens = masked_tokens
        self.masked_pos = masked_pos
        self.isNext = isNext

    # Return the number of samples (rows of data)
    def __len__(self):
        return len(self.input_ids)
        # equivalent: return self.input_ids.shape[0]

    # Return the sample at position idx; each item has one dimension fewer than the tensors built from make_batch
    def __getitem__(self, idx):
        return self.input_ids[idx], self.segment_ids[idx], self.masked_tokens[idx], self.masked_pos[idx], self.isNext[idx]

# 9. GELU activation function (Gaussian Error Linear Units)
def gelu(x):
    """
    Implementation of the gelu activation function.
    For information: OpenAI GPT's gelu is slightly different (and gives slightly different results):
    0.5 * x * (1 + torch.tanh(math.sqrt(2 / math.pi) * (x + 0.044715 * torch.pow(x, 3))))
    Also see https://arxiv.org/abs/1606.08415
    """
    return x * 0.5 * (1.0 + torch.erf(x / math.sqrt(2.0)))  # x==[6, 30, 3072]
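The docstring above mentions that the tanh form gives slightly different results from the erf form. The following is a minimal sketch, using only the standard library on scalar inputs, to see how large that gap is. The helper names `gelu_erf` and `gelu_tanh` and the grid over [-5, 5] are choices made for this illustration, not part of the original code.

```python
import math


def gelu_erf(x: float) -> float:
    """Exact form: x * Phi(x), where Phi is the standard normal CDF."""
    return x * 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def gelu_tanh(x: float) -> float:
    """Tanh approximation quoted in the gelu docstring."""
    inner = math.sqrt(2.0 / math.pi) * (x + 0.044715 * x ** 3)
    return 0.5 * x * (1.0 + math.tanh(inner))


# Largest absolute gap between the two forms on a grid over [-5, 5]
grid = [i / 100.0 for i in range(-500, 501)]
max_gap = max(abs(gelu_erf(x) - gelu_tanh(x)) for x in grid)
print(f"max |erf form - tanh form| on [-5, 5]: {max_gap:.2e}")
```

On this grid the two forms stay close, so swapping one for the other should mainly matter when reproducing someone else's numbers exactly.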

# 8. PoswiseFeedForwardNet
class PoswiseFeedForwardNet(nn.Module):
    def __init__(self):
        super(PoswiseFeedForwardNet, self).__init__()
        self.fc1 = nn.Linear(d_model, d_ff)
        self.fc2 = nn.Linear(d_ff, d_model)

    def forward(self, x):
        # x==[6, 30, 768]
        return self.fc2(gelu(self.fc1(x)))  # 9.

# 7. ScaledDotProductAttention
class ScaledDotProductAttention(nn.Module):
    def __init__(self):
        super(ScaledDotProductAttention, self).__init__()

    def forward(self, Q, K, V, attn_mask):
        # Q,K,V==[6, 12, 30, 64] attn_mask==[6, 12, 30, 30]
        scores = torch.matmul(Q, K.transpose(-1, -2)) / np.sqrt(d_k)  # [6, 12, 30, 64]*[6, 12, 64, 30]==[6, 12, 30, 30]
        scores.masked_fill_(attn_mask, -1e9)  # padded key positions get a very negative score
        # softmax along each row of the score matrix, i.e. over the last (key) dimension
        attn = nn.Softmax(dim=-1)(scores)  # [6, 12, 30, 30]
        context = torch.matmul(attn, V)  # [6, 12, 30, 30]*[6, 12, 30, 64]==[6, 12, 30, 64]
        return context, attn
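To see why filling masked scores with -1e9 before the softmax removes those positions, here is a minimal sketch on a single row of scores, written with the standard library only. The function name `masked_softmax` and the example numbers are assumptions made for illustration.

```python
import math


def masked_softmax(scores, mask, fill=-1e9):
    """Softmax over one row of scores; positions where mask is True are set to `fill` first."""
    filled = [fill if m else s for s, m in zip(scores, mask)]
    top = max(filled)  # subtract the row maximum for numerical stability
    exps = [math.exp(v - top) for v in filled]
    total = sum(exps)
    return [e / total for e in exps]


scores = [1.0, 2.0, 3.0]
mask = [False, False, True]  # the third key is padding
weights = masked_softmax(scores, mask)
print(weights)
```

The masked position ends up with zero weight, and the remaining weights are renormalized over the unmasked keys only.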

# 6. MultiHeadAttention
class MultiHeadAttention(nn.Module):
    def __init__(self):
        super(MultiHeadAttention, self).__init__()
        self.W_Q = nn.Linear(d_model, d_k * n_heads)
        self.W_K = nn.Linear(d_model, d_k * n_heads)
        self.W_V = nn.Linear(d_model, d_v * n_heads)
        self.linear = nn.Linear(n_heads * d_v, d_model)
        self.layer_norm = nn.LayerNorm(d_model)

    def forward(self, Q, K, V, attn_mask):
        # Q,K,V==[6, 30, 768] attn_mask==[6, 30, 30]
        residual = Q  # kept for the residual connection
        batch_size = Q.size(0)
        q_s = self.W_Q(Q).view(batch_size, -1, n_heads, d_k).transpose(1, 2)  # [6, 30, 12, 64]--->[6, 12, 30, 64]
        k_s = self.W_K(K).view(batch_size, -1, n_heads, d_k).transpose(1, 2)  # [6, 30, 12, 64]--->[6, 12, 30, 64]
        v_s = self.W_V(V).view(batch_size, -1, n_heads, d_v).transpose(1, 2)  # [6, 30, 12, 64]--->[6, 12, 30, 64]
        attn_mask = attn_mask.unsqueeze(1).repeat(1, n_heads, 1, 1)  # [6, 1, 30, 30]--->[6, 12, 30, 30]
        # attn==[6, 12, 30, 30] context==[6, 12, 30, 64]
        context, attn = ScaledDotProductAttention()(q_s, k_s, v_s, attn_mask)  # 7.
        context = context.transpose(1, 2).contiguous().view(batch_size, -1, n_heads * d_v)  # [6, 12, 30, 64]--->[6, 30, 12, 64]--->[6, 30, 768]
        output = self.linear(context)  # [6, 30, 768]--->[6, 30, 768]
        return self.layer_norm(output + residual), attn

# 5. EncoderLayer: two parts, a multi-head self-attention layer and a feed-forward network
class EncoderLayer(nn.Module):
    def __init__(self):
        super(EncoderLayer, self).__init__()
        self.enc_self_attn = MultiHeadAttention()  # 6.
        self.pos_ffn = PoswiseFeedForwardNet()  # 8.

    def forward(self, enc_inputs, enc_self_attn_mask):
        # attn==[6, 12, 30, 30] enc_outputs==[6, 30, 768]
        enc_outputs, attn = self.enc_self_attn(enc_inputs, enc_inputs, enc_inputs, enc_self_attn_mask)
        enc_outputs = self.pos_ffn(enc_outputs)  # [6, 30, 768]
        return enc_outputs, attn

# 4. get_attn_pad_mask
def get_attn_pad_mask(seq_q, seq_k):
    batch_size, len_q = seq_q.size()  # [6, 30]
    batch_size, len_k = seq_k.size()  # [6, 30]
    # True wherever the key token is [PAD] (id 0)
    pad_attn_mask = seq_k.eq(0).unsqueeze(1)  # [6, 30]--->[6, 1, 30]
    return pad_attn_mask.expand(batch_size, len_q, len_k)  # [6, 30, 30]
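The mask built above marks key positions, not query positions: every row of the `len_q x len_k` matrix is the same "is this key a [PAD]?" vector. A minimal plain-Python sketch for one sequence makes the layout visible. The function name `pad_mask` and the sample ids are assumptions made for illustration.

```python
def pad_mask(seq_q, seq_k, pad_id=0):
    """Return a len_q x len_k matrix; entry [i][j] is True when key j is padding."""
    key_is_pad = [tok == pad_id for tok in seq_k]
    # Every query row shares the same key mask, mirroring unsqueeze(1) + expand
    return [list(key_is_pad) for _ in seq_q]


ids = [1, 7, 9, 2, 0, 0]  # [CLS] w w [SEP] [PAD] [PAD]
mask = pad_mask(ids, ids)
for row in mask:
    print(row)
```

Rows that belong to padded queries are not masked as a whole. Their outputs are still computed and simply go unused later, since neither the [CLS] position nor the real masked positions point at padding.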

# 3. Embedding layer: builds the embedding lookup tables
class Embedding(nn.Module):
    def __init__(self):
        super(Embedding, self).__init__()
        self.tok_embed = nn.Embedding(vocab_size, d_model)  # Token Embeddings [29, 768]
        self.seg_embed = nn.Embedding(n_segments, d_model)  # Segment Embeddings [2, 768]
        self.pos_embed = nn.Embedding(max_len, d_model)  # Position Embeddings [30, 768]
        self.norm = nn.LayerNorm(d_model)

    def forward(self, x, seg):  # x==[6, 30] seg==[6, 30]
        seq_len = x.size(1)  # 30
        pos = torch.arange(seq_len, dtype=torch.long, device=x.device)  # (30,) tensor([0,1,...29])
        pos = pos.unsqueeze(0).expand_as(x)  # [1, 30]--->[6, 30]
        embedding = self.tok_embed(x) + self.seg_embed(seg) + self.pos_embed(pos)  # [6, 30, 768]+[6, 30, 768]+[6, 30, 768]==[6, 30, 768]
        return self.norm(embedding)

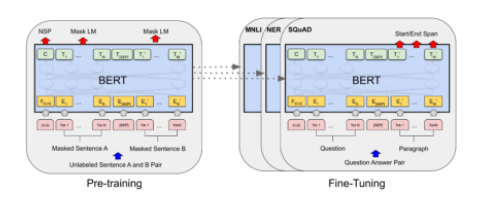

# 2. Overall BERT model architecture
class BERT(nn.Module):
    def __init__(self):
        super(BERT, self).__init__()
        self.embedding = Embedding()  # 3.
        self.layers = nn.ModuleList([EncoderLayer() for _ in range(n_layers)])  # 5.
        self.fc = nn.Linear(d_model, d_model)  # NSP task: linear layer applied to the [CLS] output
        self.activ1 = nn.Tanh()  # NSP task: Tanh activation after that linear layer
        self.classifier = nn.Linear(d_model, 2)  # NSP task: final linear layer for binary classification
        self.linear = nn.Linear(d_model, d_model)  # MLM task: linear layer applied to the masked positions
        self.activ2 = gelu  # MLM task: gelu activation after that linear layer
        self.norm = nn.LayerNorm(d_model)  # MLM task: layer normalization after the linear layer and activation
        # decoder is shared with embedding layer
        embed_weight = self.embedding.tok_embed.weight  # Token Embeddings [29, 768]
        n_vocab, n_dim = embed_weight.size()  # n_vocab==29 n_dim==768
        self.decoder = nn.Linear(n_dim, n_vocab, bias=False)  # maps 768 to the vocabulary size (29)
        self.decoder.weight = embed_weight  # weight w, tied to the token embedding
        self.decoder_bias = nn.Parameter(torch.zeros(n_vocab))  # bias b: a learnable 1-D parameter of 29 zeros

    def forward(self, input_ids, segment_ids, masked_pos):
        output = self.embedding(input_ids, segment_ids)  # [6, 30, 768]
        # get_attn_pad_mask records where the [PAD] tokens are so that self-attention can ignore them  4.
        enc_self_attn_mask = get_attn_pad_mask(input_ids, input_ids)  # [6, 30, 30]
        for layer in self.layers:
            output, enc_self_attn = layer(output, enc_self_attn_mask)  # enc_self_attn==[6, 12, 30, 30] output==[6, 30, 768]
        # [CLS] branch (NSP)
        h_pooled = self.activ1(self.fc(output[:, 0]))  # [6, 30, 768]--->[6, 768]
        logits_clsf = self.classifier(h_pooled)  # [6, 2]
        # masked-position branch (MLM)
        masked_pos = masked_pos[:, :, None].expand(-1, -1, output.size(-1))  # [6, 5]--->[6, 5, 1]--->[6, 5, 768]
        h_masked = torch.gather(output, 1, masked_pos)  # hidden states at the masked positions: [6, 5, 768]
        h_masked = self.norm(self.activ2(self.linear(h_masked)))  # [6, 5, 768]
        logits_lm = self.decoder(h_masked) + self.decoder_bias  # [6, 5, 29]+[29]==[6, 5, 29], added along the last dimension
        return logits_lm, logits_clsf
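As a rough sanity check on the model size, the layer shapes above can be turned into a parameter count by hand. This is a sketch under the hyperparameters used in this post (vocab_size=29, max_len=30, d_model=768, d_ff=3072, 12 heads, 12 layers). The helper names `linear_params` and `layer_norm_params` are made up for this illustration, and the tied `decoder.weight` is counted once because it is the same tensor as the token embedding.

```python
def linear_params(n_in, n_out, bias=True):
    """Weights plus optional bias of a fully connected layer."""
    return n_in * n_out + (n_out if bias else 0)


def layer_norm_params(dim):
    """LayerNorm has one scale and one shift per feature."""
    return 2 * dim


vocab_size, n_segments, max_len = 29, 2, 30
d_model, d_ff, d_k, d_v, n_heads, n_layers = 768, 3072, 64, 64, 12, 12

# Token, segment and position tables plus the embedding LayerNorm
embedding = (vocab_size + n_segments + max_len) * d_model + layer_norm_params(d_model)

# W_Q, W_K, W_V, the output projection and the attention LayerNorm
attention = (2 * linear_params(d_model, d_k * n_heads)
             + linear_params(d_model, d_v * n_heads)
             + linear_params(n_heads * d_v, d_model)
             + layer_norm_params(d_model))
feed_forward = linear_params(d_model, d_ff) + linear_params(d_ff, d_model)
encoder = n_layers * (attention + feed_forward)

heads = (linear_params(d_model, d_model)   # fc (NSP)
         + linear_params(d_model, 2)       # classifier (NSP)
         + linear_params(d_model, d_model) # linear (MLM)
         + layer_norm_params(d_model)      # norm (MLM)
         + vocab_size)                     # decoder_bias; decoder.weight is tied

total = embedding + encoder + heads
print(f"per encoder layer: {attention + feed_forward:,}")
print(f"total: {total:,}")
```

Almost all of the parameters sit in the 12 encoder layers. With a 29-word vocabulary the embedding tables are negligible, which is the opposite of a full-size BERT vocabulary.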

# 1. Build the data for the pre-training tasks
def make_batch():
    batch = []  # the result to return
    # Count the positive and negative NSP samples; within a batch the ratio should be close to 1:1
    positive = negative = 0
    while positive != batch_size / 2 or negative != batch_size / 2:
        # Draw two sentence indices at random, e.g. tokens_a_index=3, tokens_b_index=1
        tokens_a_index = randrange(len(sentences))
        tokens_b_index = randrange(len(sentences))
        # Look up the samples, e.g. tokens_a=[5, 23, 26, 20, 9, 13, 18] tokens_b=[27, 11, 23, 8, 17, 28, 12, 22, 16, 25]
        tokens_a = token_list[tokens_a_index]
        tokens_b = token_list[tokens_b_index]
        # Add the special tokens ([CLS] is 1, [SEP] is 2): [1, 5, 23, 26, 20, 9, 13, 18, 2, 27, 11, 23, 8, 17, 28, 12, 22, 16, 25, 2]
        input_ids = [word_dict['[CLS]']] + tokens_a + [word_dict['[SEP]']] + tokens_b + [word_dict['[SEP]']]
        # Segment ids: [0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1]
        segment_ids = [0] * (1 + len(tokens_a) + 1) + [1] * (len(tokens_b) + 1)

        # MASK LM
        # n_pred=3: 15% of the tokens may be masked, capped at max_pred so that the number of
        # predictions per sample stays bounded and enough context remains
        n_pred = min(max_pred, max(1, int(round(len(input_ids) * 0.15))))
        # cand_maked_pos=[1, 2, 3, 4, 5, 6, 7, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18]: only positions
        # that are not [CLS] or [SEP] may be masked, otherwise masking is meaningless
        cand_maked_pos = [i for i, token in enumerate(input_ids) if token != word_dict['[CLS]'] and token != word_dict['[SEP]']]
        # Shuffle, e.g. cand_maked_pos=[6, 5, 17, 3, 1, 13, 16, 10, 12, 2, 9, 7, 11, 18, 4, 14, 15];
        # shuffling is just one of many ways to pick random positions
        shuffle(cand_maked_pos)
        masked_tokens = []  # original tokens at the masked positions, i.e. the ground truth
        masked_pos = []  # which positions were masked
        # Take the first n_pred candidates, e.g. masked_pos=[6, 5, 17] (position indices) and
        # masked_tokens=[13, 9, 16] (the original token ids at those positions)
        for pos in cand_maked_pos[:n_pred]:
            masked_pos.append(pos)
            masked_tokens.append(input_ids[pos])
            p = random()  # draw once so the three cases split 80% / 10% / 10%
            if p < 0.8:  # 80%: replace with [MASK]
                input_ids[pos] = word_dict['[MASK]']
            elif p > 0.9:  # 10%: replace with another word
                index = randint(0, vocab_size - 1)
                while index < 4:  # the first 4 entries are [PAD][CLS][SEP][MASK]; replacing with them is meaningless, so redraw
                    index = randint(0, vocab_size - 1)
                input_ids[pos] = word_dict[number_dict[index]]
            # the remaining 10% (p between 0.8 and 0.9) leaves the token unchanged, so nothing to do

        # Zero Paddings1
        n_pad = max_len - len(input_ids)  # max_len=30 n_pad=10
        input_ids.extend([0] * n_pad)  # pad input_ids with zeros
        # segment_ids must be padded too; 0 collides with the id of the first segment, which is
        # harmless because the padded positions are masked out in attention
        segment_ids.extend([0] * n_pad)

        # Zero Paddings2 (mask 15%) tokens
        # Every sample in a batch must have the same number of mask slots so that the MLM targets
        # form a rectangular tensor; 5, 7 and 8 predictions per sample could not be stacked
        # Padding masked_tokens with 0 works best because 0 is [PAD], which the loss ignores;
        # 1, 2 or 3 ([CLS][SEP][MASK]) would also run, but less cleanly
        if max_pred > n_pred:  # n_pred can never exceed max_pred because of the cap above
            n_pad = max_pred - n_pred
            masked_tokens.extend([0] * n_pad)  # masked_tokens= [13, 9, 16, 0, 0]
            masked_pos.extend([0] * n_pad)  # masked_pos= [6, 5, 17, 0, 0]

        # Check whether the two sentences are adjacent
        if tokens_a_index + 1 == tokens_b_index and positive < batch_size / 2:
            batch.append([input_ids, segment_ids, masked_tokens, masked_pos, True])  # IsNext
            positive += 1
        elif tokens_a_index + 1 != tokens_b_index and negative < batch_size / 2:
            batch.append([input_ids, segment_ids, masked_tokens, masked_pos, False])  # NotNext
            negative += 1
    return batch
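The comments in make_batch describe an 80% / 10% / 10% split between replacing with [MASK], replacing with a random word, and leaving the token unchanged. That split only holds if a single random number decides all three branches; drawing a second number in the `elif` would make the random-replacement case much rarer. Below is a minimal sketch that checks the split empirically. The function name `corrupt`, the seed and the sample size are assumptions for illustration, and the order of the last two branches differs from the code above without changing the proportions.

```python
from collections import Counter
from random import Random


def corrupt(token_id, vocab_size, mask_id, rng, n_special=4):
    """Apply the 80/10/10 rule to one token using a single random draw."""
    p = rng.random()
    if p < 0.8:
        return mask_id, "mask"
    if p < 0.9:
        # Random replacement, skipping the special tokens at ids 0..n_special-1
        return rng.randrange(n_special, vocab_size), "random"
    return token_id, "keep"


rng = Random(0)
n_trials = 100_000
counts = Counter(corrupt(10, 29, 3, rng)[1] for _ in range(n_trials))
shares = {action: count / n_trials for action, count in counts.items()}
print(shares)
```

A random replacement can still land on the original token by chance, so the share of tokens that end up unchanged is slightly above 10%.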

if __name__ == '__main__':
    # BERT Parameters
    max_len = 30  # maximum sequence length, meant to cover 95% of samples (every sequence in a batch has 30 tokens; shorter ones are padded with [PAD])
    batch_size = 6
    max_pred = 5  # upper bound on masked tokens to predict (e.g. 15% of 100 tokens is 15, but only max_pred are masked)
    n_layers = 12  # number of Encoder Layer
    n_heads = 12  # number of heads in Multi-Head Attention
    d_model = 768  # Embedding Size (Token Embeddings, Segment Embeddings, Position Embeddings)
    d_ff = 3072  # 4*d_model, FeedForward dimension
    d_k = d_v = 64  # per-head dimension of K (= Q) and V
    n_segments = 2  # number of sentences (segments) in the encoder input

    text = (
        'Hello, how are you? I am Romeo.\n'
        'Hello, Romeo My name is Juliet. Nice to meet you.\n'
        'Nice meet you too. How are you today?\n'
        'Great. My baseball team won the competition.\n'
        'Oh Congratulations, Juliet\n'
        'Thanks you Romeo'
    )
    # Lowercase, strip '.', ',', '?', '!', '-' and split on newlines ---> a list of 6 sentence strings
    sentences = re.sub(r'[.,!?\-]', '', text.lower()).split('\n')

    # ' '.join(sentences): concatenates the sentences into one string, separated by ' '
    # split(): splits on whitespace by default and returns a list of strings ['', '', ...]
    # set(): returns the deduplicated set {}; the order is not preserved
    # list(): returns a list []
    word_list = list(set(' '.join(sentences).split()))
    # word_to_id
    word_dict = {'[PAD]': 0, '[CLS]': 1, '[SEP]': 2, '[MASK]': 3}
    for i, w in enumerate(word_list):
        word_dict[w] = i + 4
    # id_to_word
    number_dict = {i: w for i, w in enumerate(word_dict)}
    # 29
    vocab_size = len(word_dict)
    # Convert the raw text to ids (indices) so the model can process it: [[15, 7, 27, 22, 8, 4, 16], ..., []]
    token_list = list()
    for sentence in sentences:
        arr = [word_dict[s] for s in sentence.split()]
        token_list.append(arr)

    # Data
    # batch:[[[],[],[],[],F/T], [[],[],[],[],F/T], [[],[],[],[],F/T], [[],[],[],[],F/T], [[],[],[],[],F/T], [[],[],[],[],F/T]]
    batch = make_batch()  # 1.
    # zip(*batch):[([],[],[],[],[],[]), ([],[],[],[],[],[]), ([],[],[],[],[],[]), ([],[],[],[],[],[]), (F/T, F/T, F/T, F/T, F/T, F/T)]
    input_ids, segment_ids, masked_tokens, masked_pos, isNext = map(torch.LongTensor, zip(*batch))  # convert to tensors
    # Model
    model = BERT()  # 2.
    # Loss function
    criterion = nn.CrossEntropyLoss(ignore_index=0)  # targets equal to 0 ([PAD]) do not contribute to the loss
    # Optimizer
    optimizer = optim.Adam(model.parameters(), lr=0.001)
    # Instantiate the dataset
    dataset = MyDataset(input_ids, segment_ids, masked_tokens, masked_pos, isNext)  # 10.
    # Instantiate the data loader
    dataloader = DataLoader(dataset=dataset, batch_size=batch_size, shuffle=True)

    model.train()
    start = time.time()
    for epoch in range(10):
        # The DataLoader stacks the items returned by MyDataset.__getitem__, which adds the batch dimension back
        for input_ids, segment_ids, masked_tokens, masked_pos, isNext in dataloader:
            logits_lm, logits_clsf = model(input_ids, segment_ids, masked_pos)
            loss_lm = criterion(logits_lm.transpose(1, 2), masked_tokens)  # MLM loss; padded targets (0) are ignored
            loss_lm = (loss_lm.float()).mean()
            # NSP loss (cls classification). Label 0 (NotNext) is a real class here, so criterion
            # is not reused: its ignore_index=0 would drop every NotNext sample from the loss.
            loss_clsf = nn.functional.cross_entropy(logits_clsf, isNext)
            loss = loss_lm + loss_clsf
            if (epoch + 1) % 2 == 0:
                print('Epoch:', '%04d' % (epoch + 1), 'cost=', '{:.6f}'.format(loss))
            optimizer.zero_grad()
            loss.backward()
            optimizer.step()
    # Save the model
    # torch.save(model.state_dict(), './wb_model/wb_bert(cpu).pt')
    end = time.time()
    print(f'Total run time on CPU--->{end - start}s')

    print('-------------------- Predict mask tokens and isNext --------------------')
    # Load data
    batch = make_batch()
    # batch[0]: take sample 0 of the batch [[],[],[],[],F/T]
    # zip(batch[0]):[([],), ([],), ([],), ([],), (F/T,)]
    # [1, 30] [1, 30] [1, 5] [1, 5] [1,]
    input_ids, segment_ids, masked_tokens, masked_pos, isNext = map(torch.LongTensor, zip(batch[0]))  # convert to tensors
    print(text)
    print([number_dict[w.item()] for w in input_ids[0] if number_dict[w.item()] != '[PAD]'])
    # Predict with the model trained above. Creating a fresh BERT() here without loading a
    # checkpoint would predict with random weights; to use saved parameters instead:
    # model = BERT()
    # model.load_state_dict(torch.load('./wb_model/wb_bert(cpu).pt'))
    model.eval()
    # Model prediction
    with torch.no_grad():
        logits_lm, logits_clsf = model(input_ids, segment_ids, masked_pos)  # logits_lm==[1, 5, 29] logits_clsf==[1, 2]
    logits_lm = logits_lm.data.max(2)[1][0].data.numpy()
    print('masked tokens list: ', [pos.item() for pos in masked_tokens[0] if pos.item() != 0])
    print('predict masked tokens list: ', [pos for pos in logits_lm if pos != 0])
    logits_clsf = logits_clsf.data.max(1)[1].data.numpy()[0]
    print('isNext: ', True if isNext else False)
    print('predict isNext: ', True if logits_clsf else False)
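One detail of the loss setup deserves a closer look. `ignore_index=0` is what lets the MLM loss skip the zero-padded target slots, but the NSP labels are also 0 and 1, and there 0 means NotNext rather than padding. The sketch below is a plain-Python stand-in for a mean cross-entropy with an ignore index, showing how many samples actually contribute in each case. The function `cross_entropy` and the toy logits are assumptions for this illustration, not PyTorch's implementation, and the sketch assumes at least one target is kept.

```python
import math


def cross_entropy(logits, targets, ignore_index=None):
    """Mean cross-entropy over the rows whose target differs from ignore_index."""
    losses = []
    for row, target in zip(logits, targets):
        if target == ignore_index:
            continue  # this sample contributes nothing to the loss
        top = max(row)
        log_sum_exp = top + math.log(sum(math.exp(v - top) for v in row))
        losses.append(log_sum_exp - row[target])
    return sum(losses) / len(losses), len(losses)


logits = [[2.0, 0.5], [0.1, 1.2], [1.5, 0.3], [0.2, 0.9]]
is_next = [0, 1, 0, 1]  # 0 = NotNext, 1 = IsNext

loss_all, used_all = cross_entropy(logits, is_next)
loss_ign, used_ign = cross_entropy(logits, is_next, ignore_index=0)
print(used_all, used_ign)  # prints "4 2"
```

With the ignore index set to 0, only the IsNext samples are scored, so a classifier that always answers IsNext is never penalized. A separate loss without `ignore_index` for the NSP head avoids this.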
